Citation and Attribution in RAG Outputs: How to Build Trustworthy LLM Responses

When an AI gives you an answer, how do you know it’s true? If it cites a source, is that source real? In 2025, this isn’t just a technical question; it’s a business requirement. Companies using Retrieval-Augmented Generation (RAG) systems to power customer support, legal research, and financial analysis can’t afford hallucinations. And the only way to fix that is with citation and attribution, not as an afterthought but as the core of every response.

Why Citations Are Non-Negotiable in RAG Systems

Early LLMs were great at sounding confident. They’d invent facts, fabricate studies, and confidently quote nonexistent papers. That worked for casual chat, but not for enterprise use. By 2023, companies realized: if your AI can’t prove where its answers come from, it’s not trustworthy; it’s dangerous.

Research from April 2025 shows baseline RAG systems still get citations wrong in nearly 39% of cases. That’s not a bug. It’s a design flaw. If your system pulls text from a document but misattributes it to the wrong source, users lose trust. And once trust is gone, adoption stops.

The solution isn’t just adding footnotes. It’s building a system where every generated sentence can be traced back to a specific piece of source material, with the right title, date, and context. This isn’t about being academic. It’s about compliance, liability, and user confidence.

How Citations Actually Work in RAG

A RAG system doesn’t just generate text. It retrieves, then generates. Here’s the step-by-step flow:

- Query comes in: “What’s the latest FDA guidance on AI diagnostics?”

- Vector database searches for relevant chunks, say from a PDF of FDA documents.

- Relevant text snippets are pulled, each tagged with metadata: title, author, date, source URL.

- The LLM uses those snippets to build a response.

- The system injects citations: “According to ‘FDA AI Diagnostic Guidelines v3.1, March 2024’…”
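The flow above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a production pipeline: word overlap stands in for vector search, `generate` is a placeholder for the LLM call, and the `Chunk` class, the sample snippets, and the `example.com` URLs are all made up for the example.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    """A retrieved snippet plus the metadata needed to cite it."""
    text: str
    title: str
    date: str
    url: str


def tokenize(text: str) -> set[str]:
    """Lowercased word set; a crude stand-in for an embedding."""
    return {w.strip(".,?!:;'\"()").lower() for w in text.split()} - {""}


def retrieve(query: str, chunks: list[Chunk], top_k: int = 2) -> list[Chunk]:
    """Rank chunks by word overlap with the query (stand-in for vector search)."""
    q = tokenize(query)
    scored = [(len(q & tokenize(c.text)), c) for c in chunks]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored[:top_k]]


def generate(query: str, sources: list[Chunk]) -> str:
    """Placeholder for the LLM call: it simply echoes the top snippet."""
    return sources[0].text if sources else "No supporting source found."


def answer_with_citations(query: str, chunks: list[Chunk]) -> str:
    """Retrieve, generate, then append a citation built from chunk metadata."""
    sources = retrieve(query, chunks)
    body = generate(query, sources)
    if not sources:
        return body
    cites = "; ".join(f"{c.title}, {c.date}" for c in sources)
    return f"{body} (Sources: {cites})"


# Hypothetical sample data for illustration only.
CORPUS = [
    Chunk("Placeholder snippet about FDA guidance on AI diagnostics.",
          "FDA AI Diagnostic Guidelines v3.1", "March 2024",
          "https://example.com/fda-ai"),
    Chunk("Placeholder snippet about office parking rules.",
          "Facilities Handbook", "January 2023",
          "https://example.com/facilities"),
]
```

Calling `answer_with_citations("What's the latest FDA guidance on AI diagnostics?", CORPUS)` cites only the FDA document, because the parking snippet shares no words with the query. The key design point is that the citation string is assembled from stored metadata, never from text the model produced.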

What Makes a Good Citation? Four Rules

Not all citations are equal. Here’s what works in real-world systems:

- Source Title Only - Don’t link to raw URLs. Users don’t care about bit.ly/abc123. They care about “NIST Cybersecurity Framework 2024.” TypingMind’s users reported 89% fewer hallucinated links when only source titles were shown.

- Include Date - A regulation from 2020 isn’t valid in 2025. Citations without dates are misleading. Metadata must include publication or revision date.

- Use Consistent Formatting - If one citation says “(Source: Title, 2024)” and another says “Retrieved from Title, 2024,” users get confused. Pick one style and stick to it.

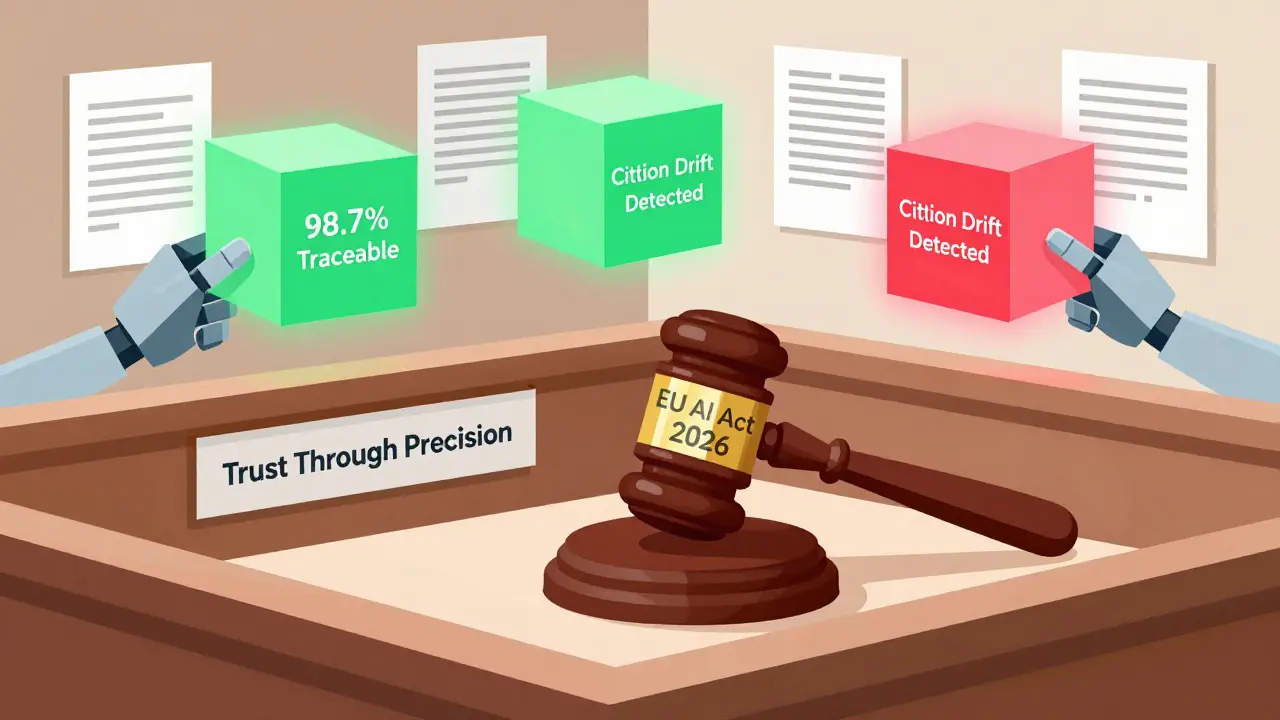

- Link to the Original - Even if you only show the title, make sure the system can map it back to the exact document. Milvus vector database version 2.3.3 achieves 98.7% traceability by storing metadata pointers with each chunk.
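One way to enforce the four rules together is to route every citation through a single formatter. The sketch below is an assumption-laden illustration: `Source`, `CitationRegistry`, and the field names are hypothetical, and the "(Source: Title, Date)" style is just one of the two formats mentioned above, picked arbitrarily.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Source:
    doc_id: str  # internal pointer back to the stored document
    title: str   # the only part of the source shown to users
    date: str    # publication or revision date
    url: str     # kept for traceability, never displayed


class CitationRegistry:
    """Single place where citations are formatted and resolved."""

    def __init__(self):
        self._by_title = {}

    def register(self, source: Source) -> None:
        # Rule 2: refuse sources that have no date.
        if not source.date:
            raise ValueError(f"source {source.doc_id!r} has no date")
        self._by_title[source.title] = source

    def cite(self, title: str) -> str:
        # Rules 1 and 3: title and date only, always in the same style.
        s = self._by_title[title]
        return f"(Source: {s.title}, {s.date})"

    def resolve(self, title: str) -> Source:
        # Rule 4: the displayed title maps back to the exact document.
        return self._by_title[title]
```

Because `cite` is the only function that produces citation text, formatting cannot vary between responses, and a displayed title can always be resolved to its `doc_id` and URL behind the scenes.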

Tools That Actually Work (And Which One to Pick)

You don’t need to build this from scratch. Frameworks like LlamaIndex and LangChain have built-in citation engines. But they’re not plug-and-play.

- LlamaIndex - Uses 512-character chunks as the default “sweet spot.” This balances context and precision. Their citation query engine hits 82.4% accuracy out-of-the-box, but only if your data is clean. If your documents are messy PDFs with headers, footers, and page numbers, accuracy drops fast.

- LangChain - More flexible, but requires more configuration. Better for teams with custom data pipelines.

- TypingMind - A commercial platform that’s already solved the hard parts. Their system achieves 89.7% Precision@5 for citation retrieval. Users rate its citation feature 4.7/5. It’s not open-source, but if you need it to work now, it’s the fastest path.
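To make the chunking idea concrete, here is a minimal sketch of fixed-size chunking where every chunk carries a pointer back to its source. It is not how LlamaIndex, LangChain, or any vendor implements it; the function name, the dictionary keys, and the naive character-based split are assumptions for illustration. Real splitters usually respect sentence boundaries.

```python
def chunk_text(text: str, doc_id: str, size: int = 512) -> list[dict]:
    """Split text into fixed-size character chunks with source pointers."""
    if size <= 0:
        raise ValueError("size must be positive")
    chunks = []
    for start in range(0, len(text), size):
        end = min(start + size, len(text))
        chunks.append({
            "doc_id": doc_id,   # which document the chunk came from
            "start": start,     # character offset where the chunk begins
            "end": end,         # character offset where the chunk ends
            "text": text[start:end],
        })
    return chunks
```

Storing `doc_id` with the offsets means any cited chunk can be located in the original document, which is the property a citation layer depends on regardless of framework.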

The Hidden Problem: Citation Drift

Most people think citation errors happen at retrieval. But the biggest issue? Drift. In multi-turn conversations, the LLM starts paraphrasing. It summarizes. It combines ideas from three different documents. Suddenly, the citation points to “Document A,” but the sentence is actually a blend of A, B, and C. That’s called citation drift. It affects 49% of enterprise RAG systems, according to user surveys. And it’s invisible to basic citation systems.

AWS’s new Bedrock RAG integration (May 2025) tackles this with automatic disambiguation. It flags when a response combines multiple sources and prompts the system to cite all of them. Early tests show a 39.7% drop in citation errors in technical documentation.

LlamaIndex’s upcoming “Citation Pro” feature, announced in April 2025, will dynamically adjust chunk size from 256 to 1024 characters based on content complexity. That means dense legal text gets bigger chunks; simple FAQs get smaller ones. Early results show 18.3% better accuracy.
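A simple way to catch citation drift is to check each generated sentence against every retrieved source, not just the one it cites. The sketch below uses word overlap as a stand-in for a real similarity measure; it is not the method used by AWS or LlamaIndex. The function names, the stopword list, the 0.3 threshold, and the sample documents are all assumptions chosen for illustration.

```python
STOPWORDS = {"the", "a", "an", "and", "are", "be", "for", "must",
             "is", "of", "to", "in", "at"}


def words(text: str) -> set[str]:
    """Lowercased content words with punctuation and stopwords removed."""
    raw = {w.strip(".,;:!?()\"'").lower() for w in text.split()}
    return raw - STOPWORDS - {""}


def supporting_sources(sentence: str, sources: dict[str, str],
                       threshold: float = 0.3) -> list[str]:
    """Titles of every source that covers enough of the sentence's words."""
    sent = words(sentence)
    if not sent:
        return []
    hits = []
    for title, text in sources.items():
        coverage = len(sent & words(text)) / len(sent)
        if coverage >= threshold:
            hits.append(title)
    return hits


def uncited_sources(sentence: str, cited: list[str], sources: dict[str, str],
                    threshold: float = 0.3) -> list[str]:
    """Sources that support the sentence but are missing from its citation."""
    supported = supporting_sources(sentence, sources, threshold)
    return [title for title in supported if title not in cited]


# Hypothetical sample documents for illustration only.
SOURCES = {
    "Document A": "Encryption keys must be rotated every 90 days.",
    "Document B": "Audit logs must be retained for seven years.",
    "Document C": "Visitors must sign in at the front desk.",
}
BLENDED = ("Encryption keys are rotated every 90 days and "
           "audit logs are retained for seven years.")
```

Here `uncited_sources(BLENDED, ["Document A"], SOURCES)` returns `["Document B"]`: the sentence blends two documents but cites only one. In a real system the overlap score would be replaced by embedding similarity or an entailment check, and a non-empty result would trigger either adding the missing citations or regenerating the sentence.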

What You Must Do Before You Deploy

Don’t start coding yet. Do this first:

- Clean your source data - Remove irrelevant content. TypingMind found that cleaning data reduces citation errors by 28%. Delete footnotes, disclaimers, and boilerplate.

- Standardize titles and metadata - Use consistent naming: “[Topic]_[Version]_[Date].pdf.” Include author, date, and source type in metadata.

- Add section summaries - AWS found this increases semantic coverage by 47% and cuts citation drift by 33%.

- Test with real queries - Don’t just test with “What is RAG?” Test with: “What are the 2025 SEC rules on AI disclosures for public companies?” Then check: Can a user find the source?
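The metadata step in the checklist above is easy to automate before anything is indexed. The sketch below validates the “[Topic]_[Version]_[Date].pdf” convention and checks for the required metadata fields. The exact formats are assumptions, since the convention does not specify them: versions are assumed to look like `v3.1`, dates are assumed to be ISO `YYYY-MM-DD`, and the field names are hypothetical.

```python
import re
from datetime import date

# Assumed concrete form of "[Topic]_[Version]_[Date].pdf".
FILENAME_RE = re.compile(
    r"^(?P<topic>[A-Za-z0-9-]+)"
    r"_(?P<version>v\d+(?:\.\d+)*)"
    r"_(?P<date>\d{4}-\d{2}-\d{2})\.pdf$"
)

REQUIRED_FIELDS = ("author", "date", "source_type")


def parse_filename(name: str) -> dict:
    """Extract topic, version, and date, or raise ValueError if malformed."""
    m = FILENAME_RE.match(name)
    if m is None:
        raise ValueError(f"filename does not follow the convention: {name!r}")
    return {
        "topic": m["topic"],
        "version": m["version"],
        "date": date.fromisoformat(m["date"]),  # also rejects impossible dates
    }


def missing_metadata(metadata: dict) -> list[str]:
    """Names of required metadata fields that are absent or empty."""
    return [field for field in REQUIRED_FIELDS if not metadata.get(field)]
```

Running both checks at ingestion time and rejecting any document that fails keeps undated or unlabeled sources out of the index, which is cheaper than discovering them later as unverifiable citations.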

Where This Is Going: Regulation, Standards, and the Future

This isn’t just a technical trend. It’s a legal one. The EU AI Act, effective February 2026, requires source attribution for any factual claim made by AI. Non-compliance means fines up to 7% of global revenue. That’s not a suggestion. It’s a law.

Industry is responding. The RAG Citation Consortium, formed in January 2025 with 47 members including Microsoft, AWS, and LlamaIndex, is drafting machine-readable citation standards. Think RFCs for AI citations: structured, parseable, and verifiable.

Market data confirms the shift. Gartner reports 83% of enterprise RAG deployments now include citations, up from 47% in late 2024. The market for citation-enhanced tools is projected to hit $2.8 billion by 2027.

Adoption is uneven. Financial services (78%), legal tech (82%), and healthcare (65%) are leading. Creative industries? Only 31%. Why? Because compliance matters more than creativity.

Final Reality Check

You can’t just add citations to a broken system. If your data is messy, your chunks are too big, your metadata is missing, or your prompts are vague, your citations will be noise. The goal isn’t to show more citations. It’s to show the right ones. Every time.

Start small. Pick one use case: customer support FAQs. Clean the documents. Set chunk size to 512. Use source titles only. Test with 50 real questions. Measure how many citations users can actually verify. If you get 90%+ accuracy, scale it. If you’re below 70%, go back to data prep. No framework, no model, no vendor can fix bad input.

Trust isn’t built with fancy algorithms. It’s built with clean data, clear labels, and consistent formatting. That’s the real edge in RAG, and it’s the only one that lasts.

- Nov 29, 2025

- Collin Pace
