Generative Innovation Hub

Market Forecast: Adoption Scenarios for Vibe Coding Through 2030

Vibe coding is transforming software development by letting people create apps through speech and visuals instead of typing code. By 2030, as much as 40% of enterprise software could be built this way, but adoption depends on closing gaps in security, cost, and training.

- Mar 6, 2026

- Collin Pace

Travel and Hospitality with Generative AI: Itineraries, Offers, and Service Recovery

Generative AI is reshaping travel and hospitality in 2026 by creating personalized itineraries, hyper-targeted offers, and predictive service recovery. Travelers now experience seamless, anticipatory service, while businesses that fail to adapt risk being left behind.

- Mar 5, 2026

- Collin Pace

- 1

- Permalink

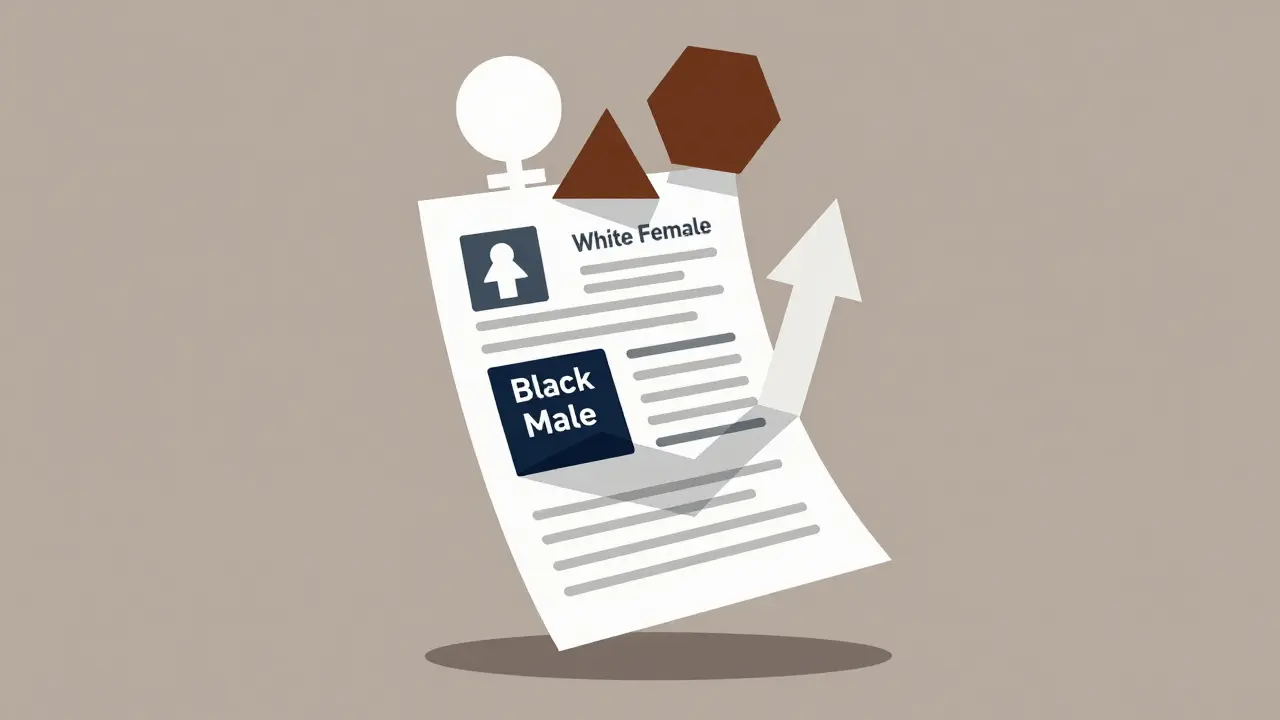

How to Measure Gender and Racial Bias in Large Language Model Outputs

Large language models show measurable gender and racial bias in hiring simulations, favoring white women and penalizing Black men. Despite debiasing efforts, these biases persist, and they have real consequences for employment outcomes.

- Mar 3, 2026

- Collin Pace

When to Compress vs When to Switch Models in Large Language Model Systems

Learn when to compress a large language model and when to switch to a smaller one. Discover the practical trade-offs in cost, accuracy, and hardware that shape real-world AI deployments.

- Mar 2, 2026

- Collin Pace

- 6

- Permalink

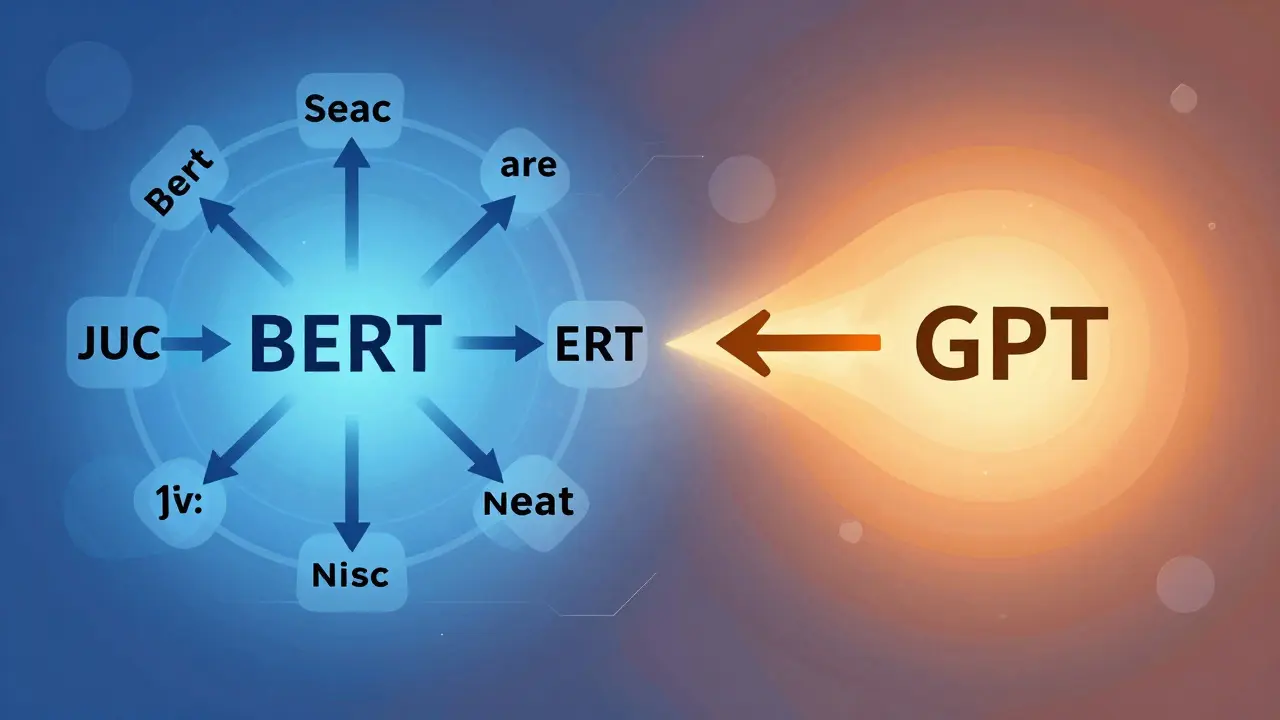

BERT vs GPT: How Encoder-Only and Decoder-Only Models Shape Modern NLP

BERT and GPT revolutionized NLP with opposite approaches: BERT reads text bidirectionally to understand it, while GPT generates text autoregressively, one token at a time. Learn how their architectures shape real-world AI applications today.

- Feb 27, 2026

- Collin Pace

Designers to Builders: How Vibe Coding Turns Figma Designs into Real Apps

Vibe coding uses AI to turn Figma designs into working code, letting designers skip the handoff and build prototypes in minutes. Learn how it works, which tools deliver, and why it isn’t replacing developers but is changing how teams work.

- Feb 26, 2026

- Collin Pace

Video Understanding with Generative AI: Captioning, Summaries, and Scene Analysis

Generative AI now automatically captions, summarizes, and analyzes video content with 89%+ accuracy. Learn how models like Google's Gemini 2.5 work, their real-world limits, and what's coming in 2026.

- Feb 25, 2026

- Collin Pace

How Context Windows Work in Large Language Models and Why They Limit Long Documents

Context windows limit how much text large language models can process at once, affecting document analysis, coding, and long conversations. Learn how they work, why they're a bottleneck, and how to work around them.

- Feb 23, 2026

- Collin Pace

Security Vulnerabilities and Risk Management in AI-Generated Code

AI-generated code is now common in software development, but it can introduce serious security risks such as SQL injection, hardcoded secrets, and cross-site scripting (XSS). Learn how to detect and prevent these vulnerabilities with automated tools, code reviews, and policy changes.

- Feb 20, 2026

- Collin Pace

Pair Reviewing with AI: Human + Model Code Review Workflows

AI code review tools now pair with human developers to catch bugs faster, reduce review fatigue, and improve code quality. Learn how Microsoft, Greptile, and CodeRabbit are transforming pull request workflows with AI-assisted reviews.

- Feb 18, 2026

- Collin Pace

- 8

- Permalink

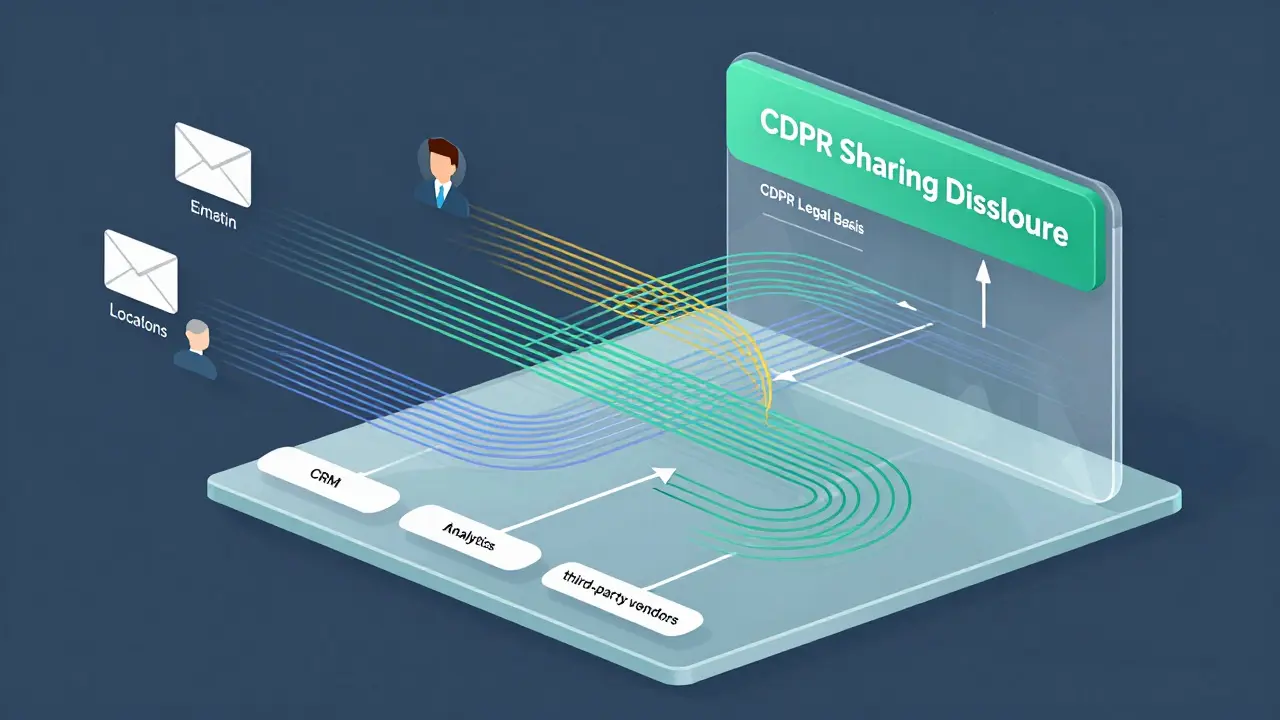

GDPR and CCPA Compliance in Vibe-Coded Systems: Data Mapping and Consent Flows

GDPR and CCPA require detailed data mapping and consent management to avoid fines and ensure compliance. Learn how to build systems that track data flows, document legal bases, and honor user rights without relying on guesswork.

- Feb 17, 2026

- Collin Pace

Democratization of Software Development Through Vibe Coding: Who Can Build Now

Vibe coding lets anyone build software using plain language instead of code. From teachers to small business owners, non-developers are now creating functional apps in hours, not months. Here’s how it works, who’s using it, and what you need to know.

- Feb 15, 2026

- Collin Pace