Comparing LLM Provider Prices: OpenAI, Anthropic, Google, and More in 2026

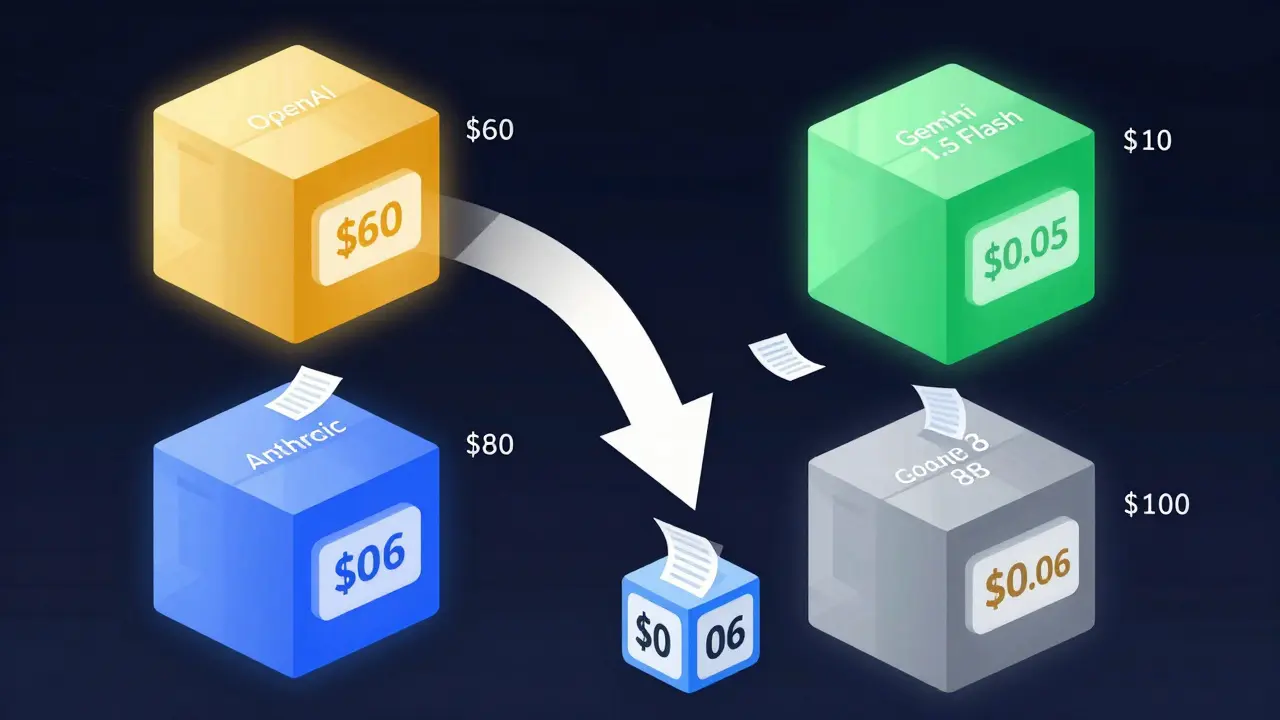

By early 2026, running a chatbot or processing documents with an LLM costs a fraction of what it did two years ago. Prices have dropped by 98% or more since GPT-4 first launched: what used to cost $60 per million tokens now runs as low as $0.06 on the smallest models. But not all models are created equal. Choosing the right provider isn’t just about the lowest number. It’s about matching cost to capability, context, and real-world performance.

How LLM Pricing Works Now

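Today, almost every major provider charges by the token - not by the word, not by the minute, not by the request. A token is roughly 4 characters of English text, or about three-quarters of a word. A short, common word like "hello" is usually a single token; longer or rarer words get split into several. A 10,000-word document? That’s around 13,000 tokens. You pay separately for what you send in (input) and what you get back (output), and output is usually the more expensive of the two.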
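The arithmetic above can be sketched in a few lines. This is a rough illustration, not a billing tool: the 0.75 words-per-token ratio is a rule of thumb for English, the function names are made up for this example, and the prices plugged in are the GPT-4o mini figures quoted later in this article.

```python
def tokens_from_words(word_count: int, words_per_token: float = 0.75) -> int:
    """Rough English-only estimate; real counts depend on the provider's tokenizer."""
    return round(word_count / words_per_token)


def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    """Cost in dollars when input and output are billed at separate per-million rates."""
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000


# A 10,000-word document in, a 500-token summary out (illustrative sizes).
doc_tokens = tokens_from_words(10_000)
cost = request_cost(doc_tokens, 500, input_price_per_m=0.15, output_price_per_m=0.60)
print(f"{doc_tokens} tokens in, 500 out: ${cost:.4f}")
```

For anything beyond a back-of-envelope check, count tokens with the provider's own tokenizer instead of a word ratio.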

Some providers, like Anthropic, add extra layers: cache reads and batch discounts. If you resend the same long prompt prefix, such as a system prompt or a reference document, you can pay less for it the second time. Others, like Google, include a massive 1 million-token context window at no extra charge. OpenAI bills multimodal input such as images as tokens, so the cost shows up in your token count rather than as a separate fee. And Meta’s Llama 3? It’s dirt cheap - but only if you’re okay with using third-party platforms like AWS or Hugging Face.

Here’s the catch: if you waste context, you’re wasting money. Sending 500,000 tokens of irrelevant history just because you didn’t trim it? That could double your bill. One enterprise study found 68% of teams over-padded their prompts, burning 30-50% of their budget before the model even started thinking.
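One common way to avoid over-padding is to cap conversation history at a token budget and keep only the most recent turns. The sketch below assumes history is a plain list of strings and uses the 4-characters-per-token rule of thumb; both function names are hypothetical, and a production version would use a real tokenizer.

```python
def rough_token_count(text: str) -> int:
    """Approximate tokens as characters / 4 (English rule of thumb)."""
    return max(1, len(text) // 4)


def trim_history(messages: list[str], budget: int) -> list[str]:
    """Keep the newest messages whose combined estimate fits within the budget."""
    kept: list[str] = []
    used = 0
    # Walk backwards so the most recent turns are kept first.
    for message in reversed(messages):
        cost = rough_token_count(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = ["a" * 400, "b" * 400, "c" * 400]  # about 100 tokens each
trimmed = trim_history(history, budget=250)
print(len(trimmed), "of", len(history), "messages kept")
```

Dropping the oldest turns is the simplest policy. Summarizing old turns or retrieving only the relevant ones are alternatives when early context matters.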

Frontier Models: Power at a Premium

If you need top-tier reasoning - legal analysis, complex coding, multi-step planning - you’re looking at the premium tier. These models are built for accuracy, not economy.

- GPT-4o ($5.00 input / $15.00 output per million tokens): Still the gold standard for reasoning. Used by 42% of Fortune 500 companies for high-stakes tasks. Its 128K context window handles long reports, but doesn’t match Google’s 1M.

- Claude Opus ($15.00 input / $75.00 output): The most expensive. Used in regulated industries like finance and healthcare. Why? It nails nuance. But a single 500,000-token document analysis costs $7.50 just to read - not even including output.

- GPT-4 Turbo ($10.00 / $30.00): Older, but still reliable. Often used for legacy integrations where upgrading code isn’t worth the risk.

These aren’t for chatbots. They’re for when a single mistake costs money. A bank using Claude Opus to review loan applications might spend $2,000/month - but avoid $500,000 in fraud.

Mid-Tier Models: The Sweet Spot

Most businesses don’t need the absolute best. They need the best value. This is where the real battle is.

- Claude 3.5 Sonnet ($2.50 input / $3.125 output): The quiet winner. It matches 92% of GPT-4o’s performance on the MMLU benchmark, yet at the prices listed here it costs half as much for input and about a fifth as much for output. Anthropic’s cache system saves money on repeated prompts - a huge win for customer service workflows. One fintech startup cut costs by 38% switching from GPT-4o to Sonnet.

- Gemini 1.5 Flash ($0.35 input / $1.05 output): Google’s sleeper hit. It’s not the smartest, but its 1 million-token context window is unmatched. If you’re analyzing contracts, research papers, or entire codebases, this slashes API calls. One legal tech firm went from 12 calls per document to 3.

- Llama 3 70B ($0.70 input / $0.90 output): Available via AWS Bedrock, Azure, and others. It’s open-source, so prices vary. Some providers charge 30% more than Meta’s direct API. Still, for pure text processing, it’s hard to beat.

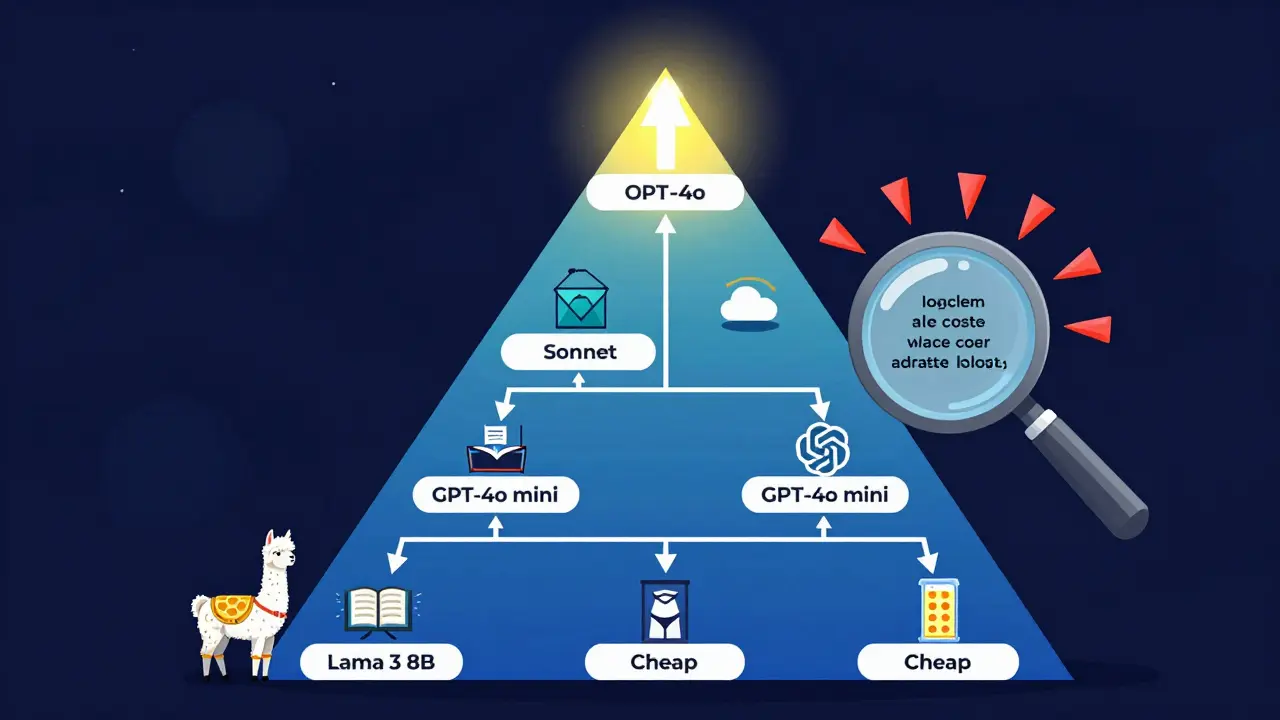

Dr. Elena Rodriguez from Gartner says the smartest move is a cascade: use Sonnet for 80% of tasks, and only escalate the tricky 20% to Opus. That cuts costs to 35% of what you’d spend going all-in on premium.
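To see how an 80/20 cascade plays out with the prices listed in this article, here is a minimal sketch. It assumes every task uses the same number of tokens and that escalated tasks go straight to Opus without a first attempt on Sonnet. Real workloads will differ, so treat the result as a ballpark in the same range as the quoted 35%, not a reproduction of it.

```python
# Per-million-token prices (input, output) as listed in this article.
SONNET = (2.50, 3.125)
OPUS = (15.00, 75.00)


def blended_price(cheap: float, premium: float, escalation_rate: float) -> float:
    """Average price per million tokens when a share of traffic is escalated."""
    return (1 - escalation_rate) * cheap + escalation_rate * premium


for label, idx in (("input", 0), ("output", 1)):
    blended = blended_price(SONNET[idx], OPUS[idx], escalation_rate=0.20)
    share = blended / OPUS[idx]
    print(f"{label}: ${blended:.2f} per million tokens, {share:.0%} of all-Opus")
```

Under these assumptions the blend comes to about a third of the all-Opus input price and under a quarter of the output price. The escalated 20% still accounts for most of the spend, which is why the escalation rate is the number to watch.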

Budget Models: Cheap, But Not Always Cheap Enough

These are the workhorses. They handle simple tasks well - FAQs, content summarization, basic classification. But they crack under pressure.

- GPT-4o mini ($0.15 input / $0.60 output): OpenAI’s budget option. 128K context. Good for internal tools. One SaaS company reduced its monthly LLM bill from $1,200 to $180 switching to this.

- Claude 3 Haiku ($0.25 input / $1.25 output): One of the fastest models on the market. But it fails 37% of complex coding tasks that Sonnet handles easily. Great for chatbots. Terrible for contract review.

- Llama 3 8B ($0.06-$0.10 input/output): The cheapest option. Available on SiliconFlow and Hugging Face. Only 8K context. You’ll need to chunk everything. But if you’re building a hobby project or testing ideas? This is the go-to.

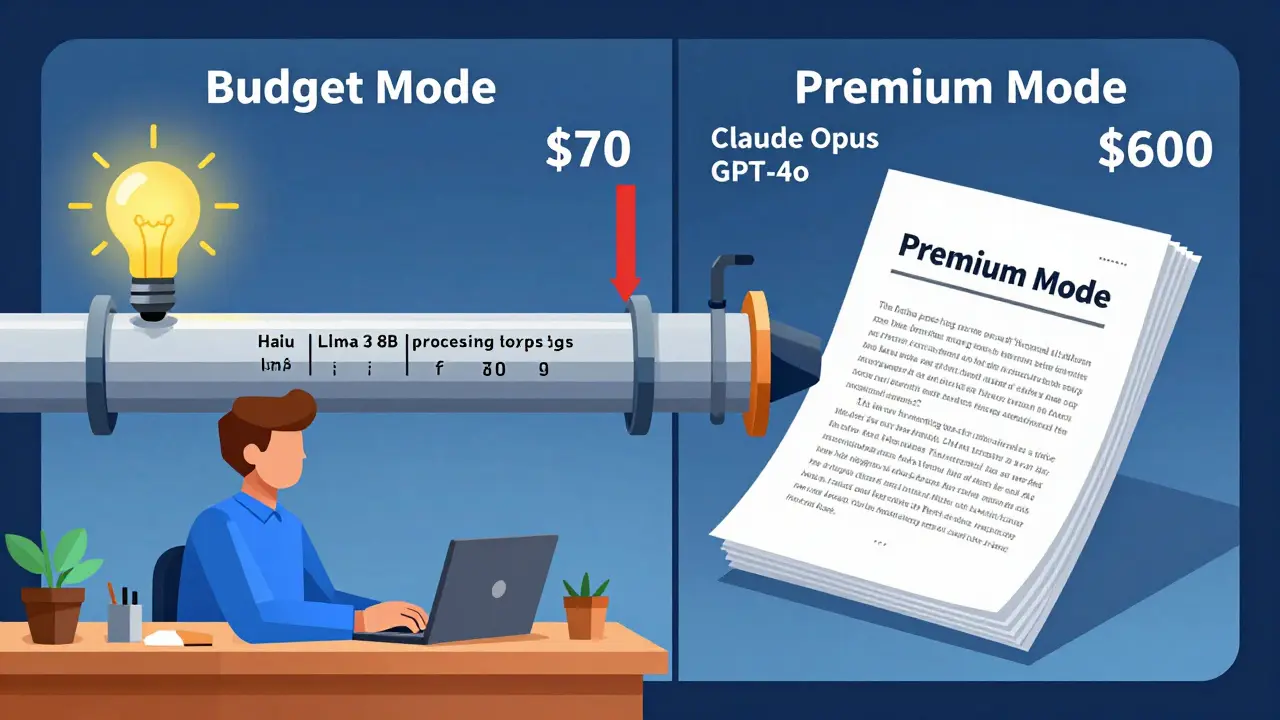

Reddit user u/AI_Builders switched their entire customer support bot from GPT-4o to Haiku. Monthly cost: $600 → $70. Accuracy dropped 8% - but only on edge cases. Most users never noticed.

Hidden Costs and Real-World Pitfalls

Price tags don’t tell the whole story.

Context windows: If your model can only handle 8K tokens and your document is 120K, you need 15 API calls. Each call adds latency and cost. Gemini’s 1M window? That’s one call.
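The call count is a ceiling division, and it gets worse once part of each window is set aside for instructions and the reply. A minimal sketch, where `reserved_per_call` is a hypothetical parameter for this example and "8K" is taken as 8,000 tokens:

```python
import math


def calls_needed(doc_tokens: int, context_window: int, reserved_per_call: int = 0) -> int:
    """Number of API calls needed to push a document through a fixed window.

    reserved_per_call is the slice of each window taken up by instructions
    and room for the model's reply.
    """
    usable = context_window - reserved_per_call
    if usable <= 0:
        raise ValueError("reserved_per_call leaves no room for document text")
    return math.ceil(doc_tokens / usable)


print(calls_needed(120_000, 8_000))                           # 15
print(calls_needed(120_000, 8_000, reserved_per_call=1_000))  # 18
print(calls_needed(120_000, 1_000_000))                       # 1
```

Reserving just 1,000 tokens per call pushes the 120K document from 15 calls to 18, and those reserved tokens are billed on every call.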

Token counting: Different providers count tokens differently. The same sentence might be 100 tokens on OpenAI, 112 on Anthropic. That 12% variation adds up fast.

Non-English text: Chinese, Arabic, or Korean? Expect 25-40% more tokens than English. Budget for it.

Retries: If a model gives a bad answer, you ask again. That doubles your cost. MIT’s AI Economics Lab found that Claude 3.5 Sonnet had 18% lower cost-per-useful-output (CPUT) than GPT-4o - not because it was cheaper per token, but because it got it right the first time.
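A simple way to reason about retries: if each attempt succeeds independently with probability p, you need 1/p attempts on average, so the expected cost of one useful answer is the per-attempt cost divided by p. The sketch below uses made-up numbers to show the effect; it is not the lab's methodology, and the function name is an assumption for this example.

```python
def cost_per_useful_output(cost_per_attempt: float, success_rate: float) -> float:
    """Expected spend per acceptable answer, assuming independent retries."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return cost_per_attempt / success_rate


# Hypothetical models: one cheaper per call, one more reliable.
cheaper = cost_per_useful_output(cost_per_attempt=0.010, success_rate=0.70)
pricier = cost_per_useful_output(cost_per_attempt=0.012, success_rate=0.95)
print(f"cheaper per call: ${cheaper:.4f} per useful answer")
print(f"pricier per call: ${pricier:.4f} per useful answer")
```

With these illustrative figures the model that costs 20% more per call ends up cheaper per useful answer.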

And then there’s the cache. Anthropic charges less for cached input, meaning prompt prefixes it has already processed rather than repeated answers - but only if you understand how their system works. One team spent three weeks debugging why their costs spiked. Turns out, they weren’t sending the right cache keys.
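To get a feel for what a working cache is worth, here is a rough model of the cost of a shared prompt prefix. The two multipliers are assumptions based on Anthropic's published prompt-caching pricing at the time of writing (cache writes at 1.25x the base input price, cache reads at 0.1x), so verify them before relying on the numbers. The sketch also assumes every request after the first arrives while the cache entry is still alive.

```python
CACHE_WRITE_MULTIPLIER = 1.25  # assumption: first request pays a premium to write the cache
CACHE_READ_MULTIPLIER = 0.10   # assumption: later requests pay a fraction to read it


def prefix_cost(prefix_tokens: int, requests: int,
                input_price_per_m: float, cached: bool) -> float:
    """Dollar cost of sending the same prompt prefix on every request."""
    if requests <= 0:
        return 0.0
    base = prefix_tokens * input_price_per_m / 1_000_000
    if not cached:
        return base * requests
    return base * (CACHE_WRITE_MULTIPLIER + (requests - 1) * CACHE_READ_MULTIPLIER)


# 10,000-token system prompt, 100 requests, at the article's Sonnet input price.
without_cache = prefix_cost(10_000, 100, 2.50, cached=False)
with_cache = prefix_cost(10_000, 100, 2.50, cached=True)
print(f"no cache: ${without_cache:.2f}, cache hits: ${with_cache:.2f}")
```

If requests miss the cache, each one pays the write premium again, which is one way a caching setup can raise a bill instead of lowering it.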

Who Should Use What?

Here’s a quick guide:

- Startups & side projects: Use Llama 3 8B or GPT-4o mini. You’re not processing legal docs. You’re testing ideas. Save every penny.

- Customer support bots: Claude Haiku or GPT-4o mini. They handle 80% of queries perfectly. Let the human agents take the rest.

- Document processing (contracts, reports): Gemini 1.5 Flash or Claude 3.5 Sonnet. Long context is non-negotiable. Don’t risk splitting files.

- Finance, legal, healthcare: Claude Opus or GPT-4o. Accuracy matters more than cost. A single error here could cost millions.

- Enterprise hybrid: Use a cascade. Haiku for simple, Sonnet for complex. Gartner says this is now the standard for 67% of Fortune 500 companies.
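The cascade idea from the guide above can be sketched as a small routing function. Everything here is a stand-in: the model functions are fakes that return canned strings, and the quality check is a placeholder for whatever signal a real system would use (a confidence score, a validator, a schema check).

```python
from typing import Callable


def cascade(prompt: str,
            cheap_model: Callable[[str], str],
            strong_model: Callable[[str], str],
            is_good_enough: Callable[[str], bool]) -> tuple[str, str]:
    """Try the cheap model first and escalate only when its answer fails the check."""
    answer = cheap_model(prompt)
    if is_good_enough(answer):
        return "cheap", answer
    return "strong", strong_model(prompt)


# Stand-in functions for illustration; real ones would call a provider's API.
def fake_cheap(prompt: str) -> str:
    return "UNSURE" if "contract" in prompt else "Your order ships Monday."


def fake_strong(prompt: str) -> str:
    return "Clause 4 limits liability to fees paid."


def not_unsure(answer: str) -> bool:
    return answer != "UNSURE"


print(cascade("Where is my order?", fake_cheap, fake_strong, not_unsure))
print(cascade("Summarize this contract clause.", fake_cheap, fake_strong, not_unsure))
```

Note that an escalated request pays for both calls, so this pattern only saves money when the cheap model handles most of the traffic.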

What’s Next? The Price War Isn’t Over

Prices are still falling. Meta’s Llama 3.1 8B just dropped to $0.06 per million tokens - down 40% in a month. OpenAI plans consumption-based tiers by Q3 2026. Anthropic is overhauling its cache system to make pricing clearer. Google promises to match competitors by Q4.

Forrester predicts another 50% drop by end of 2026. Why? Open-source models are eating market share. Llama 3 captured 19% of new enterprise contracts in Q1 2026. Providers that don’t cut prices 25% every quarter? They’re fading.

Don’t just pick the cheapest. Pick the one that fits your task. A $10 model that fails 20% of the time costs more than a $3 model that gets it right.

Which LLM provider is cheapest overall?

Llama 3 8B, available through SiliconFlow or Hugging Face, is currently the cheapest at $0.06 per million tokens for both input and output. But it only handles 8K context, so it’s only ideal for very short tasks. For longer documents, Gemini 1.5 Flash at $0.35 input/$1.05 output offers better value.

Is Claude more expensive than OpenAI?

It depends on the model. Claude Opus is more expensive than GPT-4o. But Claude 3.5 Sonnet is cheaper than GPT-4o and performs nearly as well. OpenAI’s pricing is simpler - its cache discount is applied automatically - so it’s easier to predict. Anthropic’s system has to be set up explicitly: it saves money if you use it right, but can surprise you if you don’t.

Should I use GPT-4o mini for everything?

No. GPT-4o mini is great for simple tasks like summarizing emails or answering FAQs. But it struggles with complex reasoning, coding, or long documents. If you’re doing anything that requires deep analysis, you’ll get worse results - and end up paying more because you need to retry or switch models anyway.

Why does context window size matter for cost?

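If your model can only process 8K tokens at a time, but your document is 100K, you have to split it into at least 13 pieces. Each split = one API call. Each call has overhead - latency, repeated instructions, retry risk. Gemini 1.5 Flash’s 1M context means you send one piece. That’s 13x fewer calls. The token bill doesn’t shrink by the same factor, because you still pay for every token in the document, but you stop paying to resend the same instructions with each chunk and you avoid stitching partial answers back together.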

Do I need to pay extra for image input or JSON output?

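Usually not as a separate fee. Image input is converted to tokens and billed at the model’s normal rate, and structured output (like JSON) is billed as ordinary output tokens. Both GPT-4o and GPT-4o mini support these features, but not every budget or hosted open model does. A large image can add hundreds of tokens or more to a request, so the cost is real even without a surcharge. Always check if your use case requires these features before choosing a model.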

How can I reduce my LLM costs?

Trim context. Only send what’s needed. Use caching where available. Combine models - use budget models for simple tasks, premium for complex ones. Monitor token usage. And never assume your prompt length equals token count - use a tokenizer tool to check.

Next Steps

If you’re just starting out, test three models: GPT-4o mini, Claude Haiku, and Gemini 1.5 Flash. Run the same 10 tasks on each. Track cost, speed, and accuracy. You’ll quickly see which one fits your needs.

For teams already using LLMs, audit your prompts. Are you sending 50,000 tokens of history? Are you using the right model for each job? Are you caching repeated prompts? Fix those three things, and you can recover much of the 30-50% of budget that over-padded prompts typically burn.

The AI race isn’t about who’s smartest anymore. It’s about who’s smartest at spending less. And right now, the winners aren’t the ones with the flashiest models - they’re the ones who know how to use them wisely.

- Feb 7, 2026

- Collin Pace
