Confidential Computing for Privacy-Preserving LLM Inference: How Secure AI Works Today

Imagine sending your medical records to an AI doctor that can spot early signs of disease, without worrying that the cloud provider, or anyone else running the system, can see your name, address, or diagnosis. Or picture a bank using an AI to analyze millions of loan applications without exposing customer data to anyone, not even the engineers who built the system. This isn’t science fiction. It’s confidential computing for LLM inference, and it’s already running in production for some of the most sensitive industries in the world.

Large language models are powerful. But they’re also dangerous if your data isn’t protected. Traditional encryption keeps data safe when it’s stored or moving across networks. But once it lands in the server’s memory to be processed? It’s wide open. That’s the gap confidential computing fills. It creates a hardware-enforced safe zone inside the CPU or GPU where your data and the AI model’s weights are decrypted only inside the protected region and stay encrypted everywhere else in memory, hidden from the cloud provider, the operating system, and even root-level attackers.

How Confidential Computing Actually Works

At its core, confidential computing relies on Trusted Execution Environments (TEEs). These are hardware-isolated regions inside modern processors that act like digital vaults. Intel’s Trust Domain Extensions (TDX), AMD’s SEV-SNP, and NVIDIA’s Compute Protected Regions (CPR) are the main technologies powering this today; with TDX and SEV-SNP, the protected region is an entire virtual machine. They don’t just encrypt data; they enforce strict rules on who can access it and when.

Here’s the step-by-step flow:

- You send an encrypted prompt (like a question or medical note) over TLS 1.3.

- The request enters a secure hardware enclave on the server.

- Inside the enclave, your data is decrypted, but only within that locked memory space.

- The LLM runs its inference entirely inside the enclave. The model weights? Also encrypted outside, decrypted only inside.

- The response is encrypted again before it leaves the enclave.

- Only the final answer reaches you. Nothing else.
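To make the boundary concrete, here is a toy Python model of that flow using only the standard library. It is a sketch under heavy assumptions: the hand-rolled SHA-256 keystream plus HMAC stands in for TLS 1.3 and AES-GCM purely so the example runs without dependencies (do not use it for real data), and `ToyEnclave`, `seal`, and `unseal` are hypothetical names, not any vendor’s API. What it shows is the shape of the guarantee: the prompt and the answer exist as plaintext only inside `handle()`, and only sealed bytes cross the boundary in either direction.

```python
import hashlib
import hmac
import secrets

# TOY cipher for illustration only: a SHA-256 counter-mode keystream with an
# HMAC-SHA256 tag (encrypt-then-MAC). Real systems use TLS 1.3 and AES-GCM
# or similar, with the TLS session terminating inside the enclave.
def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    out = bytearray()
    counter = 0
    while len(out) < length:
        block = hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        out.extend(block)
        counter += 1
    return bytes(out[:length])


def seal(key: bytes, plaintext: bytes) -> bytes:
    nonce = secrets.token_bytes(16)
    stream = _keystream(key, nonce, len(plaintext))
    ciphertext = bytes(p ^ s for p, s in zip(plaintext, stream))
    tag = hmac.new(key, nonce + ciphertext, hashlib.sha256).digest()
    return nonce + ciphertext + tag


def unseal(key: bytes, blob: bytes) -> bytes:
    nonce, ciphertext, tag = blob[:16], blob[16:-32], blob[-32:]
    expected = hmac.new(key, nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("ciphertext was tampered with")
    stream = _keystream(key, nonce, len(ciphertext))
    return bytes(c ^ s for c, s in zip(ciphertext, stream))


class ToyEnclave:
    """Hypothetical stand-in for the TEE boundary: plaintext exists only
    inside handle(); everything passed in or out is sealed."""

    def __init__(self, session_key: bytes):
        self._key = session_key

    def handle(self, sealed_prompt: bytes) -> bytes:
        prompt = unseal(self._key, sealed_prompt).decode()  # decrypted inside only
        answer = f"answer to: {prompt}"  # placeholder for real LLM inference
        return seal(self._key, answer.encode())  # re-encrypted before leaving


# In practice the session key comes from an attested TLS handshake;
# here the client and the enclave simply share it.
key = secrets.token_bytes(32)
enclave = ToyEnclave(key)
sealed_request = seal(key, b"patient note: chest pain")
sealed_reply = enclave.handle(sealed_request)  # only sealed bytes cross over
print(unseal(key, sealed_reply).decode())  # -> answer to: patient note: chest pain
```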

This isn’t just about hiding your data. It’s about protecting the AI itself. Companies like Anthropic and OpenAI spent billions training their models. If those weights leak, their competitive edge vanishes. Confidential computing lets them offer inference services without handing over the crown jewels.

Who’s Building This, and What’s Different?

Not all confidential computing setups are the same. The big cloud providers have taken different paths, each with trade-offs.

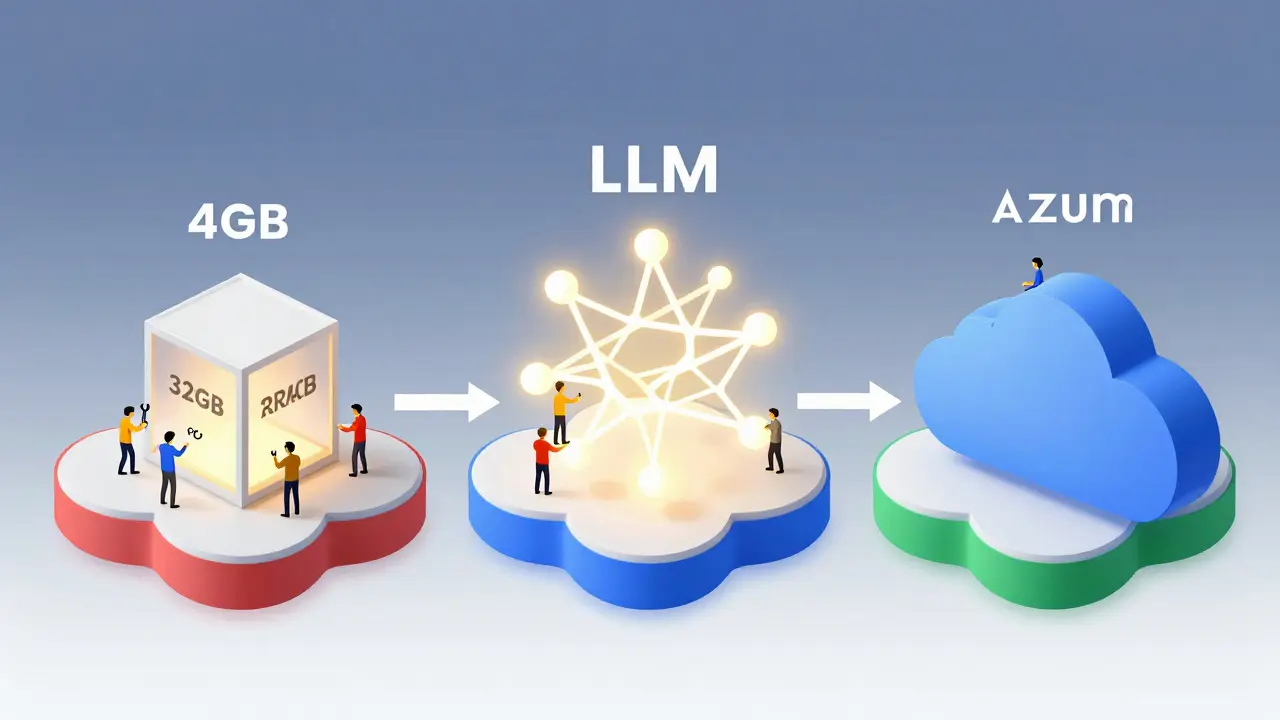

AWS Nitro Enclaves launched in 2020 and is the most mature for LLM workloads. It uses lightweight VMs that run alongside EC2 instances. But there’s a catch: each enclave is capped at 4GB of RAM and 2 vCPUs. That’s fine for small models, but if you’re running Llama 2-70B, you’re forced to quantize the model-cutting precision and losing up to 3.2% accuracy, according to users on Reddit. Still, Leidos used it successfully for government document analysis with 99.8% accuracy parity, thanks to custom tooling.

Microsoft Azure Confidential Inferencing leans on AMD’s SEV-SNP and supports up to 32GB RAM and 16 vCPUs per VM. That’s a big advantage for larger models. Microsoft also nailed integration with Azure Machine Learning, making it easier to deploy models without rewriting pipelines. Their biggest weakness? GPU support only arrived in Q1 2025. Before that, inference was CPU-bound: slow and expensive.

Google Cloud Confidential VMs use Intel TDX and support up to 224GB RAM and 56 vCPUs, the most memory of any platform. But until their October 2024 partnership with NVIDIA, they had no GPU acceleration. Now they’re catching up fast, targeting 95% performance parity with native inference by end of 2025.

Red Hat’s OpenShift sandboxed containers took a different approach. Instead of handing teams confidential VMs to manage, they built confidential computing into Kubernetes. This matters because most AI teams already run models in containers. Red Hat’s solution lets you deploy encrypted OCI images directly into secure pods, with no VMs to manage yourself and no re-architecting. It’s the most developer-friendly option for teams already on Kubernetes.

And then there’s the academic route. Researchers at the University of Michigan introduced Secure Partitioned Decoding (SPD) and Prompt Obfuscation (PO)-software techniques that add cryptographic layers on top of TEEs. These help block side-channel attacks that try to infer data from timing or memory access patterns. But they’re not replacements for hardware. They’re supplements.
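The general idea behind decoy-style obfuscation can be sketched in a few lines. This assumes a simplified scheme in which the client hides its real prompt at a random position among decoys and keeps that position secret; it is not the researchers’ published PO algorithm, and the hand-written decoys are placeholders for the plausible, generated ones a real scheme would need. `obfuscate` and `recover` are hypothetical helpers.

```python
import random

_rng = random.SystemRandom()  # shuffles using the OS CSPRNG


def obfuscate(real_prompt: str, decoys: list[str]) -> tuple[list[str], int]:
    """Hide the real prompt at a random position among decoys.

    Returns the batch to send and the real prompt's index, which the
    client keeps secret. Tracking indices (not values) stays correct even
    if a decoy happens to equal the real prompt."""
    items = list(decoys) + [real_prompt]
    order = list(range(len(items)))
    _rng.shuffle(order)
    batch = [items[k] for k in order]
    real_index = order.index(len(items) - 1)
    return batch, real_index


def recover(responses: list[str], real_index: int) -> str:
    """Client side: keep only the response to the real prompt."""
    return responses[real_index]


decoys = [  # hand-written here; a real scheme would generate plausible decoys
    "Summarize this hiking trip report",
    "Summarize this quarterly sales memo",
    "Summarize this recipe review",
]
batch, idx = obfuscate("Summarize this biopsy report", decoys)
responses = [f"summary of: {p}" for p in batch]  # stand-in for server inference
print(recover(responses, idx))  # -> summary of: Summarize this biopsy report
```

The trade-off is visible in the code: hiding one prompt among n candidates multiplies inference work by roughly n, and the protection is only as good as the decoys are indistinguishable from the real prompt.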

Performance, Cost, and Real-World Trade-Offs

Confidential computing isn’t free. You pay in performance.

On AWS and Azure, you’re looking at 5-15% overhead compared to non-confidential inference. NVIDIA’s Hopper and Blackwell GPUs, with their dedicated CPR hardware, reach 90-95% of native speed. That’s huge. But it only works if you’re using H100s or newer. Older GPUs? Not compatible.
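Overhead percentages translate into capacity planning with a little arithmetic. The sketch below uses hypothetical throughput numbers, not measurements from any of the platforms above.

```python
def percent_of_native(native_tps: float, confidential_tps: float) -> float:
    """Confidential throughput as a percentage of native throughput."""
    return 100.0 * confidential_tps / native_tps


def overhead_pct(native_tps: float, confidential_tps: float) -> float:
    """Throughput lost to confidential mode, in percent."""
    return 100.0 - percent_of_native(native_tps, confidential_tps)


def capacity_multiplier(overhead: float) -> float:
    """How much more hardware keeps total throughput constant when each
    instance loses `overhead` percent of its speed."""
    return 1.0 / (1.0 - overhead / 100.0)


# Hypothetical measurements in tokens per second
native, confidential = 1000.0, 920.0
print(percent_of_native(native, confidential))  # -> 92.0
print(overhead_pct(native, confidential))       # -> 8.0
print(f"{capacity_multiplier(15.0):.2f}")       # -> 1.18
```

Note the asymmetry in the last line: at 15% overhead you need about 18% more capacity (1 / 0.85), not 15%, to keep total throughput constant.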

Memory is the silent killer. Many TEEs limit how much RAM you can use inside the enclave. If your model is 30GB and your enclave only allows 16GB? You need to prune, quantize, or split the model. Each step eats accuracy. One healthcare client using Azure reported a 4.1% drop in diagnostic accuracy after quantizing a 13B-parameter model to fit inside the memory limit.
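A back-of-the-envelope fit check helps decide between quantizing and splitting before you touch the hardware. This sketch counts weights only and reserves a hypothetical 20% of each enclave for the runtime, activations, and KV cache; real headroom depends on your serving stack and context length.

```python
import math


def weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in decimal GB.
    bytes = params * bits / 8, and billions of bytes = GB."""
    return params_billion * bits_per_weight / 8


def enclaves_needed(params_billion: float, bits_per_weight: float,
                    enclave_gb: float, usable_fraction: float = 0.8) -> int:
    """How many enclaves of `enclave_gb` the weights must be split across.

    `usable_fraction` is a hypothetical allowance: the remaining memory is
    reserved for the runtime, activations, and KV cache."""
    usable_gb = enclave_gb * usable_fraction
    return math.ceil(weights_gb(params_billion, bits_per_weight) / usable_gb)


for params, bits, cap in [(70, 16, 4), (70, 4, 4), (13, 16, 16), (13, 4, 16)]:
    print(f"{params}B @ {bits}-bit = {weights_gb(params, bits):.1f} GB, "
          f"{enclaves_needed(params, bits, cap)} enclave(s) of {cap} GB")
```

The arithmetic also explains why quantization alone can’t rescue a 70B model on a small enclave: at 4 bits the weights are still about 35 GB, nearly nine times a 4 GB cap, so splitting has to enter the picture.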

Latency matters too. The first request after a cold start can take 1.2-2.8 seconds longer because of attestation-the cryptographic handshake that proves the enclave is real and untampered. For chatbots or real-time applications, that’s a dealbreaker. Microsoft is targeting a 50% reduction in attestation time by Q3 2025. That’ll help.
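The attestation handshake, and one way to confine its cost to the first request, can be sketched as follows. Everything here is simulated: `VENDOR_KEY`, `make_report`, and `AttestedConnection` are hypothetical, and HMAC replaces the vendor-rooted asymmetric signatures that real reports use. The verifier checks three things: the signature, that the measurement matches the build it expects, and that the report echoes its fresh nonce (so an old report can’t be replayed).

```python
import hashlib
import hmac
import secrets
import time
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical stand-in for the CPU vendor's signing key. Real SEV-SNP and
# TDX reports carry asymmetric signatures that chain to vendor certificates;
# HMAC is used here only to keep the sketch dependency-free.
VENDOR_KEY = secrets.token_bytes(32)


@dataclass
class Report:
    measurement: bytes  # hash of the code and config loaded into the TEE
    nonce: bytes        # verifier's challenge, proves the report is fresh
    signature: bytes


def make_report(measurement: bytes, nonce: bytes) -> Report:
    """What the simulated hardware returns when asked to attest."""
    sig = hmac.new(VENDOR_KEY, measurement + nonce, hashlib.sha256).digest()
    return Report(measurement, nonce, sig)


def verify(report: Report, expected_measurement: bytes, nonce: bytes) -> bool:
    expected_sig = hmac.new(VENDOR_KEY, report.measurement + report.nonce,
                            hashlib.sha256).digest()
    return (hmac.compare_digest(report.signature, expected_sig)
            and hmac.compare_digest(report.measurement, expected_measurement)
            and hmac.compare_digest(report.nonce, nonce))


class AttestedConnection:
    """Attest on first use, then reuse the result for `ttl_s` seconds so only
    the cold-start request pays for the handshake."""

    def __init__(self, get_report: Callable[[bytes], Report],
                 expected_measurement: bytes, ttl_s: float = 300.0,
                 clock: Callable[[], float] = time.monotonic):
        self._get_report = get_report
        self._expected = expected_measurement
        self._ttl = ttl_s
        self._clock = clock
        self._verified_at: Optional[float] = None
        self.attestations = 0  # how many full attestations have run

    def ensure_attested(self) -> None:
        now = self._clock()
        if self._verified_at is not None and now - self._verified_at < self._ttl:
            return  # still inside the trust window
        nonce = secrets.token_bytes(16)
        report = self._get_report(nonce)
        self.attestations += 1
        if not verify(report, self._expected, nonce):
            self._verified_at = None
            raise PermissionError("enclave failed attestation")
        self._verified_at = now


good = hashlib.sha256(b"inference-server-image-v1").digest()
conn = AttestedConnection(lambda n: make_report(good, n), good)
for _ in range(3):
    conn.ensure_attested()
print(conn.attestations)  # -> 1
```

The `ttl_s` window is a policy choice, not a standard value: a longer window hides more cold-start latency but means trusting a verification result for longer.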

And debugging? It’s brutal. You can’t just SSH into the machine. You can’t log everything. You’re working inside a black box. Developers report 30-50% longer development cycles because of the lack of visibility. Documentation varies wildly. Microsoft’s guides scored 4.3/5 on GitHub. AWS’s? 3.7/5-with users complaining, “Great theory, but where’s the LLM example code?”
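Since you can’t log everything, one defensive pattern is to log only request metadata and have the logging layer refuse anything else. This is a sketch with Python’s standard `logging` module; the allowlist contents and the helper names are assumptions, not a vendor-recommended setup.

```python
import logging

# Only these keys may appear in a log record's structured payload.
ALLOWED_FIELDS = {"request_id", "prompt_chars", "response_chars", "latency_ms"}


class MetadataOnlyFilter(logging.Filter):
    """Drop any record that lacks a `fields` dict or carries keys outside
    ALLOWED_FIELDS, so prompt or response text can't slip into the logs."""

    def filter(self, record: logging.LogRecord) -> bool:
        fields = getattr(record, "fields", None)
        return isinstance(fields, dict) and set(fields) <= ALLOWED_FIELDS


def request_metadata(request_id: str, prompt: str, response: str,
                     latency_ms: float) -> dict:
    """Summarize a request by sizes and timing, never by content."""
    return {
        "request_id": request_id,
        "prompt_chars": len(prompt),
        "response_chars": len(response),
        "latency_ms": round(latency_ms, 1),
    }


logger = logging.getLogger("enclave")
logger.setLevel(logging.INFO)
logger.propagate = False  # keep records away from root handlers
handler = logging.StreamHandler()
handler.addFilter(MetadataOnlyFilter())
handler.setFormatter(logging.Formatter("%(levelname)s %(message)s %(fields)s"))
logger.addHandler(handler)

meta = request_metadata("req-001", "Patient reports chest pain",
                        "Refer to cardiology", 812.34)
logger.info("inference complete", extra={"fields": meta})   # emitted
logger.info("raw prompt: %s", "Patient reports chest pain")  # dropped by the filter
```

Putting the filter on the handler means offending records are dropped before formatting; if other handlers are attached to the same logger, each one needs the filter too.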

Who’s Using This, and Why?

This isn’t for hobbyists. It’s for regulated industries.

- Healthcare: Hospitals use it to analyze patient notes, imaging reports, and genetic data under HIPAA. One U.S. hospital system reduced false positives in cancer screening by 19% using confidential LLMs, without ever exposing PHI to third-party AI vendors.

- Finance: Banks run credit scoring and fraud detection on encrypted transaction data. A European bank using Google Cloud cut GDPR violation risks by 89% and met their 150ms SLA after optimizing their pipeline.

- Government: Defense contractors analyze classified documents. Leidos used AWS Nitro Enclaves to process 2.4 million redacted files with zero data leaks.

Market data shows 47% of adoption is in financial services, 32% in healthcare, and 14% in government. Most deployments (63%) are in private or hybrid clouds, because organizations want control over where their data lives.

And it’s growing fast. IDC predicts 65% of enterprise AI in regulated sectors will use confidential computing by 2027. That’s an 87% annual growth rate. The global market for confidential computing hit $2.8 billion in 2024, with AI workloads making up $1.04 billion of that. By 2027, it’s projected to hit $14.3 billion.
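The market-size figures can be sanity-checked with the compound annual growth rate formula, (end / start)^(1 / years) - 1. A minimal sketch:

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate, as a fraction (0.25 means 25% per year)."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Market size figures quoted above, in billions of US dollars
rate = cagr(2.8, 14.3, 2027 - 2024)
print(f"{rate:.0%}")  # -> 72%
```

Growing from $2.8 billion in 2024 to $14.3 billion in 2027 works out to roughly 72% per year for the overall market. The 87% figure in the text is attached to the IDC adoption forecast rather than to market size, so the two rates aren’t directly comparable.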

What You Need to Get Started

If you’re considering this, here’s what you need to know before you start:

- Hardware check: Do you have Intel 4th Gen Xeon (Sapphire Rapids) or newer, AMD EPYC 3rd Gen (Milan) or newer, or NVIDIA Hopper/Blackwell? If not, you’re out of luck. No software fix will help.

- Model size: Can your model fit in the TEE’s memory limits? If not, you’ll need to quantize or split it. Test accuracy loss early.

- Attestation setup: You need to configure the cryptographic verification system. This isn’t plug-and-play. You’ll need a security engineer who understands TPMs, certificates, and key management.

- Containerization: Convert your model into an encrypted OCI image. Red Hat and Microsoft offer tools for this. AWS requires custom scripting.

- Testing: Run both security and performance tests. Use tools like Open Enclave SDK to validate enclave integrity. Measure latency, throughput, and accuracy loss side by side with non-confidential runs.
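For the testing step, a minimal side-by-side harness in Python might look like this. `native_infer` and `confidential_infer` are hypothetical stand-ins for calls to your two deployments, and exact-match accuracy is a placeholder metric; swap in whatever evaluation your task actually uses.

```python
import statistics
import time
from typing import Callable, Sequence


def benchmark(infer: Callable[[str], str], prompts: Sequence[str],
              references: Sequence[str]) -> dict:
    """Time each call and score exact-match accuracy against references."""
    latencies_ms = []
    correct = 0
    for prompt, reference in zip(prompts, references):
        start = time.perf_counter()
        output = infer(prompt)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
        correct += output.strip() == reference
    cuts = statistics.quantiles(latencies_ms, n=100)  # needs >= 2 samples
    return {
        "p50_ms": statistics.median(latencies_ms),
        "p95_ms": cuts[94],
        "accuracy": correct / len(prompts),
    }


def compare(native: Callable[[str], str], confidential: Callable[[str], str],
            prompts: Sequence[str], references: Sequence[str]) -> dict:
    """Run identical inputs through both deployments and report the deltas."""
    base = benchmark(native, prompts, references)
    conf = benchmark(confidential, prompts, references)
    return {
        "p50_overhead_pct": 100.0 * (conf["p50_ms"] / base["p50_ms"] - 1.0),
        "p95_overhead_pct": 100.0 * (conf["p95_ms"] / base["p95_ms"] - 1.0),
        "accuracy_drop_pts": 100.0 * (base["accuracy"] - conf["accuracy"]),
    }


prompts = ["q1", "q2", "q3", "q4", "q5"]
answers = ["a1", "a2", "a3", "a4", "a5"]
lookup = dict(zip(prompts, answers))


def native_infer(prompt: str) -> str:  # hypothetical stand-in
    time.sleep(0.001)
    return lookup[prompt]


def confidential_infer(prompt: str) -> str:  # hypothetical: slower, one miss
    time.sleep(0.01)
    return "wrong" if prompt == "q5" else lookup[prompt]


print(compare(native_infer, confidential_infer, prompts, answers))
```

Running identical inputs through both paths is what makes the deltas meaningful. With real endpoints you’d also want enough requests for the p95 estimate to be stable, and you’d exclude the cold-start attestation call or report it separately.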

Expect 3-6 months of effort for your first deployment, according to Forrester. And plan for 80-120 hours of training for your team. This isn’t a library you pip install. It’s a new infrastructure layer.

The Future: Standardization and Risks

The biggest hurdle today? Fragmentation. Every cloud provider uses different hardware, APIs, and tooling. The Confidential Computing Consortium is working on a universal attestation standard expected in Q2 2026. If it lands, you’ll be able to move your encrypted model from Azure to AWS without rewriting code.

But there’s a shadow. Side-channel attacks are getting smarter. Researchers have demonstrated 12 new ways to extract data from TEEs in the past 18 months. These aren’t theoretical. They’re real exploits. Hardware vendors are patching them, but it’s a cat-and-mouse game.

Some experts warn that we’re over-relying on hardware. Dr. Reetuparna Das from the University of Michigan says fully homomorphic encryption (FHE), which lets you compute on encrypted data without ever decrypting it, is the end goal. But FHE is 1000x slower than TEEs, so it isn’t viable for LLMs yet.

The consensus? Hybrid approaches are the future: TEEs for speed and isolation, plus lightweight cryptographic tricks like SPD and PO to block side channels.

By 2028, analysts predict confidential AI will be as standard as encryption at rest. The question isn’t if you’ll need it; it’s when you’ll be forced to adopt it. If you’re handling sensitive data and using LLMs, you’re already behind.

What’s the difference between confidential computing and regular encryption?

Regular encryption protects data at rest (on disk) and in transit (over networks). Confidential computing protects data in use, while it’s being processed. It uses hardware-based Trusted Execution Environments (TEEs) to create isolated memory zones where data is decrypted only inside a secure vault, invisible to the OS, the cloud provider, and even administrators.

Can I use confidential computing with any LLM model?

Technically yes, but in practice, not always. Your model must fit within the memory limits of the TEE (4GB-32GB on the AWS and Azure offerings described above, and up to 224GB on Google Cloud). Large models like Llama 3-70B may need to be quantized or split across multiple enclaves, which can reduce accuracy. Always test performance and accuracy loss before deploying.

Do I need special hardware to use confidential computing?

Yes. You need specific processors: Intel Xeon SP 4th Gen or newer (Sapphire Rapids), AMD EPYC 3rd Gen (Milan) or newer, or NVIDIA Hopper/Blackwell GPUs. Older hardware doesn’t support the required Trusted Execution Environments. Software alone can’t enable it.
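On Linux, a quick first-pass check is to look at the CPU flags the kernel reports. This sketch assumes a recent kernel that exposes `sev_snp` (AMD SEV-SNP) and `tdx_guest` (running inside an Intel TDX guest) in `/proc/cpuinfo`. A missing flag doesn’t prove the hardware lacks support, since what’s reported depends on the kernel, firmware settings, and whether you’re on the host or in a guest. GPU confidential computing isn’t visible here at all, so check that with the GPU vendor’s own tooling.

```python
from pathlib import Path

# Linux /proc/cpuinfo flag names (assumption: a kernel recent enough to
# report them). Absence of a flag is not proof of missing hardware support.
TEE_FLAGS = {
    "sev_snp": "AMD SEV-SNP",
    "tdx_guest": "Intel TDX guest",
}


def detect_tee_flags(cpuinfo_text: str) -> dict[str, str]:
    """Return the TEE-related flags found in /proc/cpuinfo-style text."""
    flags: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":  # ignores e.g. "vmx flags"
            flags.update(value.split())
    return {name: desc for name, desc in TEE_FLAGS.items() if name in flags}


def detect_local() -> dict[str, str]:
    cpuinfo = Path("/proc/cpuinfo")
    return detect_tee_flags(cpuinfo.read_text()) if cpuinfo.exists() else {}


print(detect_local() or "no TEE flags found (check vendor tooling before ruling it out)")
```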

Is confidential computing secure against hackers?

It’s the most secure option available today for running LLMs on untrusted infrastructure, but it’s not perfect. Side-channel attacks, such as analyzing power usage or memory timing, have been used to extract data from TEEs. Vendors patch these as they’re found, but new attacks emerge regularly. For maximum security, combine TEEs with cryptographic techniques like Prompt Obfuscation.

Why is Microsoft leading in market share for confidential AI?

Microsoft leads because Azure Confidential Inferencing is deeply integrated with Azure Machine Learning, making it easy to deploy and manage encrypted models without rewriting pipelines. They also offer higher memory limits and better GPU support than AWS Nitro Enclaves. Their tooling, documentation, and developer experience are more mature for enterprise AI teams.

What’s the biggest challenge when deploying confidential LLMs?

The biggest challenge is the combination of hardware limitations, complex attestation workflows, and lack of clear documentation. Most teams spend 3-6 months just getting the first model running. Memory caps force model quantization, which hurts accuracy. Debugging inside isolated enclaves is slow and frustrating. And finding engineers who know both AI and TEEs is rare.

- Jan, 21 2026

- Collin Pace

- Tags:

- confidential computing

- LLM inference

- privacy-preserving AI

- Trusted Execution Environment

- secure AI
