Cost Management for Large Language Models: Pricing Models and Token Budgets

Managing the cost of running large language models (LLMs) isn’t just a technical challenge; it’s a business imperative. A single poorly designed chatbot flow can burn through $500 in a week. A marketing team using GPT-4 to generate product descriptions without limits? That could cost $2,000 a month before anyone notices. The good news? You don’t need to cut features or slow down innovation. You just need to understand how LLMs charge, how to measure usage, and how to build guardrails that keep spending under control.

How LLMs Charge: The Token System Explained

Every time you ask an LLM a question, it doesn’t charge you for the whole sentence. It charges by tokens. A token is a chunk of text, usually a word, part of a word, or punctuation. In English, 1,000 tokens is roughly 750 words. That means a 100-word prompt and a 300-word response (400 words) come to roughly 530 tokens in total.

Providers like OpenAI, Anthropic, and Google don’t charge by the minute or by the request. They charge per token, split into input (what you send) and output (what the model returns). Here’s what it looks like in early 2026:

- GPT-4 Turbo: $10 per million input tokens, $30 per million output tokens

- Claude 3 Opus: $15 per million input, $75 per million output

- Gemini 1.5 Pro: $7 per million input, $21 per million output

Notice something? In this list, output costs 3-5x more than input. That’s because generating text takes more computing power than reading it. If your app writes long summaries, responses, or code, output is where your bill spikes.
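To make the arithmetic concrete, here is a minimal Python sketch that estimates the cost of one request from the per-million-token prices listed above. The `PRICES` table and the `request_cost` function are illustrative names, not part of any provider SDK, and the dictionary keys are labels chosen for this example rather than official model IDs.

```python
# Prices in USD per million tokens, copied from the list above.
# Keys are illustrative labels, not official model identifiers.
PRICES = {
    "gpt-4-turbo": {"input": 10.00, "output": 30.00},
    "claude-3-opus": {"input": 15.00, "output": 75.00},
    "gemini-1.5-pro": {"input": 7.00, "output": 21.00},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD of a single request."""
    price = PRICES[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


if __name__ == "__main__":
    # Same request size on each model: 1,000 tokens in, 1,000 tokens out.
    for name in PRICES:
        print(f"{name}: ${request_cost(name, 1_000, 1_000):.4f}")
```

Running the same token counts through each entry is a quick way to see how much of a bill comes from output rather than input.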

And it’s not just about the input/output split. GPT-3.5 Turbo might cost $0.50 per million input tokens. But if you switch to Claude 3 Opus for better reasoning? That jumps to $15 per million input tokens. The model you pick isn’t just about quality; it’s about cost.

Choosing the Right Model for Your Use Case

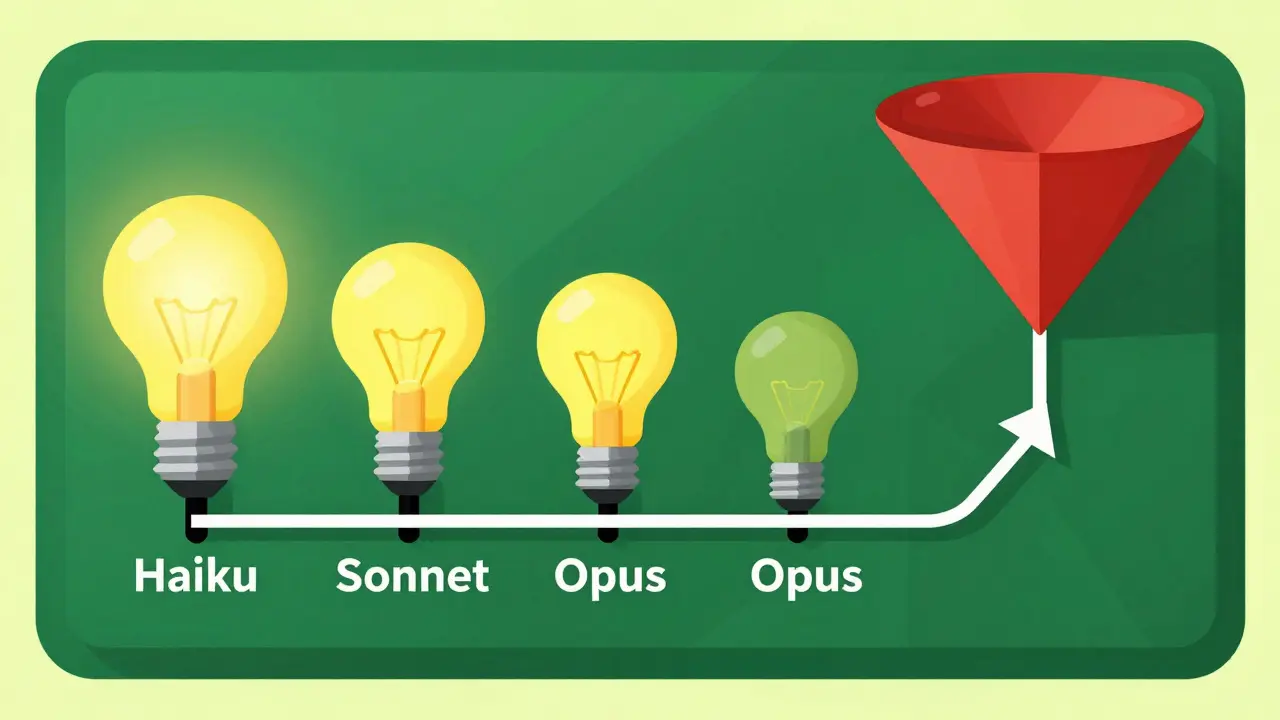

Not every task needs a premium model. Think of LLMs like cars: you don’t need a sports car to drive to the grocery store.

Here’s how most successful teams break it down:

- Entry-level (GPT-3.5 Turbo, Claude Haiku, Gemini 1.0): $0.50-$2.00 per million tokens. Perfect for simple chatbots, classification, spam filtering, or summarizing short text.

- Mid-tier (GPT-4, Claude Sonnet, Gemini 1.5): $3-$15 per million input tokens. Good for complex Q&A, content generation, and moderate reasoning.

- Premium (GPT-4 Turbo, Claude Opus, Gemini 1.5 Pro): $7-$15 per million input tokens. Only use for high-stakes tasks like legal analysis, medical summaries, or financial forecasting.

One Shopify merchant reduced their customer service costs by 75% by routing 90% of queries to Mixtral 8x7B (a cost-efficient open model) and only sending the hardest 10% to GPT-4. They kept 98% accuracy and cut their monthly bill from $4,200 to $1,050.

Don’t just pick the "best" model. Pick the right model for the job. Use benchmarks. Test outputs side by side. If Haiku gives you 90% of what Opus gives you at 1/10th the cost, stick with Haiku.
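The routing idea can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the model labels are placeholders, and `estimate_difficulty` is a toy heuristic standing in for whatever classifier or confidence signal a real system would use.

```python
from typing import Callable

CHEAP_MODEL = "cheap-model"      # placeholder label for an entry-level model
PREMIUM_MODEL = "premium-model"  # placeholder label for a premium model


def estimate_difficulty(query: str) -> float:
    """Toy heuristic returning a score in [0, 1].

    Long queries and queries with several questions score higher.
    A real router would likely use a trained classifier instead.
    """
    length_score = min(len(query.split()) / 200, 1.0)
    question_score = min(query.count("?") / 3, 1.0)
    return max(length_score, question_score)


def pick_model(query: str, threshold: float = 0.5,
               scorer: Callable[[str], float] = estimate_difficulty) -> str:
    """Send hard queries to the premium model and everything else to the cheap one."""
    return PREMIUM_MODEL if scorer(query) >= threshold else CHEAP_MODEL


if __name__ == "__main__":
    print(pick_model("Where is my order?"))
    print(pick_model("Why was I charged twice? Can I get a refund? Who approves it?"))
```

Tuning `threshold` against side-by-side test outputs is how you would decide what share of traffic the cheaper model can absorb.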

Token Budgets: The Key to Predictable Spending

Without limits, LLM usage grows like a weed. A team starts with 100 requests a day. Then 500. Then 5,000. Before you know it, your AI bill doubles overnight.

Token budgets fix that. They’re like monthly data plans for your AI.

Set hard limits per user, per feature, or per day. For example:

- Customer support bot: max 5,000 tokens per conversation

- Marketing content generator: max 20,000 tokens per day

- Internal research tool: max 100,000 tokens per user per week

When a request hits the limit, the system either:

- Truncates the output (and logs it)

- Falls back to a cheaper model

- Sends a polite message: "This request is too long. Try being more specific."

Companies using token budgets report 30-50% lower costs without sacrificing quality. Why? Because people start writing better prompts. They learn to be concise. They stop asking for 10-page reports when a bullet list will do.
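A budget like the ones above can be enforced with very little code. The sketch below is one possible shape, not a reference implementation: `TokenBudget` and `plan_request` are hypothetical names, and the three outcomes (allow, truncate, reject) mirror the fallback options listed earlier.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class TokenBudget:
    """Tracks token usage against a hard limit (per conversation, day, or user)."""
    limit: int
    used: int = 0

    def remaining(self) -> int:
        return max(self.limit - self.used, 0)

    def spend(self, tokens: int) -> None:
        """Record tokens that were actually consumed."""
        if tokens < 0:
            raise ValueError("tokens must be non-negative")
        self.used += tokens


def plan_request(budget: TokenBudget, requested_tokens: int) -> Tuple[str, int]:
    """Decide what to do with a request and how many tokens it may use."""
    remaining = budget.remaining()
    if remaining == 0:
        return ("reject", 0)             # send the polite "too long" message
    if requested_tokens > remaining:
        return ("truncate", remaining)   # cap the output and log it
    return ("allow", requested_tokens)


if __name__ == "__main__":
    budget = TokenBudget(limit=5_000)    # e.g. one support conversation
    print(plan_request(budget, 3_000))
    budget.spend(3_000)
    print(plan_request(budget, 3_000))
```

The "truncate" branch is also the natural place to switch to a cheaper model instead of cutting the response short.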

Cost-Saving Tactics That Actually Work

There are dozens of tricks to cut LLM costs. Here are the ones that deliver real results:

- Use caching. If 100 users ask "What’s your return policy?", don’t run the prompt 100 times. Store the answer and reuse it. One healthcare startup cut costs by 47% just by caching common queries with 63% hit rates.

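- Lower the temperature. Setting temperature to 0.2-0.5 reduces randomness, which tends to keep responses focused and can shorten them. Temperature does not cap length on its own, so pair it with a maximum output token setting. Shorter responses = fewer tokens = lower cost. Moveworks found this cut costs by 15-20% with no quality drop.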

- Use model cascading. Route simple requests to cheap models, complex ones to expensive ones. Ben Zhao of GetMonetizely says 65-75% of enterprise LLM traffic can go to cheaper models without losing user satisfaction.

- Optimize prompts. Avoid fluff. Don’t say "Please generate a detailed, well-structured, professional response..." Just say "Summarize this in 3 bullet points."

- Schedule non-urgent tasks. Many cloud providers offer off-peak discounts (10-15% off). Run data analysis or report generation overnight.
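The caching tactic is the easiest of these to prototype. Below is a minimal in-memory sketch, assuming exact-match caching after light normalization; `ResponseCache` is a hypothetical name, and the `generate` callable stands in for whatever function actually calls your model.

```python
import hashlib
from typing import Callable, Dict


class ResponseCache:
    """Reuses answers for prompts that match after normalization."""

    def __init__(self, generate: Callable[[str], str]) -> None:
        self._generate = generate          # the expensive model call
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str) -> str:
        # Lowercase and collapse whitespace so trivial variations share a key.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def ask(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        answer = self._generate(prompt)
        self._store[key] = answer
        return answer

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

A production version would add expiry and a shared store, and might match on meaning rather than exact text, but even this form shows how to measure a hit rate before investing further.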

And don’t forget: token counters. Install a tool that shows you how many tokens each request uses. If you can’t see it, you can’t control it. Most developers use libraries like tiktoken (for OpenAI) or built-in SDKs that return token counts automatically.
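As a rough sketch of what a token counter looks like, the function below uses tiktoken when it is installed and usable, and otherwise falls back to the rule of thumb from earlier in this article (about 750 words per 1,000 tokens). The choice of the `cl100k_base` encoding is an assumption; check which encoding matches the model you actually call.

```python
def count_tokens(text: str) -> int:
    """Count tokens with tiktoken if possible, else estimate from the word count."""
    try:
        import tiktoken  # third-party: pip install tiktoken

        # Assumption: cl100k_base is the right encoding for your model.
        encoding = tiktoken.get_encoding("cl100k_base")
        return len(encoding.encode(text))
    except Exception:
        # Fallback when tiktoken is missing or its encoding data is unavailable:
        # roughly 750 words per 1,000 tokens.
        words = len(text.split())
        return round(words / 0.75)


if __name__ == "__main__":
    verbose = "Please generate a detailed, well-structured, professional response."
    concise = "Summarize this in 3 bullet points."
    print(count_tokens(verbose), count_tokens(concise))
```

The fallback is only an estimate, so treat its numbers as a planning aid and rely on the counts your provider returns for billing.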

When to Use Subscription or Per-Action Pricing

Token-based pricing works great for variable usage. But if you’re doing the same thing over and over, other models make more sense.

Subscription plans (offered by Anthropic, OpenAI, and others) give you a fixed monthly token allowance. For example:

- Anthropic Team plan: $25/month for 1 million tokens

- OpenAI Enterprise: $50,000/month for 2 billion tokens

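These are great for teams with steady usage, but check the math first. At $15 per million tokens, 800,000 tokens a month costs about $12 on pay-per-token billing, so a $25 plan only wins once your usage fees would exceed the subscription price.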
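A quick way to compare a flat plan against pay-per-token billing is to compute both costs for your own volume. This is a minimal sketch with hypothetical function names; the $15 per million rate and $25 plan price are the figures used in this article, and your real blended rate will depend on your input/output mix.

```python
def pay_per_token_cost(tokens: int, price_per_million: float) -> float:
    """Monthly cost in USD when billed per token."""
    return tokens * price_per_million / 1_000_000


def break_even_tokens(subscription_price: float, price_per_million: float) -> float:
    """Monthly token volume at which per-token billing equals the flat fee."""
    return subscription_price / price_per_million * 1_000_000


def cheaper_option(tokens: int, price_per_million: float,
                   subscription_price: float) -> str:
    usage_cost = pay_per_token_cost(tokens, price_per_million)
    return "subscription" if subscription_price < usage_cost else "pay-per-token"


if __name__ == "__main__":
    print(pay_per_token_cost(800_000, 15.0))
    print(round(break_even_tokens(25.0, 15.0)))
    print(cheaper_option(800_000, 15.0, 25.0))
```

Remember to compare the break-even volume with the plan’s token allowance; a plan cannot pay off if the break-even point sits above what it includes.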

Per-action pricing is for tasks with clear, repeatable outcomes. Harvey AI charges $15 per legal contract reviewed. BloombergGPT charges 3.2x more for financial analysis because it’s trained on market data. These models simplify budgeting for non-technical teams. But they usually cost 20-35% more than token-based equivalents. Use them only when the value is clear and the task is standardized.

What No One Tells You About Hidden Costs

Most people focus on the API cost. But the real killers are hidden.

- Prompt inflation. Poorly written prompts use 30-50% more tokens than needed. "Explain like I’m five" adds 20 tokens. "Summarize in one sentence" adds 5.

- Recursive loops. If your AI calls itself repeatedly (e.g., "summarize, then check if it’s accurate, then rewrite"), you’re running 5-10 times more tokens than you think. 31% of enterprise cost spikes come from this.

- Verbose errors. When a model fails, it often returns long error messages. These count as output tokens. One company found errors added 18% to their bill. Fix it by trimming error responses in code.

- Unused models. If you’re paying for GPT-4 but only using it 5% of the time, you’re wasting money. Audit your usage weekly.
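Recursive loops are the easiest of these hidden costs to guard against in code. The sketch below caps a summarize-check-rewrite cycle at a fixed number of rounds; `refine_with_cap` and its callback parameters are hypothetical names, with `improve` standing in for a paid model call.

```python
from typing import Callable, Tuple


def refine_with_cap(draft: str,
                    improve: Callable[[str], str],
                    is_good_enough: Callable[[str], bool],
                    max_rounds: int = 3) -> Tuple[str, int]:
    """Improve `draft` until it passes the check or the round cap is reached.

    Returns the final text and the number of improve() calls made.
    """
    rounds = 0
    while rounds < max_rounds and not is_good_enough(draft):
        draft = improve(draft)  # each call here is one billed request
        rounds += 1
    return draft, rounds


if __name__ == "__main__":
    # Stand-in callbacks: the check never passes, so the cap ends the loop.
    text, used = refine_with_cap("draft", lambda d: d + "!", lambda d: False)
    print(text, used)
```

Logging the returned round count per request makes it obvious when a workflow is hitting the cap more often than expected.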

Set up alerts. If your daily token usage jumps 50% from normal, get an email. Use tools like AWS LLM Cost Optimizer or Azure Cost Management for AI. They track usage in real time and flag anomalies.
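If you want a simple check before adopting a dedicated tool, the 50% rule can be expressed directly. This is a sketch under the assumption that "normal" means the average of recent daily totals; `is_usage_spike` is a hypothetical helper, and sending the email is left to whatever alerting you already use.

```python
from statistics import mean
from typing import Sequence


def is_usage_spike(history: Sequence[int], today: int,
                   threshold: float = 0.5) -> bool:
    """Return True if today's tokens exceed the recent average by `threshold` or more.

    `history` holds daily token totals for recent days; 0.5 means a 50% jump.
    """
    if not history:
        return False  # no baseline yet, so nothing to compare against
    baseline = mean(history)
    return today >= baseline * (1 + threshold)


if __name__ == "__main__":
    last_week = [100_000] * 7
    print(is_usage_spike(last_week, 140_000))
    print(is_usage_spike(last_week, 160_000))
```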

What’s Next: The Future of LLM Pricing

Token costs have dropped 70% in the last year. That’s good news. But experts warn: cost-per-token isn’t enough.

Dr. Andrew Ng says the real metric is cost-per-value. A $0.10 prompt that generates a sales email that closes a $5,000 deal? That’s a win. A $0.50 prompt that writes a blog post nobody reads? That’s a loss.

By 2027, pricing will shift to three dimensions:

- Computational intensity (how hard the model works)

- Business outcome (revenue generated per request)

- Compliance needs (healthcare and finance models will cost more)

OpenAI already started testing "cost-per-quality" metrics. Anthropic offers SLA-backed pricing: pay $0.0025 extra per token for 99.99% uptime.

The goal isn’t to spend less. It’s to spend smarter.

Getting Started: Your 3-Step Plan

Don’t overcomplicate it. Here’s how to start controlling LLM costs today:

- Measure. Use your provider’s API to log tokens per request for one week. Find your top 3 cost drivers.

- Limit. Set token caps on every feature. Start with 5,000-10,000 tokens per interaction. Adjust based on output quality.

- Optimize. Replace one expensive model with a cheaper one. Add caching. Trim your prompts. Test. Repeat.
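The Measure step can be sketched as a small aggregation over a week of logged requests. The record fields (`feature`, `input_tokens`, `output_tokens`) are assumptions about how you might log usage, and the prices are passed in per million tokens so you can plug in your own rates.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def top_cost_drivers(records: Iterable[Dict],
                     input_price: float,
                     output_price: float,
                     n: int = 3) -> List[Tuple[str, float]]:
    """Return the `n` features with the highest total cost in USD.

    Each record is a dict with 'feature', 'input_tokens', and 'output_tokens'.
    Prices are in USD per million tokens.
    """
    totals: Dict[str, float] = defaultdict(float)
    for record in records:
        cost = (record["input_tokens"] * input_price
                + record["output_tokens"] * output_price) / 1_000_000
        totals[record["feature"]] += cost
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)[:n]


if __name__ == "__main__":
    log = [
        {"feature": "support-bot", "input_tokens": 1_000, "output_tokens": 2_000},
        {"feature": "marketing", "input_tokens": 500, "output_tokens": 10_000},
        {"feature": "research", "input_tokens": 20_000, "output_tokens": 1_000},
    ]
    for feature, cost in top_cost_drivers(log, input_price=10.0, output_price=30.0):
        print(f"{feature}: ${cost:.4f}")
```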

Within 30 days, you’ll likely cut your LLM bill by 25-40%. In 90 days, you’ll have a system that scales without surprises.

LLMs aren’t free. But they don’t have to be a budget killer either. With the right controls, they become a predictable, powerful tool.

How much does GPT-4 cost per 1,000 tokens?

GPT-4 Turbo costs $0.01 per 1,000 input tokens and $0.03 per 1,000 output tokens as of December 2025. A typical 200-word prompt is about 270 tokens and a 300-word response is about 400 tokens, so the request costs roughly $0.003 for input plus $0.012 for output, or about $0.015 in total. If the model instead generates a 1,000-word output (about 1,330 tokens), the output cost rises to about $0.04, for a total of roughly $0.043.

Are open-source LLMs cheaper than commercial ones?

Yes, often by 30-50%. Models like Mixtral 8x7B or Llama 3 can run on your own servers or rented cloud instances using tools like vLLM. You pay for compute (e.g., $0.0005 per second on a GPU), not per token. For high-volume use cases, this can be far cheaper than paying $10-$15 per million tokens to OpenAI. But you need engineering resources to deploy and maintain them.
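To compare self-hosting with per-token pricing, convert the GPU rate into an effective cost per million tokens. The sketch below assumes you know your sustained throughput in tokens per second; the 500 tokens per second used in the example is a made-up figure for illustration, not a benchmark, and the function name is hypothetical.

```python
def self_hosted_cost_per_million(gpu_price_per_second: float,
                                 tokens_per_second: float) -> float:
    """Effective USD cost per million tokens for a self-hosted model.

    Assumes the GPU is fully utilized; idle time raises the real cost.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return gpu_price_per_second / tokens_per_second * 1_000_000


if __name__ == "__main__":
    # $0.0005 per second is the GPU rate quoted above;
    # 500 tokens/second is a hypothetical throughput.
    print(f"${self_hosted_cost_per_million(0.0005, 500):.2f} per million tokens")
```

Because the result scales inversely with throughput, measuring your actual tokens per second under realistic load matters more than the GPU's list price.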

What’s the difference between input and output tokens?

Input tokens are the words you send to the model: your prompt, instructions, or context. Output tokens are the words the model generates in response. Output costs more because generating text requires more computation than reading it. At a 3:1 output-to-input price ratio, a 100-word prompt (input) and a 500-word answer (output) will cost about 4x as much as a 100-word prompt and a 100-word answer.

Can I use AI without spending money?

You can, but with limits. Free tiers from OpenAI, Anthropic, and Google offer 1,000-10,000 tokens per month, enough for testing or light use. For production apps, you’ll need paid plans. Open-source models like Llama 3 can be run locally on consumer hardware, but they require technical setup and may not match commercial model quality for complex tasks.

Why is my LLM bill spiking suddenly?

Common causes: 1) A new feature that generates long responses, 2) A loop where the AI calls itself repeatedly, 3) Poorly written prompts that use extra tokens, 4) Error messages adding to output, or 5) Someone on your team testing without limits. Check your usage logs. Set up alerts for 80% and 95% of your monthly budget to catch spikes early.
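The 80% and 95% budget alerts can be sketched as a small threshold check. `budget_alerts` is a hypothetical helper; how you obtain month-to-date spend depends on your provider's usage reporting.

```python
from typing import List, Sequence


def budget_alerts(spent: float, monthly_budget: float,
                  thresholds: Sequence[float] = (0.80, 0.95)) -> List[float]:
    """Return the thresholds (as fractions of budget) that spend has reached."""
    if monthly_budget <= 0:
        raise ValueError("monthly_budget must be positive")
    ratio = spent / monthly_budget
    return [t for t in thresholds if ratio >= t]


if __name__ == "__main__":
    for spent in (500, 800, 960):
        reached = budget_alerts(spent, monthly_budget=1_000)
        print(spent, [f"{t:.0%}" for t in reached])
```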

Should I use a subscription plan or pay per token?

A subscription plan saves money only when your pay-per-token bill would otherwise exceed the plan price. For example, Anthropic’s $25 plan gives you 1 million tokens, which works out to $25 per million; if your usage fees are only $5-$10 per month, pay-per-token is cheaper. If usage is unpredictable or low (under 200,000 tokens/month), pay-per-token is also the better fit. Track your usage for a month first, then compare the two totals.

Do pricing models differ between cloud providers?

Yes. OpenAI charges per token with clear input/output splits. Anthropic offers monthly subscriptions and SLA-based pricing. Google’s Gemini pricing is simpler but less granular. AWS and Azure include LLM pricing in their broader AI cost tools, which helps if you’re already using their cloud. Compare total cost, not just per-token rates. Include things like caching support, API reliability, and monitoring tools.

How do I train my team to use LLMs more cost-effectively?

Start with a simple rule: "Shorter prompts = cheaper responses." Teach them to be specific. Use templates: "Summarize this in 3 bullet points." Avoid "Please write a detailed, professional, well-structured..."; that adds 20+ unnecessary tokens. Show them token counters. Reward teams that cut costs without losing quality. Make cost awareness part of your culture, not just IT’s problem.

Next steps: Audit your top three LLM use cases. Check your current token usage. Set a budget. Pick one optimization tactic to test this week. You’ll be surprised how quickly small changes add up.

- Jan 23, 2026

- Collin Pace
