Human Oversight in Generative AI: Review Workflows and Escalation Policies

Generative AI can write emails, draft reports, and even design marketing content in seconds. But if it gets something wrong, such as inventing a customer quote or misrepresenting financial data, the cost can be huge. That’s why human oversight isn’t just a safety net. It’s the backbone of any responsible AI system. Without it, even the most advanced models become risky, unreliable, and legally dangerous.

Why Human Oversight Isn’t Optional

Companies that treat human oversight as an afterthought end up paying for it. A bank used an AI to auto-generate loan eligibility summaries. The model learned from biased historical data and started rejecting applicants from certain zip codes. No one caught it because no human was reviewing the outputs. By the time regulators stepped in, the bank faced a $2.3 million fine and a public trust crisis.

This isn’t rare. According to BCG, organizations that skip structured human oversight fail to deliver real value from generative AI. Why? Because AI doesn’t understand context, ethics, or nuance. It predicts patterns. Humans interpret them.

Human oversight fixes three core problems:

- Accuracy: AI hallucinates. A human catches it.

- Compliance: The EU AI Act requires human oversight for high-risk uses, and GDPR restricts decisions based solely on automated processing.

- Trust: Customers and regulators need to know someone is watching.

The Four Stages of a Review Workflow

A good review workflow doesn’t just react. It prevents problems before they happen. It’s built in four stages, each with clear human responsibilities.

1. Input Validation

Before the AI even starts, someone checks the data going in. “Garbage in, garbage out” isn’t just a saying. It’s a legal risk. If your AI is trained on outdated customer records or unverified third-party data, it will generate misleading outputs.

Example: A healthcare provider uses AI to summarize patient notes. Before processing, a data analyst checks that all personal identifiers are removed and that the source data is from approved electronic health record systems. If not, the input is blocked. This stage stops 30-40% of errors before they reach the AI.

2. Processing Oversight

Real-time dashboards show how the AI is behaving as it works. Are prompts being repeated? Is it shifting tone? Is it ignoring key constraints? One SaaS company noticed their AI was rewriting customer support responses to sound overly cheerful, even when the customer was angry. A simple dashboard alert flagged the pattern. The team adjusted the prompt guardrails within hours.

This isn’t about micromanaging. It’s about spotting drift. If the AI starts deviating from its intended purpose, humans need to see it fast.

3. Output Review

This is where most organizations focus, and where most fail. Simply having someone click “approve” on every output is useless. That’s rubber-stamping, not oversight. Effective output review means:

- Checking for factual accuracy

- Ensuring tone matches brand voice

- Verifying compliance with legal standards

- Flagging potentially harmful or biased language
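One way to make rubber-stamping harder is to require an explicit answer for each of the four checks above before an output can be approved. The sketch below is illustrative only: the check names, the `OutputReview` class, and the rule that a failed check needs a note are all assumptions, not part of any particular review tool.

```python
from dataclasses import dataclass, field

# Hypothetical check names, one per bullet in the list above.
CHECKS = (
    "facts_verified",
    "tone_matches_brand",
    "legally_compliant",
    "free_of_harmful_or_biased_language",
)


@dataclass
class OutputReview:
    reviewer: str
    results: dict = field(default_factory=dict)  # check name -> True (pass) / False (fail)
    notes: dict = field(default_factory=dict)    # check name -> reviewer's explanation

    def record(self, check: str, passed: bool, note: str = "") -> None:
        """Store the reviewer's answer for one check."""
        if check not in CHECKS:
            raise ValueError(f"unknown check: {check}")
        # Assumed rule: a failure must say what was wrong, so it can feed later fixes.
        if not passed and not note.strip():
            raise ValueError("a failed check needs a note explaining why")
        self.results[check] = passed
        self.notes[check] = note

    def decision(self) -> str:
        """'incomplete' until every check is answered, then 'approved' or 'rejected'."""
        if any(check not in self.results for check in CHECKS):
            return "incomplete"
        return "approved" if all(self.results.values()) else "rejected"
```

With this shape there is no single “approve” action: a reviewer who answers only some checks leaves the review in the `incomplete` state, and any recorded failure carries a reason that can be logged.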

4. Feedback Integration

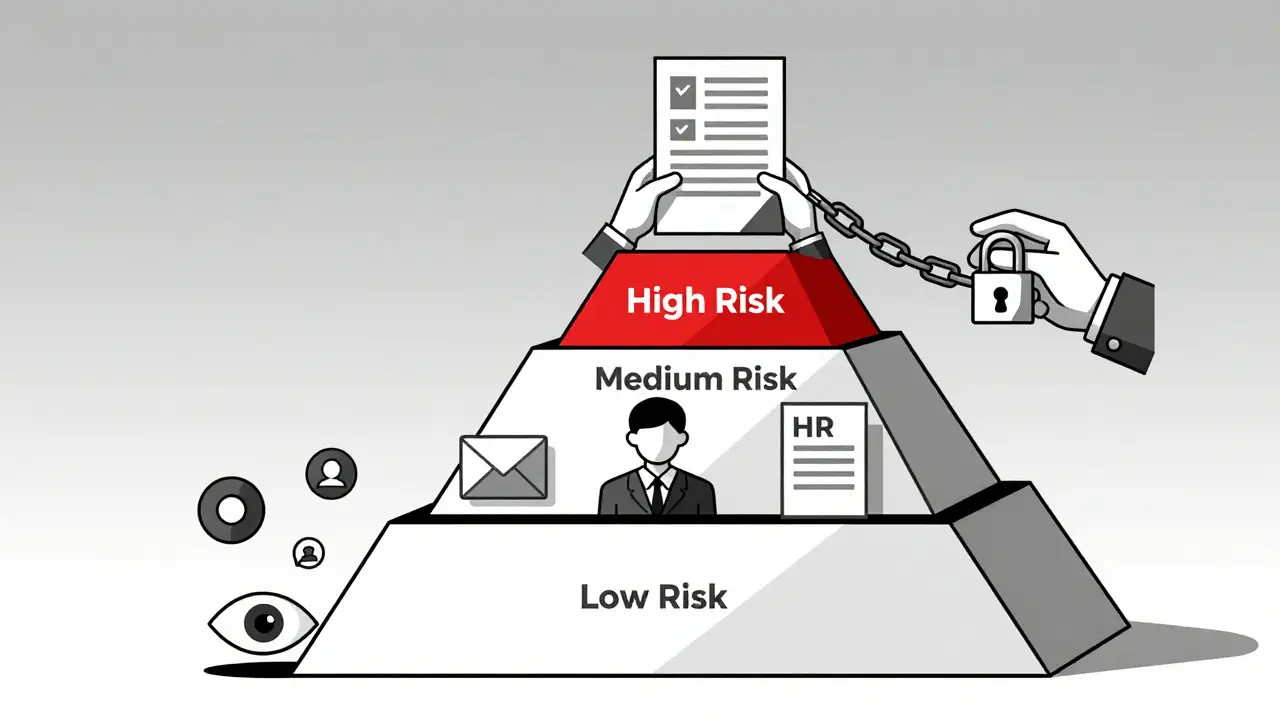

The best systems don’t just review. They learn. Every flagged output, every corrected draft, every user complaint becomes data. A retail company started logging why humans changed AI-generated product descriptions. After three months, they found that 62% of edits were fixing vague claims like “best on the market.” They updated the AI’s training data to require specific metrics (“20% faster than competitors”) and cut review time by half. Feedback loops turn oversight from a chore into a growth engine.

Escalation Policies: Not All Outputs Need the Same Level of Review

Trying to review every single output is a recipe for burnout and wasted time. Instead, escalate based on risk. BCG calls this risk-differentiated oversight. Here’s how it works:

| Risk Tier | Examples | Review Level |
|---|---|---|
| Low | Internal meeting notes, draft blog outlines, social media captions | Random 10% sampling |
| Medium | Customer emails, product summaries, HR policy drafts | 100% review by trained reviewer |
| High | Financial reports, legal documents, public press releases, medical summaries | 100% review + second approval + audit trail |
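As a rough illustration, the table can be expressed as a small routing function that decides what each output needs. The names `RiskTier`, `ReviewPlan`, and `plan_review` are hypothetical, and how an output gets assigned to a tier in the first place is left out of this sketch.

```python
import random
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass(frozen=True)
class ReviewPlan:
    needs_review: bool
    needs_second_approval: bool
    needs_audit_trail: bool


LOW_RISK_SAMPLE_RATE = 0.10  # "Random 10% sampling" from the table


def plan_review(tier: RiskTier, rng: Optional[random.Random] = None) -> ReviewPlan:
    """Map a risk tier to the review steps listed in the table."""
    if rng is None:
        rng = random.Random()
    if tier is RiskTier.HIGH:
        # 100% review + second approval + audit trail
        return ReviewPlan(True, True, True)
    if tier is RiskTier.MEDIUM:
        # 100% review by a trained reviewer
        return ReviewPlan(True, False, False)
    # Low risk: review roughly one output in ten, chosen at random.
    return ReviewPlan(rng.random() < LOW_RISK_SAMPLE_RATE, False, False)
```

Passing a seeded `random.Random` makes the low-risk sampling reproducible, which helps when someone later asks why a given output was or was not reviewed.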

Who Does What? Team Roles in Oversight

Oversight isn’t one person’s job. It’s a team sport.

- AI Quality Auditors: Review outputs, track error trends, update checklists.

- Compliance Officers: Ensure outputs meet legal standards (GDPR, HIPAA, etc.).

- Content Editors: Maintain brand voice and clarity.

- AI Developers: Adjust models based on feedback, fix training data gaps.

Audit Trails: The Paper Trail That Protects You

If regulators ask, “How did you ensure this AI didn’t discriminate?”, can you answer? An audit trail answers that. It records:

- When an output was reviewed

- Who reviewed it

- What changes were made

- Why those changes were necessary

- Which version of the AI model was used

- Which training data was active
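The six items above map naturally onto a single record per review. A minimal sketch, assuming an append-only JSON Lines file as the store (the field names, the file format, and the helper names are illustrative choices, not a prescribed schema):

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    reviewed_at: str            # when the output was reviewed (ISO 8601, UTC)
    reviewer: str               # who reviewed it
    changes: str                # what changes were made
    reason: str                 # why those changes were necessary
    model_version: str          # which version of the AI model was used
    training_data_version: str  # which training data was active


def now_utc() -> str:
    """Current time as an ISO 8601 string in UTC."""
    return datetime.now(timezone.utc).isoformat()


def append_record(path: str, record: AuditRecord) -> None:
    """Append one record as a JSON line; earlier lines are never rewritten."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(record), sort_keys=True) + "\n")
```

Appending rather than updating in place keeps the history intact. A production system would likely add access controls and tamper protection, which this sketch does not attempt.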

Common Pitfalls and How to Avoid Them

Pitfall 1: Automation Bias

Humans start trusting AI too much. They glance at outputs and hit “approve” without reading. This is called automation bias. Solution: Randomly audit reviewers. Give them an output with a clear error. If they miss it, they get trained, not fired. It’s a quality check for the quality checkers.

Pitfall 2: Over-Reviewing

Reviewing every single output kills efficiency. You lose the speed advantage of AI. Solution: Use the risk tiers above. Let low-risk outputs fly. Focus your people where it matters.

Pitfall 3: No Feedback Loop

If you review but never update the AI, you’re just putting out fires. Solution: Turn every review into a data point. Track common edits. Feed them back into training. Make the AI smarter over time.
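Tracking common edits can start very simply. The sketch below assumes each review is logged as a dictionary with an `edited` flag and a short `reason` label (both hypothetical field names) and reports what share of edits each reason accounts for.

```python
from collections import Counter


def edit_reason_shares(review_log):
    """Return (reason, share) pairs, most common first, for outputs that were edited."""
    reasons = Counter(entry["reason"] for entry in review_log if entry.get("edited"))
    total = sum(reasons.values())
    if total == 0:
        return []
    return [(reason, count / total) for reason, count in reasons.most_common()]


# Made-up log entries, for illustration only.
log = [
    {"edited": True, "reason": "vague_claim"},
    {"edited": True, "reason": "vague_claim"},
    {"edited": True, "reason": "tone"},
    {"edited": False, "reason": ""},
]

for reason, share in edit_reason_shares(log):
    print(f"{reason}: {share:.0%}")
```

A reason that dominates the list is a candidate for a prompt, guardrail, or training-data fix rather than yet another manual correction.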

When Oversight Is Non-Negotiable

Some uses of generative AI demand intense oversight. If you’re doing any of this, don’t skip the human layer:

- Financial reporting or investment advice

- Customer service handling complaints or claims

- Medical summaries or treatment recommendations

- Public statements from leadership

- HR decisions involving hiring, promotions, or terminations

Best Practices in a Nutshell

- Start oversight at the design phase, not after deployment.

- Use tools that centralize review (like Magai or similar platforms).

- Define risk tiers and escalate accordingly.

- Train reviewers to question, not just approve.

- Document every change, every decision, every review.

- Include legal, compliance, and end-users in feedback loops.

- Never assume AI is neutral. Always assume it’s biased, and test for it.

Final Thought

Generative AI is powerful. But power without responsibility is dangerous. Human oversight isn’t about slowing things down. It’s about making sure you’re going in the right direction. The companies winning with AI aren’t the ones with the fanciest models. They’re the ones with the clearest review workflows, the strongest escalation policies, and the most engaged human teams. Your AI can write faster. But only you can make sure it’s right.

Do I need human oversight for every AI output?

No. Use risk-based escalation. Low-risk outputs like internal drafts or social media captions can be sampled randomly. High-risk outputs, like financial reports, legal documents, or customer service responses to complaints and claims, require 100% human review and often a second approval. The goal is to balance efficiency with safety.

What if my team doesn’t know how to review AI outputs?

Train them. Start with simple checklists: Does this contain factual errors? Does it match our brand tone? Is it compliant? Use mock reviews with intentional errors to test their skills. Regular feedback sessions help build confidence. Many teams see a 50% improvement in review accuracy within four weeks of structured training.
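Scoring a mock review is straightforward if the intentionally broken outputs are tracked by ID. A minimal sketch, assuming hypothetical helper names and an arbitrary 80% pass threshold that a team would tune for itself:

```python
def catch_rate(seeded_ids, flagged_ids):
    """Fraction of intentionally seeded errors that the reviewer flagged."""
    seeded = set(seeded_ids)
    if not seeded:
        raise ValueError("need at least one seeded error")
    return len(seeded & set(flagged_ids)) / len(seeded)


def needs_training(seeded_ids, flagged_ids, threshold=0.8):
    """True if the reviewer caught fewer seeded errors than the assumed threshold."""
    return catch_rate(seeded_ids, flagged_ids) < threshold


# Four outputs with planted errors; the reviewer flagged two of them plus one clean output.
rate = catch_rate(["a", "b", "c", "d"], ["a", "c", "x"])
print(f"catch rate: {rate:.0%}")
```

Flags on clean outputs are ignored here. Tracking them separately would show whether a reviewer is over-flagging, which matters as well.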

Can AI review its own outputs?

No. AI can flag inconsistencies or match against rules, but it can’t judge context, ethics, or unintended harm. A human must make the final call. Tools that claim to self-audit are reducing workload, not replacing oversight.

How often should we update our oversight policies?

Every 3-6 months. AI models change. Regulations change. User needs change. Schedule quarterly reviews of your workflows. Look at error logs, feedback from reviewers, and new compliance requirements. If you haven’t updated your checklist in six months, you’re probably falling behind.

Is human oversight required by law?

Yes, in many cases. The EU AI Act requires human oversight for high-risk systems like those used in hiring, banking, or healthcare. The U.S. NIST AI Risk Management Framework also recommends it. Even without specific laws, regulators expect organizations to demonstrate accountability, and human oversight is the clearest way to do that.

- Feb, 14 2026

- Collin Pace
