Long-Context AI: How Memory and Persistent State Are Changing AI in 2026

Imagine needing to process a 2-million-word document in seconds. Traditional AI couldn't do it. But in 2026, it's possible. This shift isn't just about bigger numbers; it's a fundamental change in how AI systems remember and use information. Long-Context Generative AI refers to AI systems that process and retain vast amounts of information across interactions, moving beyond traditional token limits.

What Broke the Context Wall?

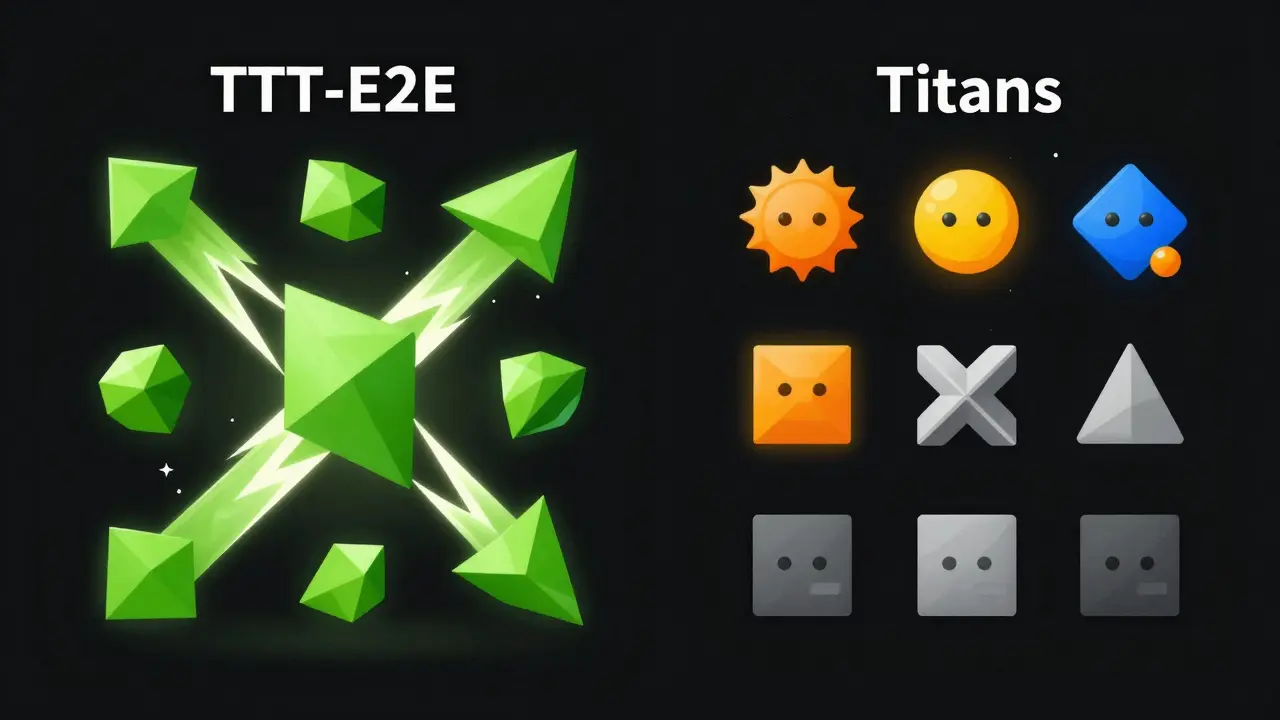

Before 2026, AI hit a hard wall with long contexts. Traditional transformers slowed down as context grew: with full attention, each new token is compared against every token before it, so the cost of processing a token rises in step with its position. Processing the 10-millionth token took a million times longer than the 10th. NVIDIA's February 2026 technical blog explained this clearly: linear growth in per-token computational cost made million-token contexts impractical. That's why enterprises struggled with legal contracts, medical records, or research papers longer than 128K tokens. But breakthroughs in 2026 changed everything. NVIDIA's TTT-E2E and Google's Titans architecture solved the 'context wall' problem, allowing real-time processing of 2 million tokens.
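The million-fold figure follows from simple counting. The sketch below is a back-of-the-envelope model, not a benchmark: it assumes the cost of a full-attention step is proportional to the number of tokens it attends to, and the function names are illustrative only.

```python
def attention_reads(position: int) -> int:
    """Tokens a full-attention step looks at when processing the token
    at `position` (1-indexed): itself plus everything before it."""
    return position


def total_reads(n_tokens: int) -> int:
    """Total reads for a whole document: 1 + 2 + ... + n (closed form)."""
    return n_tokens * (n_tokens + 1) // 2


# Per-token cost grows linearly with position.
per_token_ratio = attention_reads(10_000_000) // attention_reads(10)

# Whole-document cost grows roughly quadratically with length.
doc_ratio = total_reads(2_000_000) / total_reads(128_000)

print(f"10-millionth token vs 10th token: {per_token_ratio:,}x")
print(f"2M-token document vs 128K-token document: about {doc_ratio:.0f}x")
```

Under this model a document about 16 times longer costs roughly 244 times more in total, which is why the wall shows up well before the million-token mark.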

How TTT-E2E and Titans Work

NVIDIA's TTT-E2E treats input as training data during inference. Instead of recalculating attention for every token, it compresses context on the fly. On NVIDIA H100 GPUs, it's 2.7x faster for 128K tokens and 35x faster for 2 million tokens compared to older methods. Google's Titans takes a different approach. It uses a 'surprise' mechanism: low-surprise inputs (like 'dog' in a pet article) get minimal storage, while high-surprise data (like a financial chart in a medical report) triggers permanent storage. This selective memory system, built on a multi-layer perceptron rather than fixed-size vectors, lets Titans handle over 2 million tokens while outperforming GPT-4 on complex reasoning tasks.
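To make "treating input as training data during inference" concrete, here is a toy sketch of the general test-time-training idea. It is not NVIDIA's TTT-E2E: the class name `FastWeightMemory`, the linear model, the learning rate, and the synthetic stream are all assumptions chosen for illustration. The point it shows is that the memory is a fixed-size set of weights updated by one gradient step per input, so the cost per step does not depend on how much has already been seen.

```python
import random


class FastWeightMemory:
    """Fixed-size memory: a weight vector trained online on the stream."""

    def __init__(self, dim: int, lr: float = 0.1):
        self.w = [0.0] * dim
        self.lr = lr

    def predict(self, x):
        return sum(wi * xi for wi, xi in zip(self.w, x))

    def absorb(self, x, y) -> float:
        """One gradient step on squared error; returns the loss before the step."""
        err = self.predict(x) - y
        self.w = [wi - self.lr * err * xi for wi, xi in zip(self.w, x)]
        return err * err


def stream(n: int, seed: int = 0):
    """Synthetic 'context': inputs whose target follows a hidden rule 2*a - b."""
    rng = random.Random(seed)
    for _ in range(n):
        x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
        yield x, 2.0 * x[0] - 1.0 * x[1]


memory = FastWeightMemory(dim=2)
losses = [memory.absorb(x, y) for x, y in stream(2000)]

print("weights after the stream:", [round(w, 3) for w in memory.w])
print("state size:", len(memory.w), "numbers, regardless of stream length")
```

After the stream, the two weights have absorbed the hidden rule even though no past input is stored. A real system replaces the linear model with network layers and the synthetic target with a self-supervised objective on the tokens themselves.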

Real-World Impact Today

Enterprises are already using these systems. Healthcare providers analyze entire patient histories without missing details, and legal teams report large speedups: Reddit user 'ML_Engineer_2025' wrote that 'Titans reduced our document processing latency by 83% for 500K token legal contracts.' But challenges remain. 'DataArchitectPro' on Reddit complained about 'beginning-end bias causing missed middle-section details in 200K token technical documents.' This phenomenon, documented by VirtusLab on February 3, 2026, shows models still struggle to recall middle sections of extremely long contexts. Infrastructure is another hurdle. WEKA's analysis shows new hardware requirements: BlueField-4 DPUs, NVMe storage, and RDMA-connected networks. Without these, the memory system stalls.
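One common way to check for beginning-end bias is to plant a known sentence at different depths of a long document and see whether the system can still retrieve it. The harness below is a minimal sketch of that idea. `edges_only_reader` is a deliberately crude stand-in that only keeps the first and last 20% of a document; it is not a real model, and in practice you would swap in a function that calls the system under test.

```python
from typing import Callable, Dict, List


def build_probe(filler: List[str], needle: str, depth: float) -> List[str]:
    """Insert `needle` at a relative depth (0.0 = start, 1.0 = end)."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    idx = round(depth * len(filler))
    return filler[:idx] + [needle] + filler[idx:]


def recall_by_depth(
    reader: Callable[[List[str]], List[str]],
    filler: List[str],
    needle: str,
    depths: List[float],
) -> Dict[float, bool]:
    """For each depth, report whether the reader still 'sees' the needle."""
    return {d: needle in reader(build_probe(filler, needle, d)) for d in depths}


def edges_only_reader(doc: List[str], keep: float = 0.2) -> List[str]:
    """Stand-in reader (an assumption for the demo): keeps only the edges."""
    k = int(len(doc) * keep)
    return doc[:k] + doc[-k:]


filler = [f"Filler sentence {i}." for i in range(100)]
needle = "The retention period is seven years."
results = recall_by_depth(edges_only_reader, filler, needle, [0.0, 0.5, 1.0])
print(results)
```

With the stand-in reader, the needle is found at the start and end but missed in the middle, which is the pattern the Reddit report describes.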

Why This Matters for Enterprises

67% of organizations now prioritize 'long-context memory capabilities' when selecting AI platforms, up from 22% in Q3 2025. Legal and healthcare lead adoption, at 78% and 65% enterprise usage respectively. This isn't just about handling longer documents; it's about building truly agentic AI systems. G2 Crowd's January 2026 review data shows context management features now influence 42% of purchasing decisions. WEKA's Augmented Memory Grid received 4.7/5 stars from 87 enterprise reviewers, who specifically praised its 'persistent, reusable KV Cache' implementation. The industry is moving from the Prompt Era to the Context Era, as WEKA's CTO Liran Zvibel declared in January 2026.

What's Next in 2026 and Beyond

NVIDIA plans to integrate TTT-E2E into Blackwell Ultra GPUs by late 2026. Google's Titans 2.0, coming in June 2026, will enable cross-agent memory sharing. Forrester predicts context windows will exceed 10 million tokens by 2027. The enterprise AI market is projected to reach $1.2 trillion by 2026, with context management infrastructure representing $276 billion of that. But challenges persist. NVIDIA researchers caution that 'scaling trends require continuous validation beyond 2M tokens,' while Google acknowledges 'surprise mechanism calibration remains sensitive to domain-specific expectations.' Despite this, the consensus is clear: solving long-context memory is the essential foundation for practical agentic AI systems.

Frequently Asked Questions

What is the context wall problem?

The context wall problem refers to the slowdown AI models experience as context length increases. With full attention, the cost of processing each token grows linearly with the number of tokens before it, so the total cost of a long document grows roughly quadratically. Before 2026, processing the 10-millionth token could take a million times longer than the 10th token. This made handling documents beyond 128K tokens impractical for most applications.

How does NVIDIA's TTT-E2E solve this?

TTT-E2E treats input as training data during inference. Instead of recalculating attention for every token, it compresses context on the fly. This maintains constant latency regardless of context length. On NVIDIA H100 GPUs, it processes 128K tokens 2.7x faster and 2 million tokens 35x faster than previous methods.

What's unique about Google's Titans architecture?

Titans uses a 'surprise' mechanism to decide what to store in long-term memory. Low-surprise inputs (like 'dog' in a pet article) get minimal storage, while high-surprise data (like a financial chart in a medical report) triggers permanent storage. This selective approach, combined with a multi-layer perceptron memory module, allows Titans to handle over 2 million tokens while outperforming larger models like GPT-4 on complex reasoning tasks.
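The surprise idea can be illustrated with a toy gate. This is not Google's implementation: the sketch below assumes surprise can be approximated as the negative log-probability of a token under running frequency counts, and the class name and the 3-bit threshold are arbitrary choices for the demo.

```python
import math
from collections import Counter


class SurpriseGatedMemory:
    """Stores a token in long-term memory only when it is unexpected."""

    def __init__(self, threshold_bits: float = 3.0):
        self.counts = Counter()
        self.total = 0
        self.threshold_bits = threshold_bits
        self.long_term = []

    def surprise(self, token: str) -> float:
        """Surprise in bits, using add-one smoothing over seen tokens
        plus one slot reserved for anything unseen."""
        vocab = len(self.counts) + 1
        p = (self.counts[token] + 1) / (self.total + vocab)
        return -math.log2(p)

    def observe(self, token: str) -> bool:
        """Score the token, store it if surprising, then update the counts."""
        stored = self.surprise(token) >= self.threshold_bits
        if stored:
            self.long_term.append(token)
        self.counts[token] += 1
        self.total += 1
        return stored


memory = SurpriseGatedMemory()
for token in ["dog"] * 20 + ["chart"] + ["dog"] * 5:
    memory.observe(token)

print("kept in long-term memory:", memory.long_term)
```

In this run, 25 of the 26 inputs are the expected word and are skipped, while the single out-of-place token crosses the threshold and is kept.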

Why do enterprises care about long-context AI?

67% of organizations now prioritize long-context memory capabilities when selecting AI platforms. Legal and healthcare sectors lead adoption, at 78% and 65% usage respectively. This isn't just about handling longer documents; it's about building truly agentic AI systems that can understand and act on massive amounts of contextual information across interactions.

What infrastructure is needed for long-context AI?

WEKA's analysis shows new hardware requirements: BlueField-4 DPUs for context memory acceleration, minimum 400GB/s interconnect speeds for context sharing, and NVMe storage tiers optimized for KV cache patterns. Without these, the memory system stalls due to slow storage or insufficient GPU memory capacity.
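A rough sizing exercise shows why storage and interconnect speed matter. The sketch below uses the standard KV cache size formula (keys plus values, per layer, per KV head). The model dimensions are hypothetical and do not describe any specific product; only the 400GB/s figure comes from the requirements above.

```python
def kv_cache_bytes(
    tokens: int,
    layers: int = 32,         # hypothetical model depth
    kv_heads: int = 8,        # hypothetical number of key/value heads
    head_dim: int = 128,      # hypothetical dimension per head
    bytes_per_value: int = 2  # 16-bit values
) -> int:
    """Bytes needed to cache keys and values for `tokens` tokens."""
    return 2 * layers * kv_heads * head_dim * bytes_per_value * tokens


def transfer_seconds(n_bytes: int, gigabytes_per_second: float) -> float:
    """Time to move the cache over a link at the given bandwidth."""
    return n_bytes / (gigabytes_per_second * 1e9)


per_token = kv_cache_bytes(1)
full_context = kv_cache_bytes(2_000_000)

print(f"per token: {per_token / 1024:.0f} KiB")
print(f"2M-token context: {full_context / 1e9:.1f} GB")
print(f"time to move it at 400 GB/s: {transfer_seconds(full_context, 400):.2f} s")
```

Under these assumptions a single 2-million-token context needs about 262 GB of cache, more than one GPU's memory, so it has to live partly in a storage tier and be moved quickly when it is reused.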

- Feb 6, 2026

- Collin Pace
