Model Compression Economics: How Quantization and Distillation Cut LLM Costs by 90%

Large language models are expensive. Not just in training, but in running them. A single query to a 70-billion-parameter model can cost more than a dollar. For businesses scaling chatbots, customer service tools, or internal AI assistants, those costs add up fast. The good news? You don’t need to keep running massive models to get good results. Model compression - specifically quantization and knowledge distillation - is turning expensive LLMs into lean, affordable tools that run on basic hardware.

Why Your LLM is Burning Cash

Full-precision LLMs store every weight as a 32-bit floating-point number. Sounds precise? It is. But it’s also wildly inefficient. A 7B model like Llama-2 needs about 28 GB of memory just to hold its weights at 32-bit precision (about 14 GB even at 16-bit). On a cloud GPU, that means you’re paying for power, memory, and compute time - all for numbers you don’t really need to be that exact. The math is simple: memory and compute are what you pay for, so cutting model size by 8x can cut inference cost by roughly the same factor. That’s not theory - it’s what one fintech startup in Austin did. They switched from running Llama-2 7B in full precision to an 8-bit quantized version, and their cost per 1,000 queries dropped from $1.20 to $0.07. That’s a 94% reduction. No change in users. No drop in response quality. Just smarter math.

Quantization: Shrinking the Model Without Retraining

Quantization is the easiest way to start cutting costs. It doesn’t require retraining. You just convert those 32-bit weights into smaller numbers - like 8-bit integers (INT8), 4-bit (INT4), or even 2-bit. Think of it like reducing a high-resolution photo to a lower-quality JPEG. You lose some detail, but the image still works. Here’s what happens in practice:

- FP32 → INT8: 4x smaller model. Accuracy drops by less than 1%. Perfect for real-time chatbots.

- FP32 → INT4: 8x smaller. Accuracy drops 2-5%. Still fine for most tasks.

- FP32 → INT2: 16x smaller. Accuracy drops 10-15%. Only use if you’re desperate for space.
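To make the list above concrete, here is a minimal plain-Python sketch (no ML libraries) of symmetric "absmax" quantization, one common post-training scheme: scale a tensor so its largest magnitude maps to the largest signed integer, round every weight, and store the integers plus one float scale. The function names are illustrative, not from any library; production tools such as TensorRT-LLM or bitsandbytes use more refined schemes (per-channel or per-group scales, outlier handling). The sketch also computes weight-only memory for a 7B model, which is where the 4x/8x/16x ratios come from.

```python
"""Minimal absmax (symmetric) quantization sketch; illustrative only."""


def weight_memory_gb(num_params: float, bits: int) -> float:
    """Memory for the weights alone, in decimal GB (ignores activations, KV cache, scales)."""
    return num_params * bits / 8 / 1e9


def quantize_absmax(weights: list[float], bits: int) -> tuple[list[int], float]:
    """Map floats onto signed integers in [-qmax, qmax] using one per-tensor scale."""
    qmax = 2 ** (bits - 1) - 1  # 127 for INT8, 7 for INT4, 1 for INT2
    largest = max(abs(w) for w in weights)
    scale = largest / qmax if largest > 0 else 1.0
    # Round to the nearest grid point and clamp so codes never leave the range.
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes: list[int], scale: float) -> list[float]:
    """Recover approximate floats; this is what the model effectively computes with."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    for bits in (32, 16, 8, 4, 2):
        print(f"7B params at {bits:>2}-bit: {weight_memory_gb(7e9, bits):6.2f} GB")

    weights = [0.42, -1.37, 0.05, 0.91, -0.66, 0.003]
    for bits in (8, 4, 2):
        codes, scale = quantize_absmax(weights, bits)
        restored = dequantize(codes, scale)
        worst = max(abs(w - r) for w, r in zip(weights, restored))
        print(f"INT{bits}: codes={codes} worst-case error={worst:.4f}")
```

Running it shows the trade-off in miniature: the weight footprint of a 7B model falls from 28 GB at 32-bit to 1.75 GB at 2-bit, while the worst-case rounding error grows as the integer grid gets coarser. The symmetric grid also leaves one of the four INT2 codes unused (only -1, 0, and 1 appear).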

Distillation: Training a Tiny Model to Think Like a Giant

Quantization shrinks the model. Distillation replaces it. Knowledge distillation trains a small “student” model to mimic a large “teacher” model. The student doesn’t learn only from raw data labels. It learns from the teacher’s outputs - the full probability distribution over possible answers, including how confident the teacher is in each alternative. It’s like a student studying not just the right answers, but how an expert thinks. Amazon’s 2022 research showed a distilled BART model could be 1/28th the size of the original and still hit 97% of its question-answering accuracy. That’s not just compression - it’s a complete cost reset. Apply the same ratio to a 70B model and you’re no longer paying for 70B parameters; you’re paying for a 2.5B model that could behave almost identically. But here’s the hard part: distillation is expensive to train. The Gemma Team (2024) trained Gemma-2 9B as a distilled student on 8 trillion tokens, a budget on the scale of pretraining a model from scratch. You need a powerful GPU cluster, high-quality data, and time. Most startups can’t afford that upfront cost. That’s why distillation works best for specialized tasks:

- Creating a medical chatbot from a general-purpose LLM

- Building a legal document analyzer from a large legal-trained model

- Turning a multilingual model into a single-language specialist
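The core of the teacher-student idea fits in a few lines. Below is a minimal plain-Python sketch of the classic soft-target loss from Hinton et al. (2015) for a single prediction: the student is penalized both for diverging from the teacher’s temperature-softened probabilities (KL divergence) and for missing the true label (cross-entropy). This is a textbook formulation, not necessarily the recipe Amazon or the Gemma team used; the temperature of 2.0 and the 50/50 weighting `alpha` are illustrative assumptions.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; a higher temperature spreads probability mass out."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: list[float], q: list[float]) -> float:
    """KL(p || q) between two discrete distributions over the same outputs."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(
    student_logits: list[float],
    teacher_logits: list[float],
    true_label: int,
    temperature: float = 2.0,
    alpha: float = 0.5,
) -> float:
    """Weighted sum of a soft (match the teacher) and a hard (match the label) loss."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # Multiply by T^2 so the soft term's gradients stay on a similar scale as T changes.
    soft = kl_divergence(p_teacher, p_student) * temperature**2
    # Ordinary cross-entropy against the true label, at temperature 1.
    hard = -math.log(softmax(student_logits)[true_label])
    return alpha * soft + (1 - alpha) * hard


if __name__ == "__main__":
    teacher = [4.0, 1.5, 0.2]  # confident in answer 0, ranks answer 1 above answer 2
    close = [3.5, 1.2, 0.3]    # a student that mimics the teacher
    far = [0.1, 3.0, 0.2]      # a student that disagrees
    print(distillation_loss(close, teacher, true_label=0))
    print(distillation_loss(far, teacher, true_label=0))
```

The soft term is what carries the "how an expert thinks" signal: even when both students would be graded on the same label, the one whose whole distribution resembles the teacher’s gets the lower loss.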

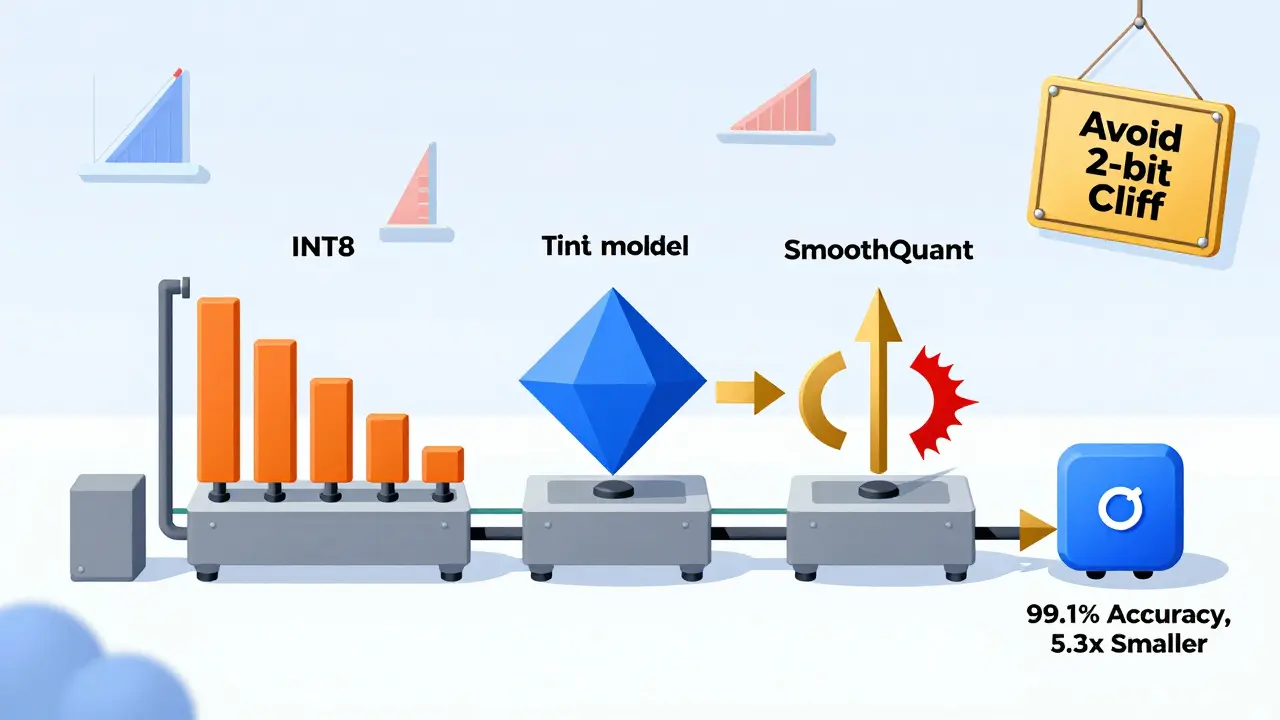

Hybrid Compression: The Real Winner

The best results don’t come from quantization or distillation alone. They come from using both. Amazon’s team combined distillation with 4-bit quantization and got a model that was 95% smaller than the original - with no loss in accuracy on long-form Q&A. Google’s 2024 Gemma-2 used distillation-aware quantization to reduce size by 5.3x while keeping 99.1% of performance. And a new method called BitDistiller (Du et al., 2024) combined self-distillation with quantization to boost 2-bit model accuracy by 7.3%. This is the new standard: start with distillation to shrink the model, then apply quantization to shrink it again. You get the best of both:

- Distillation removes redundancy in the architecture

- Quantization removes redundancy in the numbers
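Because the two techniques remove different kinds of redundancy, their savings multiply. As a back-of-the-envelope model of weight memory (an assumption: it ignores activations, the KV cache, and the small overhead of quantization scales), write N_T and N_S for the teacher’s and student’s parameter counts and b_T and b_S for the bits stored per weight:

```latex
\text{size reduction}
  = \frac{N_T \, b_T}{N_S \, b_S}
  = \underbrace{\frac{N_T}{N_S}}_{\text{distillation}}
    \times
    \underbrace{\frac{b_T}{b_S}}_{\text{quantization}}
```

For example, a hypothetical student with one fifth of the teacher’s parameters (N_T/N_S = 5), quantized from 16-bit to 4-bit (b_T/b_S = 4), ends up 5 × 4 = 20 times smaller: its weights take 5% of the original memory, or 95% less. The split between the two factors here is illustrative, not taken from the Amazon or Gemma-2 results.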

Where It Falls Apart

Compression isn’t magic. Push too far, and the model breaks. At 2-bit precision, Stanford’s CRFM found models failed on complex reasoning tasks - especially those involving numbers, logic, or rare words. Accuracy dropped by 18.5% on low-frequency vocabulary. That’s not acceptable for financial analysis, medical diagnosis, or legal reasoning. Also, not all hardware supports low-precision math. Older CPUs, ARM chips in budget phones, or outdated cloud instances can’t run INT4 efficiently. You’ll see no speed gain - just lower accuracy. And distillation? It’s a black box. If your student model doesn’t learn the right patterns, it’ll hallucinate or miss context. Among Hugging Face users, 41% reported struggling to replicate teacher performance with student models under 1B parameters. The rule? Don’t go below 4-bit unless you’ve tested it on your exact use case. And never skip calibration. Use real user data to set quantization ranges. Don’t guess.
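Calibration means choosing each tensor’s quantization range from data the model will actually see, instead of guessing. The sketch below shows one simple approach, assumed here for illustration rather than taken from any specific tool: try a grid of clipping thresholds on captured activation samples and keep the one with the lowest reconstruction error. The Gaussian samples with two outliers are synthetic stand-ins for activations logged from real user prompts, and the function names are hypothetical.

```python
import random


def fake_quantize(x: float, clip: float, bits: int) -> float:
    """Quantize x onto a symmetric signed grid covering [-clip, clip], then dequantize."""
    qmax = 2 ** (bits - 1) - 1
    scale = clip / qmax
    code = max(-qmax, min(qmax, round(x / scale)))  # values beyond the clip saturate
    return code * scale


def mse(samples: list[float], clip: float, bits: int) -> float:
    """Mean squared error between samples and their quantized versions."""
    return sum((x - fake_quantize(x, clip, bits)) ** 2 for x in samples) / len(samples)


def calibrate_clip(samples: list[float], bits: int, candidates: int = 100) -> float:
    """Search clipping thresholds up to max|x| and keep the lowest-error one.

    The plain absmax range is always one of the candidates, so on these
    samples the result is never worse than skipping calibration.
    """
    top = max(abs(x) for x in samples)
    if top == 0:
        return 1.0  # all-zero samples: any positive range works
    grid = [top * i / candidates for i in range(1, candidates)] + [top]
    return min(grid, key=lambda clip: mse(samples, clip, bits))


if __name__ == "__main__":
    rng = random.Random(0)
    # Synthetic stand-in for activations captured from real traffic:
    # mostly small values plus two rare outliers.
    samples = [rng.gauss(0.0, 1.0) for _ in range(2000)] + [25.0, -30.0]
    naive = max(abs(x) for x in samples)
    tuned = calibrate_clip(samples, bits=4)
    print(f"absmax range:     clip={naive:.2f}  MSE={mse(samples, naive, 4):.4f}")
    print(f"calibrated range: clip={tuned:.2f}  MSE={mse(samples, tuned, 4):.4f}")
```

On data like this the search settles on a range well below the outliers, accepting a large error on two rare values in exchange for a much finer grid on the other 2,000. On your own workload, the samples should come from real prompts, which is exactly why guessing the range is risky.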

What You Should Do Today

If you’re running LLMs and paying more than $0.10 per 1,000 queries, you’re overpaying. Start here:

- Use 8-bit quantization with NVIDIA TensorRT-LLM or Hugging Face Optimum. Test it on your data. If accuracy drops by less than 1%, you’re done.

- If you need more savings, try distillation - but only for a narrow task. Use a teacher model you already have. Train the student on your real user prompts.

- Combine both. Quantize the distilled model. You’ll get 10x-20x size reduction with minimal loss.

- Always test on your actual workload. Don’t rely on benchmarks. Run 1,000 real queries and measure error rates.

- Use SmoothQuant, or a similar outlier-aware method, if you’re quantizing activations as well as weights or pushing to 4-bit. It’s not optional anymore - it’s the baseline.
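SmoothQuant’s central trick is easy to sketch. Activation outliers tend to concentrate in a few input channels, which makes activations hard to quantize while weights stay easy. SmoothQuant divides each activation channel j by a factor s_j and multiplies the matching row of the weight matrix by the same factor, so the layer’s output X·W is mathematically unchanged; the paper’s factor is s_j = max|X_j|^α / max|W_j|^(1 - α), with α = 0.5 a commonly used balance point. The plain-Python version below shows only this smoothing step on made-up toy matrices; a real deployment would compute the maxima over a calibration set and then quantize the smoothed tensors.

```python
Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def smooth(x: Matrix, w: Matrix, alpha: float = 0.5, eps: float = 1e-8):
    """SmoothQuant-style per-input-channel smoothing.

    x: activations, shape [tokens][in_channels]
    w: weights, shape [in_channels][out_channels], so that y = x @ w
    Returns (x_smoothed, w_smoothed, factors).
    """
    n_in = len(w)
    # Per-channel maxima; eps avoids dividing by zero for all-zero channels.
    act_max = [max(max(abs(row[j]) for row in x), eps) for j in range(n_in)]
    w_max = [max(max(abs(v) for v in w[j]), eps) for j in range(n_in)]
    s = [act_max[j] ** alpha / w_max[j] ** (1 - alpha) for j in range(n_in)]
    x_s = [[row[j] / s[j] for j in range(n_in)] for row in x]  # X · diag(s)^-1
    w_s = [[v * s[j] for v in w[j]] for j in range(n_in)]      # diag(s) · W
    return x_s, w_s, s


if __name__ == "__main__":
    # Channel 1 carries activation outliers (made-up numbers).
    x = [[0.5, 40.0, -0.3],
         [-0.2, 35.0, 0.4]]
    w = [[0.1, -0.2],
         [0.05, 0.03],
         [-0.4, 0.2]]
    x_s, w_s, s = smooth(x, w)
    print("factors:", [round(v, 3) for v in s])
    print("max |x| before:", max(abs(v) for r in x for v in r))
    print("max |x| after: ", max(abs(v) for r in x_s for v in r))
    print("output before:", matmul(x, w))
    print("output after: ", matmul(x_s, w_s))
```

The largest activation magnitude drops from 40 to about 1.4, while the outputs before and after match up to floating-point rounding: the difficulty has been moved into the weights, which tolerate it better.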

Market Reality: Compression Is No Longer Optional

Gartner predicts the model compression market will hit $4.7 billion by 2026. Why? Because enterprises are waking up to the cost of scale. ABI Research found 68% of IoT companies now use compression - not because they want to, but because they have to. NVIDIA dominates with TensorRT-LLM. Hugging Face leads with Optimum. But the real winners? The teams who stopped thinking about models as fixed objects and started treating them as adjustable systems. The EU AI Act now requires transparency on compression in high-risk applications. That means if you’re using a compressed model in healthcare or finance, you’ll need to prove it’s reliable. That’s not a burden - it’s a signal. The industry is maturing. Compression isn’t a hack anymore. It’s the standard.

Final Thought: Smaller Isn’t Weaker - It’s Smarter

The biggest mistake companies make is thinking bigger models are better. They’re not. They’re just more expensive. A 7B model distilled and quantized to 4-bit can outperform a full-size 70B model on specific tasks - and cost 1/20th as much to run. The future of AI isn’t about scaling up. It’s about scaling smart. If you’re still running full-precision LLMs in production, you’re not being innovative. You’re being wasteful. Start compressing. Test. Measure. Cut costs. Then do it again.

Is quantization safe for production use?

Yes - but only at 8-bit or higher. 8-bit quantization is now standard in production. Companies like Google, Meta, and Amazon use it in mobile apps and cloud services. Accuracy loss is typically under 1%, and speed gains are 3-4x. Avoid 2-bit or 3-bit unless you’ve tested it on your exact use case. Always calibrate with real data.
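The "under 1%" rule is easy to turn into an automated gate. The sketch below assumes you have a set of real prompts with known-good answers and two callables, `baseline` and `quantized`, wrapping your full-precision and quantized deployments. Those names, the exact-match scoring, and reading "1%" as one percentage point of accuracy are all assumptions to adapt to your own workload.

```python
from typing import Callable, Sequence

Model = Callable[[str], str]  # hypothetical: prompt in, answer out


def accuracy(model: Model, cases: Sequence[tuple[str, str]]) -> float:
    """Share of (prompt, expected_answer) cases answered exactly right."""
    hits = sum(1 for prompt, expected in cases if model(prompt).strip() == expected)
    return hits / len(cases)


def passes_quantization_gate(
    baseline: Model,
    quantized: Model,
    cases: Sequence[tuple[str, str]],
    max_drop_points: float = 1.0,
) -> tuple[bool, float]:
    """Accept the quantized model only if accuracy falls by less than max_drop_points."""
    drop = (accuracy(baseline, cases) - accuracy(quantized, cases)) * 100
    return drop < max_drop_points, drop


if __name__ == "__main__":
    # Toy stand-ins for real deployments: the "quantized" model misses 1 of 1,000 cases.
    cases = [(f"q{i}", f"Q{i}") for i in range(1000)]

    def quantized(prompt: str) -> str:
        return "??" if prompt == "q7" else prompt.upper()

    ok, drop = passes_quantization_gate(str.upper, quantized, cases)
    print(f"accuracy drop: {drop:.2f} points -> {'ship' if ok else 'keep testing'}")
```

Exact match is the simplest possible grader; for free-form answers you would swap in a task-specific check, but the gate logic stays the same.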

Can I distill a model on my laptop?

Not really. Distillation requires massive compute - often equivalent to pretraining the original model. You need a cluster of high-end GPUs and weeks of training time. But you don’t have to distill anything yourself: Hugging Face hosts compact models that are ready to deploy, such as the Gemma-2 2B and 9B instruction-tuned (“-it”) variants, which Google trained with distillation, or TinyLlama, a 1.1B model pretrained from scratch.

What’s the difference between pruning and quantization?

Pruning removes entire neurons or connections that don’t contribute much to output. It cuts the model’s structure. Quantization reduces the precision of the remaining weights - it makes the numbers smaller. Pruning gives you 2-5x size reduction. Quantization gives you 4-8x. They’re often used together: prune first, then quantize.
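Here is the "prune first, then quantize" order as a minimal plain-Python sketch. It uses unstructured magnitude pruning (zeroing the individual connections with the smallest absolute weights), which is one simple pruning criterion among several; removing whole neurons (structured pruning) would instead delete entire rows or columns. The 50% sparsity and the weight values are illustrative assumptions. Zeroed weights only save memory or time if they are stored in a sparse format or the hardware can skip them.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the given fraction of weights with the smallest absolute values."""
    k = int(len(weights) * sparsity)
    smallest_first = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(smallest_first[:k])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor INT8 quantization of whatever weights survived pruning."""
    largest = max(abs(w) for w in weights)
    scale = largest / 127 if largest > 0 else 1.0
    return [max(-127, min(127, round(w / scale))) for w in weights], scale


if __name__ == "__main__":
    weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, 0.4, -0.9]
    pruned = magnitude_prune(weights, sparsity=0.5)  # step 1: drop weak connections
    codes, scale = quantize_int8(pruned)             # step 2: shrink the remaining numbers
    print("pruned:", pruned)
    print("int8 codes:", codes, "scale:", round(scale, 5))
    print("nonzero weights kept:", sum(1 for c in codes if c != 0), "of", len(codes))
```

Pruning first means the quantization range is set only by the weights that matter, and the zeros stay exactly zero after quantization.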

Do I need special hardware for quantization?

For best results, yes. Modern GPUs (NVIDIA Ampere or newer) and Apple M-series chips support INT8/INT4 natively. Older CPUs and low-end cloud instances won’t speed up much - you’ll just get smaller models with no performance gain. If you’re on AWS or Azure, check if your instance type supports Tensor Cores or INT8 inference.

Which tools should I use to compress my LLM?

For quantization: Use NVIDIA’s TensorRT-LLM, Hugging Face Optimum, or Hugging Face Transformers with bitsandbytes. For distillation: Try Hugging Face’s Transformers library, using DistilBERT’s distillation setup as a reference or a small model such as TinyLlama as the student. For automated pipelines, check out OctoML or Microsoft’s upcoming Adaptive Compression Engine. Start with Optimum - it’s free, well-documented, and works with most models.

Is model compression legal under new AI regulations?

Yes - but you must disclose it. The EU AI Act and similar frameworks require transparency about modifications that affect reliability. If you compress a model used in healthcare or finance, you need to document the technique, the accuracy loss, and how you validated performance. Compression itself isn’t banned - hiding it is.

- Dec 29, 2025

- Collin Pace

Written by Collin Pace
