Model Lifecycle Management: Versioning, Deprecation, and Sunset Policies Explained

Every AI model you deploy today will eventually break. Not because it’s flawed, but because the world around it changes. Data shifts. Regulations tighten. Business needs evolve. Without a clear plan for versioning, deprecation, and sunset policies, your AI systems become liabilities, not assets.

Why Model Lifecycle Management Isn’t Optional Anymore

In 2019, only 10% of enterprises used AI models in production. By 2024, that number jumped to 37%. But here’s the catch: 53% of those companies had no formal way to manage those models. They didn’t track which version was live. They didn’t know when to retire old ones. And when things went wrong, they spent weeks digging through logs trying to figure out what changed.

This isn’t just a technical problem. It’s a legal one. In healthcare, the FDA requires diagnostic AI models to roll back to a known-good version within 15 minutes if performance drops. In finance, FINRA Rule 4511 demands seven-year audit trails for fraud detection models. If you can’t prove which version was running when a decision was made, you’re not just at risk of a system outage; you’re at risk of fines, lawsuits, or worse.

Model Lifecycle Management (MLM) fixes this. It’s not about fancy tools. It’s about discipline. Versioning tells you what changed. Deprecation tells you when to stop using it. Sunset tells you when to turn it off for good.

Versioning: The Foundation of Trust

Versioning sounds simple: save a copy when you update the model. But in AI, it’s not just about the code. It’s about everything that went into making that model work. A real ML version includes:

- The exact training data used (including timestamps and checksums)

- The hyperparameters (learning rate, batch size, etc.)

- The model weights and architecture

- The environment it was trained in (Python version, libraries, GPU type)

- The performance metrics (accuracy, precision, recall, confidence intervals)

- Who approved it and why
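The checklist above can be captured as a single immutable record. The following is a minimal sketch, not a reference implementation: the `ModelVersion` class, its field names, and every value in the usage lines are hypothetical placeholders, and a real system would checksum the actual data and weight files rather than short byte strings.

```python
import hashlib
import json
import platform
from dataclasses import asdict, dataclass, field


def sha256_of(data: bytes) -> str:
    """Return the hex SHA-256 digest of a byte string."""
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class ModelVersion:
    version: str
    training_data_sha256: str
    weights_sha256: str
    hyperparameters: dict
    metrics: dict
    approved_by: tuple
    approval_reason: str
    # Environment detail captured automatically when the record is created.
    python_version: str = field(default_factory=platform.python_version)

    def to_json(self) -> str:
        """Serialize with sorted keys so the same record always yields the same text."""
        return json.dumps(asdict(self), sort_keys=True, indent=2)

    def fingerprint(self) -> str:
        """Checksum of the whole record, useful for detecting later tampering."""
        return sha256_of(self.to_json().encode("utf-8"))


# Placeholder values for illustration only.
record = ModelVersion(
    version="2.1.0",
    training_data_sha256=sha256_of(b"placeholder training data"),
    weights_sha256=sha256_of(b"placeholder weights"),
    hyperparameters={"learning_rate": 0.001, "batch_size": 64},
    metrics={"accuracy": 0.94, "precision": 0.91, "recall": 0.89},
    approved_by=("reviewer_a", "reviewer_b"),
    approval_reason="Retrained on refreshed data",
)
print(record.fingerprint())
```

Storing the fingerprint next to the record gives a cheap way to show, later on, that the recorded metadata has not been edited since approval.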

Deprecation: When to Stop Using a Model

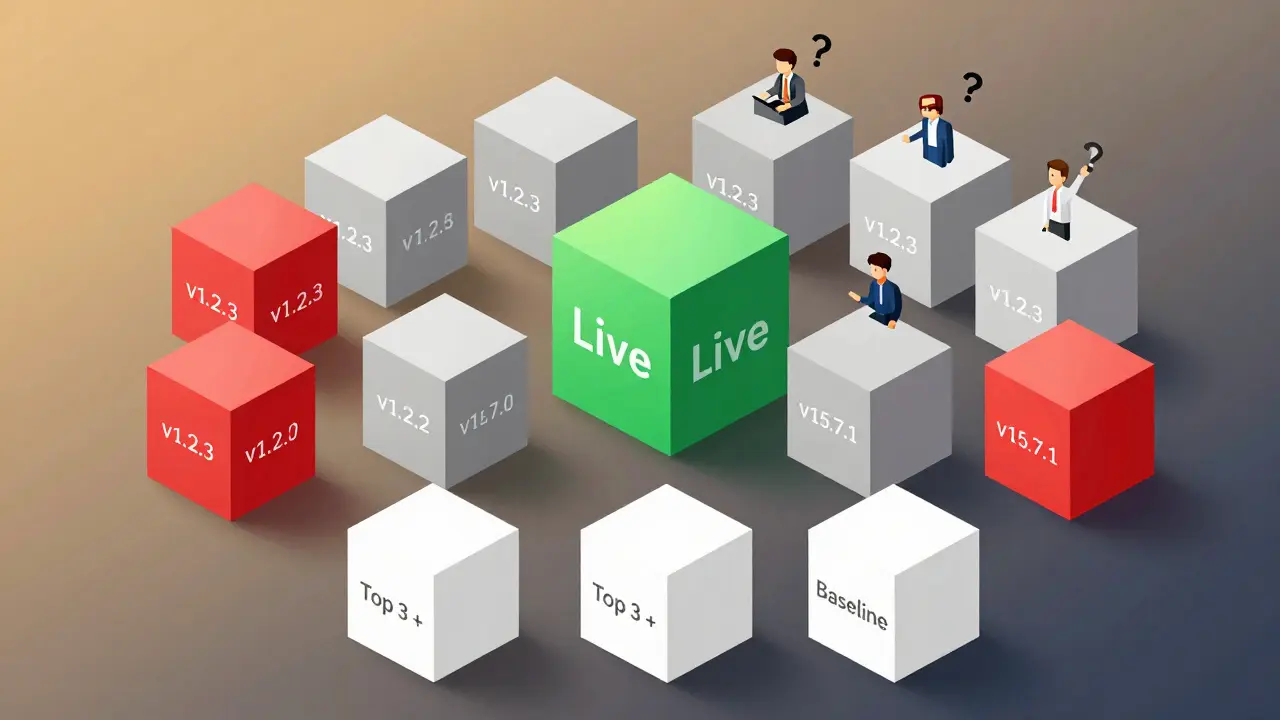

Not every model needs to live forever. But you can’t just delete it. You need a plan. Deprecation means: “This version is no longer recommended. Use the next one instead.” It’s not a shutdown. It’s a transition.

The biggest mistake companies make? Letting old versions run indefinitely. GitHub’s open-source model registry lets teams keep every version forever. That sounds safe, until you have 14.7 versions per model in production. Now you’ve got version sprawl. Developers can’t tell which one is live. Monitoring tools get confused. Testing becomes impossible.

Enterprise-grade deprecation policies fix this. They enforce time limits:

- Non-production models: retired after 180 days

- Low-risk models (e.g., recommendation engines): sunset in 365 days

- High-risk models (e.g., credit scoring, medical diagnosis): sunset in 90 days
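These time limits are easy to encode as data so they are applied the same way every time. The sketch below uses the day counts from the list above. The tier names are hypothetical labels, and it assumes the clock starts on the day a version is marked deprecated, which the policy itself does not specify.

```python
from datetime import date, timedelta

# Day counts come from the list above; the tier names are hypothetical.
SUNSET_WINDOW_DAYS = {
    "non_production": 180,
    "low_risk": 365,
    "high_risk": 90,
}


def sunset_date(deprecated_on: date, tier: str) -> date:
    """Date by which a deprecated version must be retired.

    Assumption: the window is counted from the deprecation date.
    """
    return deprecated_on + timedelta(days=SUNSET_WINDOW_DAYS[tier])


def status(deprecated_on: date, tier: str, today: date) -> str:
    """Return 'deprecated' inside the window and 'sunset' once it has passed."""
    if today >= sunset_date(deprecated_on, tier):
        return "sunset"
    return "deprecated"


print(sunset_date(date(2025, 1, 1), "high_risk"))  # 2025-04-01
```

An unknown tier raises a `KeyError`, which is deliberate: a model with no risk classification should fail loudly rather than quietly get the longest window.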

Sunset: Turning Off the Lights for Good

Deprecation says, “Stop using this.” Sunset says, “This is gone. Forever.” Sunset policies are legally binding. Once a model hits its sunset date, it’s automatically taken offline. No exceptions. No “we’ll keep it running just in case.”

Why? Because compliance isn’t optional. The EU AI Act, FDA SaMD guidelines, and upcoming NIST AI 100-4 standards all require automated sunset workflows. If you can’t prove a model was retired on time, you’re in violation.

Leading platforms now integrate sunset directly into the model registry. AWS Model Registry, for example, lets you set a sunset date during registration. At that time, it automatically shifts traffic to the replacement model. No one has to remember. No one has to click a button.

But here’s what most people miss: sunset isn’t just about turning off a model. It’s about preserving its history. Even after sunset, the model’s metadata (its training data, metrics, approvals) must stay archived. Why? Because if a customer sues over a decision made two years ago, you need to show exactly what model made it.

How to Build a Real MLM System

You don’t need to buy a $100K platform to start. But you do need structure. Here’s how to build a working system in 8-12 weeks:

- Start with semantic versioning (SemVer-ML): Use MAJOR.MINOR.PATCH but define what each means. MAJOR = data or logic changed. MINOR = performance improved without breaking changes. PATCH = bug fix.

- Store everything in immutable storage: Use SHA-256 checksums for data and model artifacts. Once saved, never overwrite. Ever.

- Link versions to approvals: Require at least two people to sign off before a model goes live. Record who, when, and why.

- Set deprecation rules by risk level: High-risk = 90 days. Medium = 180 days. Low = 365 days.

- Automate sunset: Use tools that auto-disable models on their sunset date. No exceptions.

- Archive everything: Even retired models need a digital tombstone with full metadata.
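Several of the steps above fit in a few dozen lines. The sketch below is a toy in-memory registry, not a production design: `bump`, `Entry`, `Registry`, and their methods are all hypothetical names. It shows the SemVer-ML bump rules, a no-overwrite check, the two-approver gate, and an automated sunset sweep that moves retired versions into a tombstone archive instead of deleting them.

```python
from dataclasses import dataclass, field
from datetime import date


def bump(version: str, change: str) -> str:
    """Apply the SemVer-ML rules: change is 'major', 'minor', or 'patch'."""
    major, minor, patch = (int(part) for part in version.split("."))
    if change == "major":   # data or logic changed
        return f"{major + 1}.0.0"
    if change == "minor":   # performance improved without breaking changes
        return f"{major}.{minor + 1}.0"
    if change == "patch":   # bug fix
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown change type: {change}")


@dataclass
class Entry:
    version: str
    sunset_on: date
    approvals: list = field(default_factory=list)  # (who, when, why) tuples
    live: bool = False


class Registry:
    def __init__(self):
        self.entries = {}      # active and deprecated versions
        self.tombstones = {}   # retired versions, metadata kept for audits

    def register(self, version: str, sunset_on: date) -> None:
        if version in self.entries or version in self.tombstones:
            raise ValueError(f"{version} already exists; versions are never overwritten")
        self.entries[version] = Entry(version, sunset_on)

    def approve(self, version: str, who: str, when: date, why: str) -> None:
        self.entries[version].approvals.append((who, when, why))

    def go_live(self, version: str) -> None:
        entry = self.entries[version]
        approvers = {who for who, _, _ in entry.approvals}
        if len(approvers) < 2:
            raise PermissionError("at least two distinct approvers are required")
        entry.live = True

    def enforce_sunset(self, today: date) -> list:
        """Take every version past its sunset date offline and archive it."""
        retired = []
        for version, entry in list(self.entries.items()):
            if today >= entry.sunset_on:
                entry.live = False
                self.tombstones[version] = self.entries.pop(version)
                retired.append(version)
        return retired
```

In practice `enforce_sunset` would run on a schedule, for example a daily job, so that retirement never depends on someone remembering a date.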

What Happens When You Skip This

The consequences aren’t theoretical. UnitedHealth’s 2022 incident? A biased model kept running because no one knew which version was live. It denied care to 2.3 million patients. The HHS fined them $12 million. The root cause? No version tracking. No deprecation policy. No sunset plan.

Or take a smaller case: a fintech startup used MLflow to track models. They had 87 versions of their fraud detection model. No one knew which one was in production. When fraud rates spiked, they spent three weeks tracing it back to a data version mismatch. Lost $2.1M in revenue. Lost customer trust.

These aren’t edge cases. They’re symptoms of ignoring MLM.

Where the Industry Is Headed

The market for MLM tools is growing fast: $3.2 billion in 2023, projected to hit $14.7 billion by 2028. Why? Because regulators are forcing change. The EU AI Act, FDA SaMD rules, and NIST’s upcoming AI 100-4 guidelines will make versioning, deprecation, and sunset mandatory for any company doing business in regulated industries. 92% of analysts predict these will be non-negotiable by 2027.

Even outside regulation, the business case is clear. Organizations with strong MLM practices see 3.2x higher ROI on AI. They have 37% fewer production incidents. They recover from failures 28% faster.

The future belongs to teams that treat AI like a product, not a prototype. That means treating every model like it’s going to be audited, scrutinized, and held accountable.

FAQ

What’s the difference between deprecation and sunset in AI models?

Deprecation means a model is no longer recommended and should be replaced, but it’s still running. Sunset means the model is permanently turned off and can no longer be used. Deprecation is a warning. Sunset is enforcement.

Can I use open-source tools like MLflow for model lifecycle management?

Yes, but only for small teams or low-risk models. MLflow covers basic versioning but lacks automated deprecation, sunset workflows, audit trails, and full lineage tracking. In regulated industries like finance or healthcare, it’s not enough. Enterprise platforms like ModelOp or Domino Data Lab are built for compliance and automation.

How often should I update my AI models?

There’s no fixed schedule. Update when performance drops, data drifts, or regulations change. High-risk models (fraud, medical diagnosis) may need monthly updates. Low-risk models (recommendations, chatbots) can go months or years. The key is to let monitoring, not the calendar, trigger updates.
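A monitoring-based trigger can be as simple as comparing recent performance against the baseline recorded when the version was approved. This is a minimal sketch under stated assumptions: the `needs_update` function is hypothetical, accuracy stands in for whatever metric you track, and the 0.05 default threshold is a placeholder rather than a recommended value.

```python
from statistics import mean


def needs_update(baseline_accuracy, recent_accuracies, max_drop=0.05):
    """Return True when mean recent accuracy fell more than max_drop below baseline.

    max_drop is a placeholder threshold; choose one that matches the model's risk level.
    """
    if not recent_accuracies:
        return False  # no fresh measurements, nothing to act on
    return baseline_accuracy - mean(recent_accuracies) > max_drop


print(needs_update(0.94, [0.93, 0.92, 0.94]))  # False
print(needs_update(0.94, [0.85, 0.86, 0.84]))  # True
```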

Do I need to keep old model versions forever?

No. But you must keep their metadata (training data, metrics, approvals) forever for audits. The actual model files can be deleted after sunset. Archive the story, not the binary. Most companies keep only the top 3 performing versions plus the baseline to reduce storage costs.

What’s the biggest mistake companies make with model lifecycle management?

Treating versioning as an afterthought. Many teams build the model, deploy it, and forget about it. When it breaks, they panic. The best teams build versioning, deprecation, and sunset into the model’s design from day one. It’s not a feature. It’s a requirement.

How do I convince my team to adopt formal MLM practices?

Show them the cost of failure. Use real examples: UnitedHealth’s $12M fine, the three-week debugging nightmare, the $2.1M revenue loss. Frame it as risk reduction, not just tech improvement. And start small: pick one high-risk model, apply full versioning and sunset, and measure how much faster you recover from issues.

- Sep, 24 2025

- Collin Pace
