Prompt Metrics for Generative AI: How to Measure Clarity, Coverage, and Compliance

Most people think that if you ask an AI a question, it will give you a good answer. But that’s not true. Ask the same question three different ways, and you’ll get three wildly different results. One might be vague. Another might be wrong. The third could be dangerously off-topic. Why? Because prompt design isn’t just about wording. It’s about structure, intent, and measurement.

If you’re using generative AI at work, you’re already relying on prompts. But are you measuring them? Most teams aren’t. They guess. They tweak. They hope. And that’s why so many AI projects fail before they even start. The truth is, you can’t improve what you don’t measure. That’s where clarity, coverage, and compliance come in.

Clarity: What Your Prompt Actually Says

Clarity isn’t about being fancy. It’s about being precise. A prompt like "Write a report" tells the AI nothing. But "Write a 500-word summary of Q1 sales trends for the Midwest region, using only data from our internal CRM, in a formal tone for executives"? That’s clear.

Research shows that small changes in wording can swing accuracy by up to 76%. One study tested the same question with three different structures: open-ended, structured with bullet points, and constrained with format rules. The version with structure got answers that were 68% more accurate. Why? Because AI doesn’t read between the lines. It follows instructions literally.

Every good prompt has four parts:

- Request: What you want done

- Framing context: Who the audience is, what tone to use, what assumptions to make

- Format specification: Bullet points? Table? Paragraphs? Word count?

- References: "Use only this dataset," "Don’t cite external sources," "Refer to our last response"

If any of those are missing, the AI fills in the gaps, and it usually guesses wrong. A vague request leads to vague answers. No format? You get a wall of text when you needed a chart. No context? The AI might invent facts. That’s not creativity. That’s risk.
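One way to make the four-part structure checkable is to store each part as a separate field and flag the empty ones before the prompt is sent. The sketch below is only an illustration: the `PromptSpec` class and its field names are hypothetical, not part of any library.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the four parts of a prompt."""
    request: str
    framing: str = ""
    output_format: str = ""
    references: list[str] = field(default_factory=list)

    def missing_parts(self) -> list[str]:
        """Return the names of any parts that were left empty."""
        parts = {
            "request": self.request,
            "framing": self.framing,
            "output_format": self.output_format,
            "references": self.references,
        }
        return [name for name, value in parts.items() if not value]

    def render(self) -> str:
        """Join the non-empty parts into a single prompt string."""
        lines = [self.request, self.framing, self.output_format, *self.references]
        return "\n".join(line for line in lines if line)


vague = PromptSpec(request="Write a report")
print(vague.missing_parts())  # ['framing', 'output_format', 'references']

full = PromptSpec(
    request="Write a summary of Q1 sales trends for the Midwest region.",
    framing="Audience: executives. Tone: formal.",
    output_format="Length: 500 words.",
    references=["Use only data from our internal CRM."],
)
print(full.missing_parts())  # []
```

A check like this only confirms that each part is present. Whether the content of each part is any good still takes a human read.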

Coverage: What You Didn’t Say But Should Have

Think of your prompt like an iceberg. The part above water is your actual question. Below the surface? All the hidden context: the real goal, the unspoken constraints, the stakeholders involved, the consequences of a bad answer.

Most prompts only show the tip. But the real work happens under the water. For example, if you ask an AI to "draft an email to a client," you might get a polite, generic reply. But what if the client is angry? What if this email could trigger a legal review? What if your company’s tone policy requires a specific apology structure? If you don’t include that, the AI won’t know it matters.

This is where the PROMPT framework helps:

- Purpose: Why are you asking this?

- Requirements: What must be included or excluded?

- Output: What format, length, style?

- Metrics: How will you judge if it’s good?

- Testing: Have you tried it with real users or edge cases?

Teams that use this framework see a 40% drop in rework. Why? Because they’re not just asking for an answer; they’re defining success.

Another example: A marketing team asked AI to "generate ad copy for a new product." They got 10 variations. All were well-written. None mentioned the product’s key feature: 24/7 customer support. Why? Because the prompt didn’t say it. The team hadn’t even realized they’d left it out. Once they added it, conversions went up 18%.
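A gap like the missing key feature can be caught with a very simple check: list the points every output must mention, then count how many outputs mention all of them. This is a minimal sketch that assumes plain substring matching is good enough. The function names and the sample drafts are made up for illustration.

```python
def missing_points(output: str, required_points: list[str]) -> list[str]:
    """Return the required phrases that do not appear in the output (case-insensitive)."""
    text = output.lower()
    return [point for point in required_points if point.lower() not in text]


def coverage_rate(outputs: list[str], required_points: list[str]) -> float:
    """Fraction of outputs that mention every required point."""
    if not outputs:
        return 0.0
    covered = sum(1 for out in outputs if not missing_points(out, required_points))
    return covered / len(outputs)


# Made-up ad copy, for illustration only.
drafts = [
    "Meet the gadget that keeps up with you.",
    "Questions at 3 a.m.? Our 24/7 customer support has you covered.",
]
required = ["24/7 customer support"]

print(missing_points(drafts[0], required))  # ['24/7 customer support']
print(coverage_rate(drafts, required))      # 0.5
```

Substring matching misses paraphrases such as "round-the-clock help", so treat a low rate as a prompt to look closer, not as a verdict.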

Compliance: Does the AI Do What It’s Told?

Clarity and coverage mean nothing if the AI ignores them. That’s where compliance kicks in.

Compliance means the AI follows your rules. Not just the words, but the spirit. If you say "use only this data," does it? If you say "no jargon," does it? If you say "keep it under 300 words," does it?

Google Cloud’s Vertex AI and Microsoft’s Azure both track this with a metric called instruction following. It’s not about whether the answer is smart; it’s about whether it listened. A response can be brilliant, but if it ignores your format or skips your constraints, it’s useless.

Here’s how to test it:

- Ask for a list. Does it give you a list, or a paragraph?

- Ask for a summary. Does it repeat everything? Or cut to the point?

- Ask to avoid a word. Does it slip it in anyway?

- Ask for a tone. Is it casual? Professional? Sarcastic? Or just robotic?
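Some of these tests can be scripted. The sketch below covers three mechanical checks: a word limit, banned words, and list format. The function names are hypothetical, and the rules are deliberately simple. Tone and summary quality are not covered because they need a human or model-based judgment.

```python
import re


def within_word_limit(text: str, limit: int) -> bool:
    """True if the text has at most `limit` whitespace-separated words."""
    return len(text.split()) <= limit


def avoids_words(text: str, banned: list[str]) -> bool:
    """True if none of the banned words appear as whole words (case-insensitive)."""
    return not any(
        re.search(rf"\b{re.escape(word)}\b", text, flags=re.IGNORECASE)
        for word in banned
    )


def is_bulleted_list(text: str) -> bool:
    """True if every non-blank line starts with a bullet or number marker."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    marker = re.compile(r"(?:[-*•]|\d+[.)])\s+")
    return bool(lines) and all(marker.match(line) for line in lines)


# Made-up response, for illustration only.
response = "- Revenue rose in the Midwest\n- Costs were flat"

checks = {
    "under 300 words": within_word_limit(response, 300),
    "no jargon": avoids_words(response, ["synergy", "leverage"]),
    "is a list": is_bulleted_list(response),
}
print(checks)
```

Running the same checks over every output from a prompt gives a pass rate per rule, which is easier to compare across prompt versions than a gut feeling.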

Compliance also includes safety. If your prompt asks for advice on a sensitive topic, such as medical treatment or legal action, does the AI refuse to give harmful advice? Or does it guess anyway? That’s not just a quality issue. It’s a legal one.

Organizations that track compliance see fewer errors, fewer escalations, and less liability. One healthcare provider started measuring compliance after an AI suggested an unapproved treatment. They added three new rules to every prompt: "Cite only peer-reviewed sources," "Do not recommend off-label treatments," and "If uncertain, say so." Within two months, risky outputs dropped by 92%.

Putting It All Together: A Real-World Workflow

Here’s how a team in Madison uses these metrics daily:

- Write: Draft the prompt using the four-part structure.

- Test: Run it 3-5 times. Look for variation.

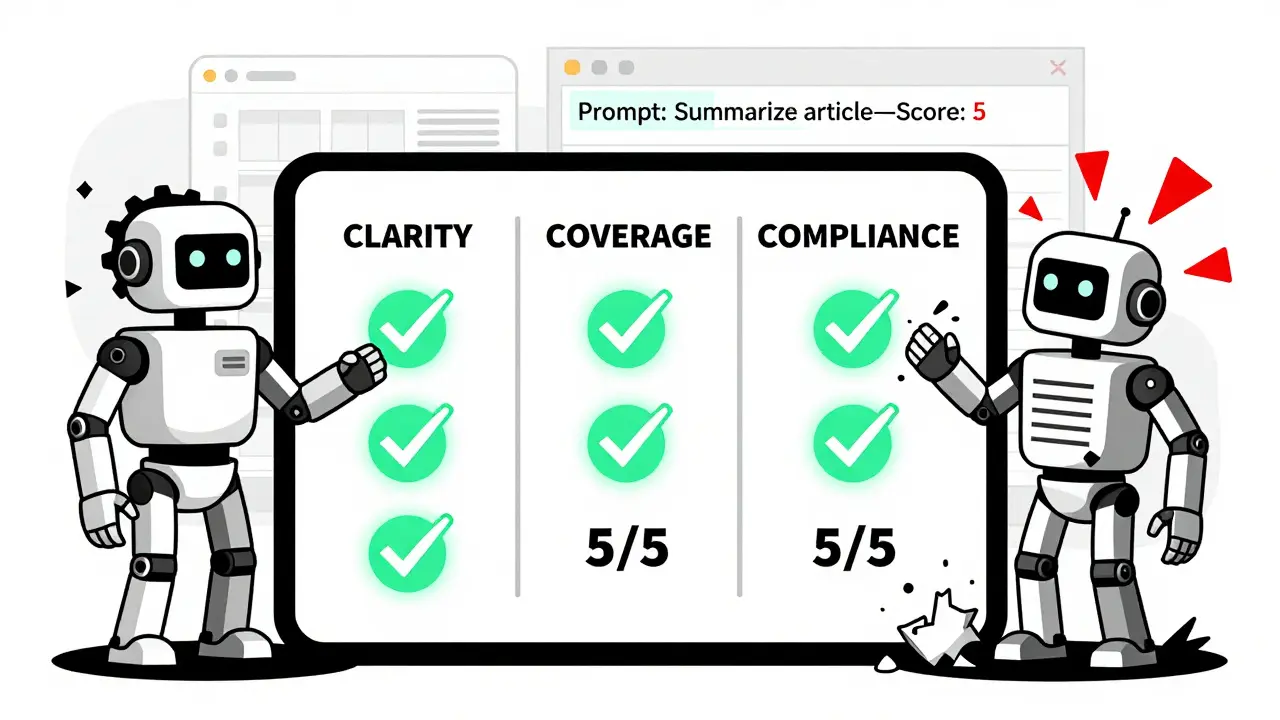

- Measure: Score each output on clarity (did it understand?), coverage (did it answer everything?), and compliance (did it follow rules?).

- Refine: Tweak the prompt. Add context. Tighten constraints.

- Automate: Save the best version as a template. Use it for every similar task.
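The Test step asks you to look for variation across runs. One rough way to put a number on that is to compare every pair of outputs with Python's standard `difflib.SequenceMatcher` and average the similarity ratios. This is only a sketch: character-level similarity is a crude proxy for "same answer", and the function name is an assumption.

```python
from difflib import SequenceMatcher
from itertools import combinations


def mean_pairwise_similarity(outputs: list[str]) -> float:
    """Average SequenceMatcher ratio over all pairs; 1.0 means identical outputs."""
    pairs = list(combinations(outputs, 2))
    if not pairs:
        return 1.0  # zero or one output: nothing to disagree with
    total = sum(SequenceMatcher(None, a, b).ratio() for a, b in pairs)
    return total / len(pairs)


# Made-up outputs from three runs of the same prompt.
runs = [
    "Sales rose in the Midwest during Q1.",
    "Sales rose in the Midwest during Q1.",
    "The weather was mild this spring.",
]
print(round(mean_pairwise_similarity(runs), 2))
```

A score near 1.0 suggests the prompt pins the answer down. A low score means the runs disagree, and the prompt probably leaves too much open.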

They use a simple spreadsheet:

| Prompt | Clarity (1-5) | Coverage (1-5) | Compliance (1-5) | Score (average) |
|---|---|---|---|---|
| "Summarize this article" | 2 | 1 | 3 | 2 |
| "Summarize the article in 150 words. Focus on the funding round. Use bullet points. Do not mention competitors." | 5 | 5 | 5 | 5 |

They set a threshold: any prompt scoring below 3.5 gets rewritten. No exceptions.
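The same rule is easy to script. The sketch below assumes the overall score is the unweighted average of the three ratings, which matches both rows in the table. The function names are illustrative.

```python
THRESHOLD = 3.5  # prompts scoring below this get rewritten


def overall_score(clarity: int, coverage: int, compliance: int) -> float:
    """Unweighted average of the three 1-5 ratings (an assumption, see above)."""
    return (clarity + coverage + compliance) / 3


def needs_rewrite(clarity: int, coverage: int, compliance: int) -> bool:
    """True if the average falls below the rewrite threshold."""
    return overall_score(clarity, coverage, compliance) < THRESHOLD


# The two rows from the table: (prompt, clarity, coverage, compliance).
rows = [
    ("Summarize this article", 2, 1, 3),
    ("Summarize the article in 150 words. ...", 5, 5, 5),
]
for prompt, *ratings in rows:
    print(prompt, overall_score(*ratings), needs_rewrite(*ratings))
```

If one dimension matters more to your team, for example compliance in a regulated setting, a weighted average or a per-dimension minimum may fit better than a single mean.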

Why This Matters More Than You Think

This isn’t about making AI smarter. It’s about making your team smarter.

Companies that measure prompt quality see:

- 30% faster task completion

- 50% fewer revisions

- 65% higher user satisfaction

- 40% reduction in legal or reputational risk

And the opposite is true. Poor prompts mean wasted time, bad decisions, and damaged trust. One financial firm lost $2.3 million in client deals because AI-generated reports contained outdated tax rules. The prompt didn’t specify a cutoff date. They didn’t measure compliance. They assumed.

There’s no such thing as a "good" AI. There’s only a good prompt.

Start Measuring Today

You don’t need fancy tools. You don’t need engineers. You just need to start asking three questions before every AI request:

- Is it clear? Could someone else read this and know exactly what to do?

- Is it complete? Have I included all the context, constraints, and references?

- Will it be followed? Does the AI have clear rules to obey, and will it obey them?

Write it down. Test it. Score it. Improve it. Repeat.

Because the future of AI isn’t about better models. It’s about better prompts. And if you’re not measuring them, you’re flying blind.

What’s the difference between prompt clarity and prompt coverage?

Clarity is about how precisely your prompt communicates your request. If your prompt is vague, the AI guesses, and usually gets it wrong. Coverage is about whether your prompt includes all the hidden context the AI needs to give a complete answer. A prompt can be clear and still incomplete: "Write a 500-word report on Q1 sales" is unambiguous, but a well-covered prompt adds who it’s for, what data to use, what tone, and what to leave out. Clarity gets you the right format. Coverage gets you the right answer.

Can AI models be trained to follow prompts better?

In principle, yes: fine-tuning can teach a model a house style or a set of rules. But most teams work with off-the-shelf LLMs that were trained on massive general datasets, not on their specific rules, and retraining one to obey a company style guide is rarely practical. That’s why prompt engineering exists: to guide the model in real time. For most teams, the best way to improve compliance isn’t to change the model; it’s to change the prompt. Add structure. Add constraints. Add examples. That’s what steers the AI to follow your lead.

Do I need software to measure prompt metrics?

No. You can start with a simple spreadsheet. Score each prompt on clarity, coverage, and compliance using a 1-5 scale. Track which prompts work best. Over time, you’ll build a library of high-scoring templates. Tools like Google’s Vertex AI or Azure’s evaluation features help automate scoring, but they’re not required. Many teams get better results just by asking three questions before every request.

How do I know if my prompt is too complex?

If the prompt is longer than a paragraph, or if you’re using more than 5 constraints, it might be too complex. Overloading a prompt can confuse the AI. Instead of cramming everything in, break tasks into steps. Use a chain of prompts: one for research, one for summarizing, one for formatting. Simpler prompts are more reliable. And if you’re testing and still getting bad results, simplify further.
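A chain of prompts can be sketched as a loop that feeds each step's output into the next step. In the example below, `model` stands for whatever function calls your AI system. It is a placeholder, as is `fake_model`, which only records what it was sent so the flow can be inspected without a real model.

```python
from typing import Callable


def run_chain(model: Callable[[str], str], steps: list[str], source: str) -> str:
    """Run each instruction in order, passing the previous output along."""
    current = source
    for instruction in steps:
        current = model(f"{instruction}\n\n{current}")
    return current


calls: list[str] = []


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call: logs the prompt and returns a label."""
    calls.append(prompt)
    return f"output {len(calls)}"


steps = [
    "List the key facts in the text below.",
    "Summarize these facts in 150 words.",
    "Format the summary as bullet points.",
]
result = run_chain(fake_model, steps, "raw meeting notes")
print(result)      # output 3
print(len(calls))  # 3
```

Because each step has one job and a short instruction, a bad result can be traced to the step that produced it, then fixed there without touching the rest.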

What’s the most common mistake teams make with prompts?

Assuming the AI knows what they mean. People write prompts like they’re talking to a coworker: "Hey, can you draft that email?" But AI doesn’t know your company culture, your tone, or your unspoken rules. The biggest mistake is leaving out context. Always answer: Who? What? Why? How? And What not to do?

- Feb 12, 2026

- Collin Pace
