Supply Chain Security for LLM Deployments: Securing Containers, Weights, and Dependencies

Why Your LLM Deployment Is More Vulnerable Than You Think

Most companies think securing their large language model (LLM) means locking down the API endpoint or filtering user inputs. That’s like locking your front door while leaving the back window wide open. The real risk isn’t the model itself; it’s everything that goes into building and running it: the containers, the weights, and the dozens of libraries it depends on. In 2025, supply chain attacks on AI systems are no longer theoretical. They’re happening, and they’re getting smarter.

According to OWASP’s 2025 Top 10 for LLM Applications, supply chain risks (LLM03:2025) are now the third most critical threat category. Why? Because an LLM isn’t just code. It’s a stack of pre-trained models, third-party plugins, container images, and Python packages, many of them pulled from public repositories with no oversight. A single compromised dependency can let attackers steal data, manipulate outputs, or turn your model into a backdoor.

Container Security: The First Line of Defense

Containers are the default runtime for LLMs today. Over 89% of enterprises use Docker or Kubernetes to deploy models because they’re fast, scalable, and easy to replicate. But that ease comes at a cost: most container images are built from unverified base images. Some are pulled from Docker Hub with no audit trail. Others include outdated OS packages with known CVEs.

Here’s what you need to do: never run a model in a container without scanning it first. Syft generates a Software Bill of Materials (SBOM) listing every package inside an image, and Grype scans the image (or that SBOM) for known vulnerabilities. A 2025 Wiz Academy report found that 62% of public LLM container images contained at least one high-severity vulnerability. The fix? Build only on trusted base images, such as Google’s distroless or Red Hat’s UBI, and sign the images you produce with Cosign or Notary. Every container you deploy should carry a cryptographic signature proving it came from your build pipeline, not some random GitHub repo.
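Syft, Grype, and Cosign are command-line tools, so there is no point reimplementing them here. Below is a minimal sketch of one complementary check a pipeline could run before building. It rejects Dockerfile base images that don’t come from a trusted registry or aren’t pinned to an immutable digest. The allowlist (`gcr.io/distroless/` and `registry.access.redhat.com/ubi`) is an assumption for illustration. Substitute the registries your team actually trusts.

```python
import re

# Assumption: the registries your team trusts. These prefixes cover Google's
# distroless images and Red Hat's UBI images; edit them to match your policy.
TRUSTED_PREFIXES = (
    "gcr.io/distroless/",
    "registry.access.redhat.com/ubi",
)

# Captures the image reference from "FROM [--flag=value ...] image [AS name]".
FROM_RE = re.compile(r"^\s*FROM\s+(?:--\S+\s+)*(\S+)", re.IGNORECASE)
# Captures the stage name from a trailing "AS name".
ALIAS_RE = re.compile(r"\sAS\s+(\S+)\s*$", re.IGNORECASE)


def check_base_images(dockerfile_text: str) -> list[str]:
    """Return policy problems found in a Dockerfile's FROM lines."""
    problems = []
    stages = set()  # names of earlier build stages, which are safe to reference
    for line in dockerfile_text.splitlines():
        match = FROM_RE.match(line)
        if not match:
            continue
        image = match.group(1)
        if image not in stages:
            if not image.startswith(TRUSTED_PREFIXES):
                problems.append(f"untrusted base image: {image}")
            elif "@sha256:" not in image:
                problems.append(f"base image not pinned by digest: {image}")
        alias = ALIAS_RE.search(line)
        if alias:
            stages.add(alias.group(1))
    return problems
```

Digest pinning matters because a tag like `latest` can be repointed after you audit it. This check also flags `FROM scratch` and `FROM ${BASE}` lines, so you would need to allow those explicitly if you use them.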

Model Weights: The Hidden Treasure No One’s Protecting

Your LLM’s weights are its brain. They’re the trained parameters that determine how it answers questions, writes code, or summarizes documents. If someone tampers with them, your model can start generating biased, dangerous, or malicious outputs without you even noticing.

Most teams download pretrained models from Hugging Face or other public hubs without verifying their integrity. That’s a huge red flag. A 2025 AppSecEngineer audit found that only 32% of publicly available models include verifiable source information. In other words, more often than not you’re running code you can’t prove is safe.

The solution? Always verify model weights with cryptographic hashes. SHA-256 is the industry standard. Before loading any model, check its hash against the one published by the original creator. If you’re using a fine-tuned version, sign it yourself using tools like OpenSSL or Sigstore. Hugging Face’s integrated verification system scored 82/100 in OWASP’s 2025 assessment, far ahead of PyTorch and TensorFlow, because it enforces this practice by default. If your workflow doesn’t require hash verification, you’re not securing your weights. You’re just guessing.
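Here is a minimal sketch of the hash check in Python, using only the standard library. It assumes you already have the publisher’s SHA-256 digest as a hex string. The function names are illustrative and are not part of any library.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in 1 MiB chunks so multi-gigabyte weights never sit fully in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_weights(path: Path, expected_sha256: str) -> None:
    """Raise ValueError if the file's SHA-256 digest differs from the published one."""
    actual = sha256_of_file(path)
    # Normalize the published digest (case, stray whitespace) before a constant-time compare.
    if not hmac.compare_digest(actual, expected_sha256.strip().lower()):
        raise ValueError(f"hash mismatch for {path}: expected {expected_sha256}, got {actual}")
```

Call `verify_weights` before any code deserializes the file. A matching hash proves the file is the one that was published. It does not prove who published it, which is the gap a signature (for example via Sigstore) fills.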

Dependencies: The Silent Killers in Your ML Pipeline

Every LLM deployment pulls in dozens of dependencies: transformers, torch, numpy, fastapi, pydantic, and more. Each one is a potential attack surface. In 2024, attackers compromised a popular LoRA adapter on PyPI to inject backdoors into enterprise AI systems. The attack worked because no one checked whether the package had been tampered with.

That’s why you need a Software Bill of Materials (SBOM). An SBOM is a detailed list of every component in your system, down to the version number. OWASP recommends CycloneDX as the preferred format for LLMs because it supports model-specific metadata like training data source and fine-tuning method. Tools like Dependency-Track or Sonatype Nexus can scan your SBOM and flag known vulnerabilities. Sonatype’s 2025 analysis of 2.1 million LLM components showed that automated SBOM scanning reduced deployment vulnerabilities by 76%.
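The sketch below shows what model-specific metadata can look like. It emits a minimal CycloneDX 1.5 document describing a fine-tuned model. CycloneDX 1.5 added a `machine-learning-model` component type. The two `example:` property names for training data source and fine-tuning method are hypothetical placeholders, not an official CycloneDX taxonomy.

```python
import json


def model_component(name: str, version: str, sha256_hex: str,
                    training_data: str, fine_tuning: str) -> dict:
    """Describe one model artifact as a CycloneDX component."""
    return {
        "type": "machine-learning-model",  # component type introduced in CycloneDX 1.5
        "name": name,
        "version": version,
        "hashes": [{"alg": "SHA-256", "content": sha256_hex}],
        # Hypothetical property names; CycloneDX allows free-form name/value pairs.
        "properties": [
            {"name": "example:training-data-source", "value": training_data},
            {"name": "example:fine-tuning-method", "value": fine_tuning},
        ],
    }


def build_bom(components: list[dict]) -> dict:
    """Wrap components in a minimal CycloneDX 1.5 document."""
    return {
        "bomFormat": "CycloneDX",
        "specVersion": "1.5",
        "version": 1,
        "components": components,
    }


def write_bom(bom: dict, path: str) -> None:
    """Serialize the BOM as indented JSON."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(bom, f, indent=2)
```

In a real pipeline you would append the package components Syft produces to the same `components` list. You would then upload the file to a tool that ingests CycloneDX, such as Dependency-Track, after checking which spec versions your installed release accepts.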

But here’s the catch: 72% of users report false positives. Don’t dismiss the alerts wholesale. Tune your scanner’s thresholds and focus on high-severity CVEs first. And never ignore a dependency that hasn’t been updated in over a year: Wiz’s 2025 incident report found that 57% of breaches stemmed from outdated versions of the transformers library. If it’s old, it’s risky.
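One way to act on the one-year rule is a small staleness check over the release dates of the versions you have pinned. The sketch assumes you have already collected those dates into a dict, for instance from PyPI’s JSON API (`https://pypi.org/pypi/<package>/json`). The package names and dates in any example input are hypothetical.

```python
from datetime import date, timedelta

# Flag anything not updated in over a year.
MAX_AGE = timedelta(days=365)


def stale_dependencies(release_dates: dict[str, date], today: date) -> list[str]:
    """Return packages whose pinned version was released more than MAX_AGE ago, oldest first."""
    stale = [(released, name) for name, released in release_dates.items()
             if today - released > MAX_AGE]
    return [name for _, name in sorted(stale)]
```

Sorting oldest first puts the most neglected dependencies at the top of the triage queue. This is where to start once high-severity CVEs are handled.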

Commercial Tools vs. Open Source: What Works Best?

You don’t have to build everything from scratch. Commercial tools like Sonatype Nexus and Cycode offer automated scanning, AI-powered threat detection, and integration with CI/CD pipelines. Sonatype’s platform identifies 23% more malicious components than traditional scanners by spotting patterns in open-source package behavior. Cycode’s LLM module detects anomalous model behavior in real time, like sudden changes in output patterns that signal poisoning.

But they’re expensive. Sonatype starts at $18,500/year. Cycode’s LLM module adds $12,000 to its base price. For small teams, that’s a hard sell.

Open-source tools like OWASP Dependency-Track and Grype are free. But they require setup. GitHub users report 35-40 hours of configuration per deployment. Documentation is sparse. Support is limited. Still, they work. One FinTech startup, AcmeAI, used open-source tools to catch a compromised LoRA adapter before it reached production, saving an estimated $2.3 million in potential breach costs.

Here’s the bottom line: if you’re deploying LLMs at scale, commercial tools are worth the cost. If you’re experimenting or building prototypes, open-source tools are enough-if you’re willing to invest the time.

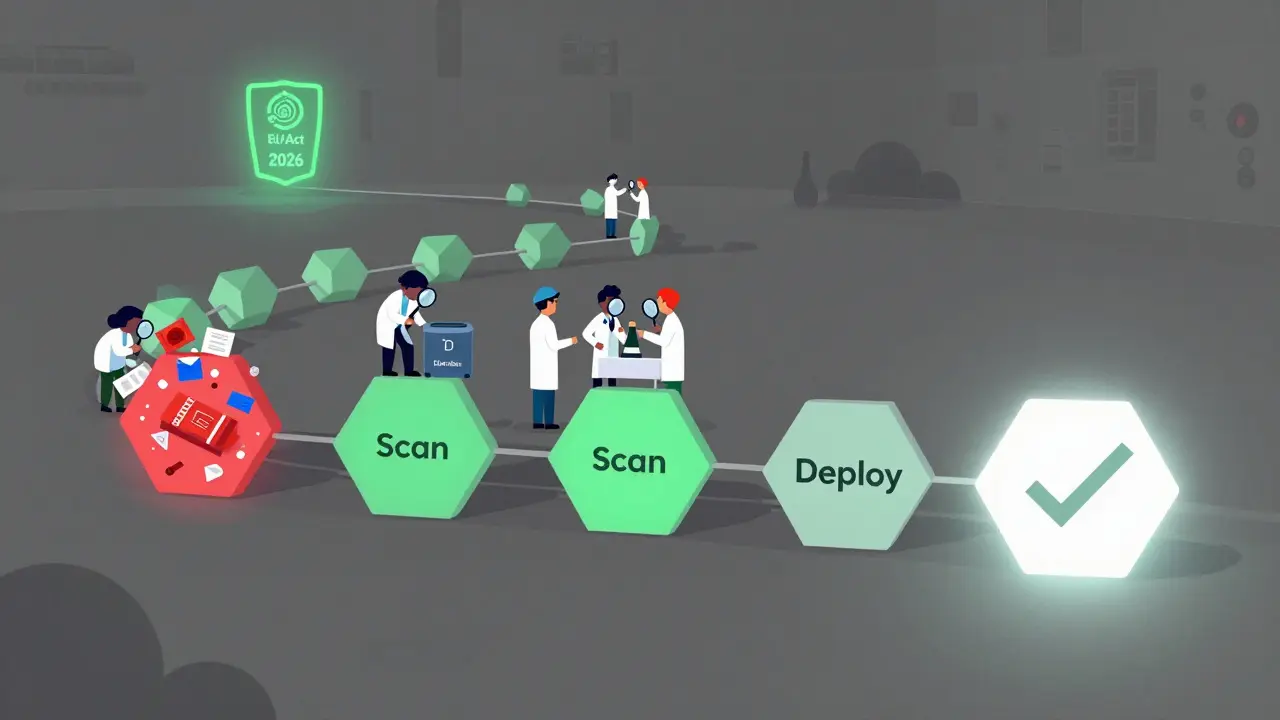

Real-World Implementation: A Step-by-Step Plan

Getting this right doesn’t require a team of 10 security engineers. Here’s what a practical setup looks like:

- Build an SBOM for every deployment using Syft. Save it as a CycloneDX JSON file.

- Scan the SBOM in your CI/CD pipeline. Fail the build if any high-severity CVEs are found.

- Hash your model weights with SHA-256, sign the hash (for example with Sigstore), and store both in your artifact registry.

- Scan your containers before deployment. Use only signed, minimal base images.

- Enable attestations for every inference request. Log the model hash and runner version. Datadog customers who did this saw a 63% drop in compromise incidents.

- Update dependencies weekly. Automate patching with Renovate or Dependabot.
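The scan-and-fail step can be sketched as a small gate over Grype’s JSON output. You could produce that output with something like `syft <image> -o cyclonedx-json > sbom.cdx.json` followed by `grype sbom:./sbom.cdx.json -o json > grype.json`. The field names read below (`matches`, `vulnerability.severity`, `artifact.name`) are assumed from Grype’s JSON report layout, so verify them against the version you run. Grype’s own `--fail-on high` flag covers the simple case. A script like this is only worth it when you need custom policy, such as per-package exceptions.

```python
import json

# Severities that fail the build; lower severities are not reported by this gate.
BLOCKING = {"Critical", "High"}


def blocking_findings(report: dict) -> list[str]:
    """List High/Critical matches from a Grype JSON report (field names assumed)."""
    findings = []
    for match in report.get("matches", []):
        vuln = match.get("vulnerability", {})
        severity = vuln.get("severity")
        if severity in BLOCKING:
            artifact = match.get("artifact", {})
            findings.append(
                f"{vuln.get('id')} ({severity}) in "
                f"{artifact.get('name')} {artifact.get('version')}"
            )
    return findings


def gate(report_path: str) -> int:
    """Print blocking findings and return a CI exit code (1 = fail the build)."""
    with open(report_path, encoding="utf-8") as f:
        findings = blocking_findings(json.load(f))
    for line in findings:
        print(line)
    return 1 if findings else 0
```

A CI step would call `sys.exit(gate("grype.json"))`. Most CI systems treat the non-zero exit code as a failed build.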

This process takes 6-8 weeks to fully integrate. But the cost of not doing it? Far higher. Gartner predicts supply chain security will account for 45% of enterprise LLM budgets by 2026, up from 28% in 2024. That’s not because it’s trendy. It’s because it’s necessary.

What’s Coming Next: AI-Native Security

The next wave of LLM security won’t be about scanning for known vulnerabilities. It’ll be about detecting anomalies in real time. OWASP is finalizing version 2.0 of the LLM Top 10, due in November 2025. It will include new requirements for monitoring model drift and dependency changes. NIST predicts that by 2027, 85% of enterprise LLM deployments will have automated validation at every stage of the ML lifecycle.
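Monitoring dependency changes can start as simply as diffing the SBOMs of two consecutive builds. This sketch assumes CycloneDX-style JSON has already been loaded into dicts. It keys components by name only, which is a simplification. A production diff would key on `purl` or `bom-ref` so that same-named packages from different ecosystems don’t collide.

```python
def component_versions(bom: dict) -> dict[str, str]:
    """Map component name -> version for a CycloneDX-style BOM dict."""
    return {c["name"]: c.get("version", "") for c in bom.get("components", [])}


def diff_boms(old: dict, new: dict) -> dict[str, list[str]]:
    """Report components added, removed, or re-versioned between two builds."""
    before, after = component_versions(old), component_versions(new)
    return {
        "added": sorted(after.keys() - before.keys()),
        "removed": sorted(before.keys() - after.keys()),
        "changed": sorted(
            f"{name}: {before[name]} -> {after[name]}"
            for name in before.keys() & after.keys()
            if before[name] != after[name]
        ),
    }
```

An unexpected entry in `added` or `changed`, one that no pull request accounts for, is exactly the kind of signal worth alerting on.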

Regulations are catching up too. The EU AI Act requires demonstrable supply chain integrity for high-risk AI systems. In the U.S., deadlines tied to Executive Order 14110 need rechecking: that order was rescinded in January 2025, so confirm current federal SBOM requirements before planning around a September 2026 date. If you’re not preparing now, you risk being non-compliant as these rules take effect.

Final Thought: Security Isn’t Optional; It’s Infrastructure

LLM supply chain security isn’t a feature you add. It’s a layer of infrastructure you build from day one. Just as you wouldn’t deploy a web app without firewalls or encryption, you shouldn’t deploy an LLM without verifying its components. The tools exist. The standards are clear. The threats are real. Ignoring this isn’t risk management; it’s negligence.

What’s the biggest mistake companies make with LLM supply chain security?

They focus on securing the model interface while ignoring the 1,200+ dependencies, containers, and weights that make up the actual deployment. Most breaches happen through these hidden components, not through API exploits. The real blind spot isn’t the front door-it’s the entire supply chain behind it.

Can I use open-source tools for LLM supply chain security?

Yes, but only if you have the time and expertise. Tools like Grype, Syft, and Dependency-Track are free and effective. But they require manual setup, tuning, and ongoing maintenance. Most teams spend 35-40 hours configuring them per deployment. For enterprises, commercial tools save time and reduce risk, but open source works fine for small teams or prototypes.

Do I need to sign every model I use from Hugging Face?

If you’re using a model in production, yes. Even if it’s from Hugging Face, you can’t assume it hasn’t been tampered with after upload. Always verify the SHA-256 hash of the model weights against the official source. If the model was fine-tuned by your team, sign it yourself. Trust, but verify.

How often should I scan for vulnerabilities in my LLM stack?

Every time you build or update your model. Automated scanning should be part of your CI/CD pipeline. Don’t wait for monthly audits. New vulnerabilities are discovered daily. A dependency that was safe last week might be compromised today. Continuous scanning isn’t optional; it’s the baseline.

Is container security really that important for LLMs?

Absolutely. Containers are the runtime environment for your model. If they’re compromised, attackers can access memory, steal API keys, or pivot to other systems in your network. Over 89% of enterprises use containers for LLMs, but 62% of public images contain high-severity vulnerabilities. Scanning and signing containers isn’t a luxury; it’s your first line of defense.

What’s the difference between SBOM and a dependency list?

A simple dependency list just says ‘we use transformers 4.35’. An SBOM (Software Bill of Materials) gives you the full picture: exact version, license, author, known vulnerabilities, and even the source URL. For LLMs, SBOM formats like CycloneDX also include model-specific metadata, such as training data source and fine-tuning method, which is critical for auditing and compliance.
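To make the difference concrete, here is a sketch that turns Python’s installed-package metadata into CycloneDX-style component entries. Each entry carries a version, a Package URL (purl), a license, and an author. It is illustrative only: it covers what package metadata records, not known vulnerabilities, which come from a scanner such as Grype.

```python
from importlib.metadata import distributions


def installed_components() -> list[dict]:
    """Build CycloneDX-style component entries from installed Python packages."""
    components = []
    for dist in distributions():
        meta = dist.metadata
        if meta is None:
            continue  # some broken installs expose no metadata at all
        name, version = meta.get("Name"), meta.get("Version")
        if not name or not version:
            continue  # skip installs with incomplete metadata
        component = {
            "type": "library",
            "name": name,
            "version": version,
            # purl for PyPI: lowercase, underscores become dashes (no percent-encoding here).
            "purl": f"pkg:pypi/{name.lower().replace('_', '-')}@{version}",
        }
        license_name = meta.get("License")
        if license_name:
            component["licenses"] = [{"license": {"name": license_name}}]
        author = meta.get("Author")
        if author:
            component["author"] = author
        components.append(component)
    return sorted(components, key=lambda c: c["name"].lower())
```

Compare one entry with the plain line `transformers==4.35.0`. The name and version are the same, but the purl gives tools like Dependency-Track a standard identifier to match against vulnerability databases.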

- Jan 16, 2026

- Collin Pace

- Tags:

- LLM supply chain security

- container security for AI

- model weights integrity

- dependency management AI

- SBOM for LLMs