Tag: LLM stability

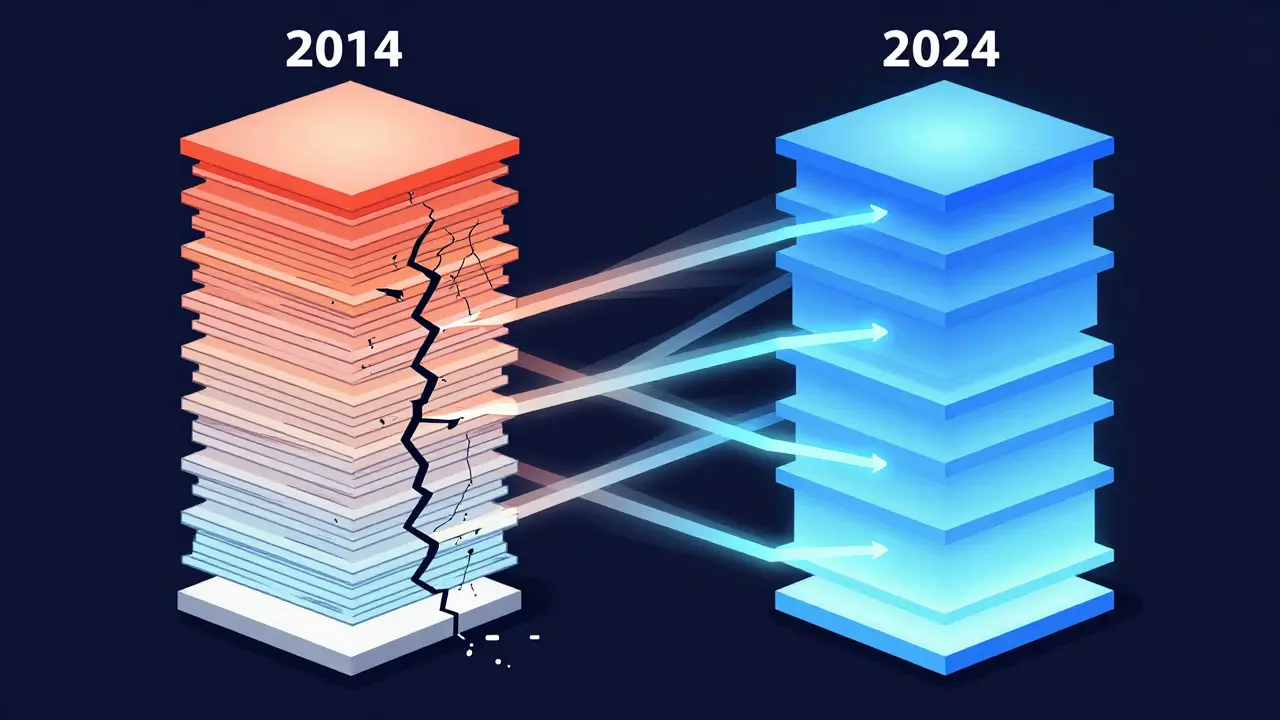

Transformer Pre-Norm vs Post-Norm Architectures: Which One Powers Modern LLMs?

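Pre-Norm and Post-Norm are two ways to place layer normalization in a Transformer block: Pre-Norm normalizes the input to each sublayer, while Post-Norm normalizes after the residual addition. Most modern LLMs use Pre-Norm because it trains stably at 100+ layers. Post-Norm works for small models but tends to become unstable to train at scale.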
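To make the difference concrete, here is a minimal sketch of the two orderings in plain Python. It assumes a simplified `layer_norm` with no learned scale or shift, and `toy_sublayer` is a hypothetical stand-in for attention or a feed-forward layer; none of these names come from a real library.

```python
import math


def layer_norm(x, eps=1e-5):
    """Normalize a vector to zero mean and (near) unit variance.

    Simplified for illustration: no learned gain or bias.
    """
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def add(a, b):
    """Element-wise sum of two equal-length vectors (the residual addition)."""
    return [u + v for u, v in zip(a, b)]


def post_norm_block(x, sublayer):
    # Post-Norm: add the residual first, then normalize the sum.
    # The skip path itself passes through the normalization.
    return layer_norm(add(x, sublayer(x)))


def pre_norm_block(x, sublayer):
    # Pre-Norm: normalize only the sublayer's input.
    # The skip path carries x through unchanged.
    return add(x, sublayer(layer_norm(x)))


def toy_sublayer(x):
    # Hypothetical stand-in for attention or a feed-forward layer.
    return [0.5 * v for v in x]


def zero_sublayer(x):
    # A sublayer that contributes nothing, to expose the skip path.
    return [0.0 for _ in x]


x = [1.0, 2.0, 4.0]
post = post_norm_block(x, toy_sublayer)
pre = pre_norm_block(x, toy_sublayer)

# With a zero sublayer, Pre-Norm returns its input untouched,
# while Post-Norm still rescales it.
pre_identity = pre_norm_block(x, zero_sublayer)
post_identity = post_norm_block(x, zero_sublayer)
```

In this sketch the Pre-Norm block leaves an unmodified path from input to output, which is the property usually credited for its stability in deep stacks; the Post-Norm block renormalizes that path at every layer.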

- Oct 20, 2025

- Collin Pace
