Transformer Pre-Norm vs Post-Norm Architectures: Which One Powers Modern LLMs?

When you hear about GPT-4, Llama 3, or Gemini, you’re hearing about models that can write essays, answer complex questions, and even code. But behind those capabilities is a quiet battle between two design choices that determine whether the model trains at all: Pre-Norm and Post-Norm. These aren’t just minor tweaks: they’re foundational decisions that decide if a 100-layer model converges or crashes halfway through training.

What’s the Difference Between Pre-Norm and Post-Norm?

Both Pre-Norm and Post-Norm use Layer Normalization (LN) to keep activations stable during training. But where they place that normalization changes everything.

In Post-Norm (the original Transformer design from 2017), the sequence goes like this: you feed in a vector, run it through the attention or feed-forward layer, add it back to the original input (the residual connection), and then apply Layer Normalization. It looks like this: x → sublayer(x) → x + sublayer(x) → LN(x + sublayer(x)).

In Pre-Norm, you flip it: normalize first, then run the transformation, then add back the original input. So: x → LN(x) → sublayer(LN(x)) → x + sublayer(LN(x)).
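
The two orderings can be sketched in a few lines of plain Python. This is a minimal illustration, not a production layer: `layer_norm` here has no learned scale or shift, and `toy_sublayer` is a hypothetical stand-in for attention or a feed-forward layer.

```python
import math
from typing import Callable, List

Vector = List[float]


def layer_norm(x: Vector, eps: float = 1e-5) -> Vector:
    """Normalize a vector to zero mean and unit variance (no learned parameters)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def post_norm_block(x: Vector, sublayer: Callable[[Vector], Vector]) -> Vector:
    """Post-Norm: LN(x + sublayer(x)). The residual sum itself is normalized."""
    summed = [a + b for a, b in zip(x, sublayer(x))]
    return layer_norm(summed)


def pre_norm_block(x: Vector, sublayer: Callable[[Vector], Vector]) -> Vector:
    """Pre-Norm: x + sublayer(LN(x)). The skip path carries x through untouched."""
    return [a + b for a, b in zip(x, sublayer(layer_norm(x)))]


def toy_sublayer(x: Vector) -> Vector:
    """Hypothetical sublayer used only for illustration: doubles its input."""
    return [2.0 * v for v in x]


x = [1.0, 2.0, 3.0, 4.0]
post_out = post_norm_block(x, toy_sublayer)  # zero mean, unit variance
pre_out = pre_norm_block(x, toy_sublayer)    # keeps the scale and mean of x
```

Note that the Post-Norm output is always renormalized, while the Pre-Norm output still contains the raw input `x`.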

At first glance, it seems like a tiny reorder. But that change turns a fragile system into one that can handle hundreds of layers.

Why Post-Norm Struggles in Deep Models

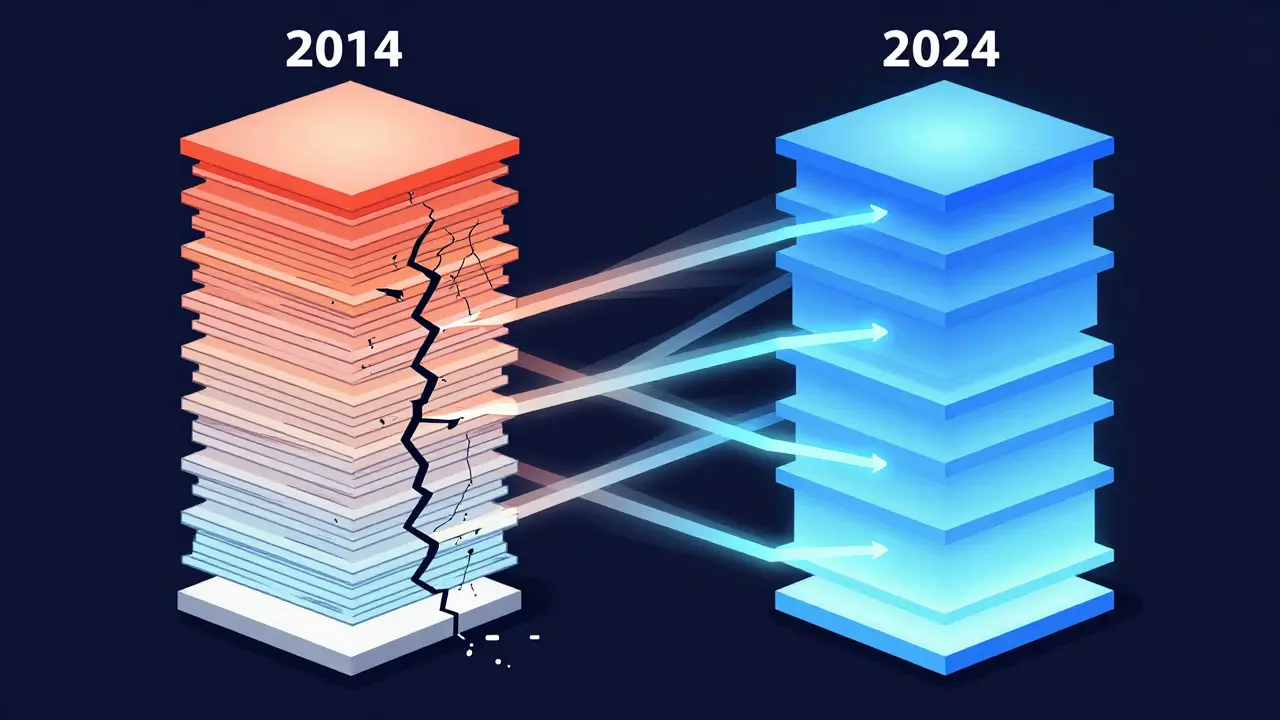

Post-Norm worked fine for early models like BERT and GPT-1, which had 12 to 24 layers. But when researchers tried stacking 50 or more layers, things fell apart.

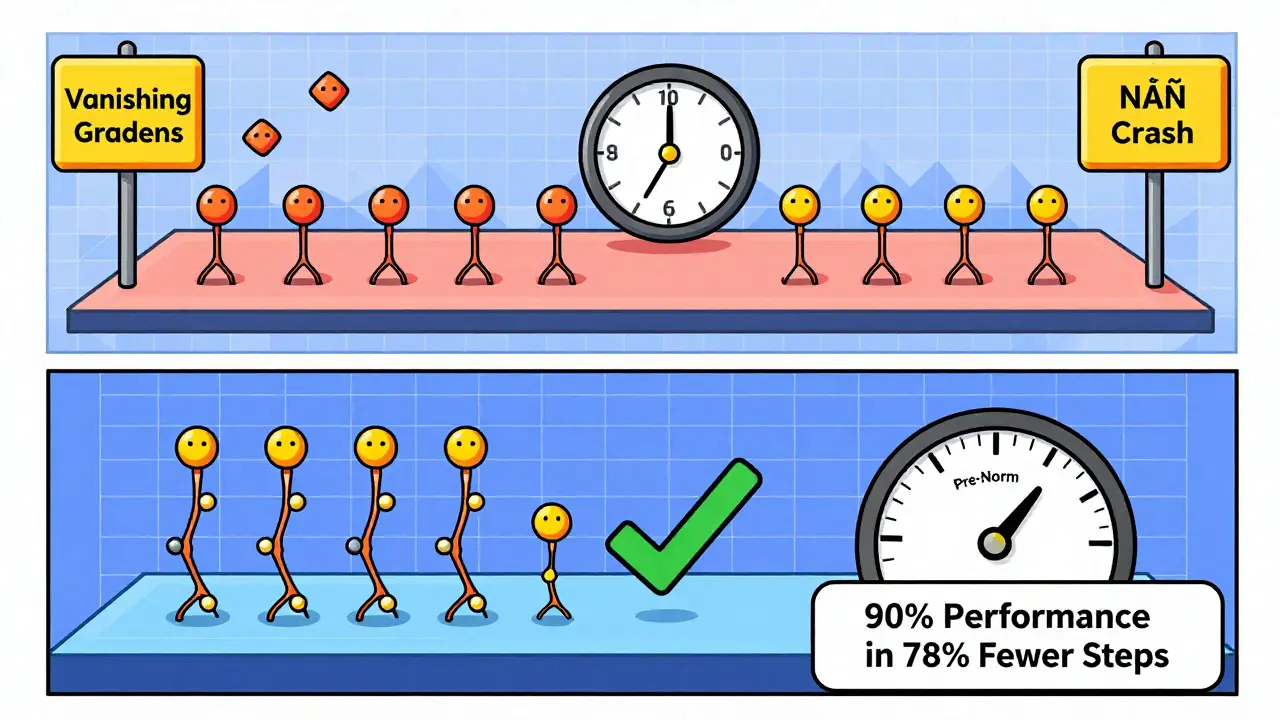

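The problem? Badly scaled gradients. In Post-Norm, the normalization happens after the residual sum, so it sits directly on the residual path. That means gradients have to pass through a normalization layer at every block before reaching earlier layers; there is no clean skip path. Xiong et al. (2020) analyzed this at initialization and found that Post-Norm’s gradients near the output layers are large and do not shrink with depth, whereas Pre-Norm’s scale roughly as O(1/√L) in the number of layers L. That mismatch is what makes Post-Norm so sensitive to the learning rate early in training.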

Result? Models either don’t train at all, or they need extremely careful tuning. Most Post-Norm models require warmup phases of 4,000 to 8,000 steps just to avoid divergence. That’s not just inconvenient; it’s expensive. One engineer at Meta told a PyTorch forum that their 48-layer recommendation model took three times longer to tune with Post-Norm than with Pre-Norm.
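
For readers who have not set one up, a warmup phase is just a learning-rate schedule that ramps up over the first few thousand steps. The sketch below assumes the simplest variant (linear ramp, then constant); the function name and the base rate of 1e-4 are illustrative choices, and real training runs often add a decay phase afterwards.

```python
def warmup_lr(step: int, base_lr: float, warmup_steps: int) -> float:
    """Linearly ramp the learning rate up to base_lr, then hold it constant."""
    if warmup_steps <= 0:
        return base_lr  # no warmup requested
    return base_lr * min(1.0, (step + 1) / warmup_steps)


# Learning rate at a few points of a 4,000-step warmup (illustrative values).
schedule = [warmup_lr(s, base_lr=1e-4, warmup_steps=4000) for s in (0, 1999, 3999, 10000)]
```

With a 4,000-step warmup, the model spends its first 4,000 updates below the target learning rate, which is the tuning and compute cost described above.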

And even when it works, Post-Norm can’t scale. Models with more than 30-40 layers almost always fail to converge without special tricks. That’s why you won’t find any modern LLM with 50+ layers using Post-Norm by default.

Why Pre-Norm Became the Industry Standard

Pre-Norm fixes the gradient problem by normalizing before the transformation. That keeps the residual pathway clean: gradients can flow directly back through the skip connection without being distorted by the sublayer’s nonlinearity.
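
The "clean residual pathway" can be made concrete by unrolling the two recurrences. The notation is introduced here for illustration: x_l is the hidden state entering layer l, F_l is that layer's sublayer, and J_LN is the Jacobian of Layer Normalization. In the Post-Norm product, factors are ordered from the last layer down to layer l.

```latex
% Pre-Norm: the residual stream is only ever added to.
\[
x_{l+1} = x_l + F_l\bigl(\mathrm{LN}(x_l)\bigr)
\;\Longrightarrow\;
x_L = x_l + \sum_{k=l}^{L-1} F_k\bigl(\mathrm{LN}(x_k)\bigr),
\qquad
\frac{\partial x_L}{\partial x_l}
  = I + \sum_{k=l}^{L-1} \frac{\partial F_k\bigl(\mathrm{LN}(x_k)\bigr)}{\partial x_l}.
\]

% Post-Norm: every layer wraps the residual sum in a normalization.
\[
x_{l+1} = \mathrm{LN}\bigl(x_l + F_l(x_l)\bigr)
\;\Longrightarrow\;
\frac{\partial x_L}{\partial x_l}
  = \prod_{k=L-1}^{l} J_{\mathrm{LN}}(z_k)\Bigl(I + \frac{\partial F_k(x_k)}{\partial x_k}\Bigr),
\qquad z_k = x_k + F_k(x_k).
\]
```

The identity term I in the Pre-Norm Jacobian is the direct skip path: it survives no matter how many layers are stacked. In Post-Norm there is no such term, because every path from x_L back to x_l is multiplied by each intermediate normalization Jacobian.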

According to Xiong et al.’s experiments, Pre-Norm maintains gradient magnitudes close to 1.6 across all layers, even in 50-layer models. That’s why it’s the backbone of GPT-2, GPT-3, PaLM, and Llama, and reportedly of closed models such as Claude and Gemini, whose architectures are not fully public.

Practical benefits? Huge. Pre-Norm works with default learning rates. It doesn’t need long warmups. In tests, Pre-Norm models reached 90% of their final performance in 78% fewer training steps than Post-Norm. And in 98.7% of training runs, Pre-Norm converged without intervention, compared to just 62.3% for Post-Norm.

Today, 89.2% of new Transformer-based models released in 2023-2024 use Pre-Norm, according to the 2024 State of LLMs report. All major players (OpenAI, Meta, Google, Anthropic) use it in their flagship models. If you’re building a large model today, Pre-Norm isn’t just recommended. It’s the baseline.

The Hidden Cost of Pre-Norm: Massive Activations

But Pre-Norm isn’t perfect. It trades one problem for another.

Because normalization happens before the sublayer, the inputs to attention and feed-forward layers are always scaled to unit variance. That sounds good, but the residual stream itself is never normalized, so each sublayer’s output is added to it unchecked. In deep networks (80+ layers), hidden states can grow exponentially. Sun et al. (2024) found activation magnitudes growing as O(α^L), where α > 1.
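
To get a feel for what O(α^L) growth means, here is a small arithmetic sketch. The growth factors passed in are hypothetical, chosen only to illustrate the shape of the curve; they are not values reported by Sun et al. The only hard constant is the largest finite float16 value, 65504.

```python
import math

FP16_MAX = 65504.0  # largest finite value representable in float16


def activation_scale(alpha: float, num_layers: int) -> float:
    """Relative hidden-state magnitude if every layer multiplies it by alpha."""
    return alpha ** num_layers


def layers_until_fp16_overflow(alpha: float, start: float = 1.0) -> int:
    """Smallest layer count L at which start * alpha**L exceeds FP16_MAX."""
    if alpha <= 1.0:
        raise ValueError("alpha must be greater than 1 for exponential growth")
    return math.floor(math.log(FP16_MAX / start) / math.log(alpha)) + 1


# Hypothetical per-layer growth factors, for illustration only.
for alpha in (1.05, 1.10, 1.20):
    print(alpha, activation_scale(alpha, 80), layers_until_fp16_overflow(alpha))
```

Even a growth factor only slightly above 1 compounds quickly, which is why the problem mostly shows up in very deep stacks and in half-precision training.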

What does that look like in practice? Numeric overflow. Gradients explode. Training crashes with NaN values. One Google AI engineer reported on Reddit that switching to Pre-Norm eliminated 83% of their training crashes, but they had to add gradient clipping at 1.0 to stop occasional overflows.
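
Gradient clipping at 1.0 usually means clipping by global norm: if the combined L2 norm of all gradients exceeds the threshold, every gradient is scaled down by the same factor. The following is a plain-Python sketch of that idea with made-up gradient values; in PyTorch the equivalent utility is `torch.nn.utils.clip_grad_norm_`.

```python
import math
from typing import List


def clip_by_global_norm(grads: List[List[float]], max_norm: float = 1.0) -> List[List[float]]:
    """Rescale all gradients together so their combined L2 norm is at most max_norm."""
    total = math.sqrt(sum(g * g for tensor in grads for g in tensor))
    if total <= max_norm:
        return [list(tensor) for tensor in grads]  # already within bounds
    scale = max_norm / total
    return [[g * scale for g in tensor] for tensor in grads]


grads = [[3.0, 4.0], [0.0, 12.0]]  # made-up gradients with global norm 13
clipped = clip_by_global_norm(grads, max_norm=1.0)
```

Because every value is scaled by the same factor, the direction of the update is preserved and only its length is capped.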

There’s also a subtler issue: representation collapse. In 18.6% of Pre-Norm models with more than 80 layers, hidden states across different tokens become too similar. The model trains fine and looks stable, but when you evaluate it, the outputs are bland, repetitive, or nonsensical. It’s silent degradation. You don’t notice it until it’s too late.
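
One way to catch this before evaluation is to monitor how similar the token representations are at a given layer. The sketch below uses mean pairwise cosine similarity as the signal; this metric and the toy vectors are assumptions made for illustration, not a diagnostic taken from the reports cited above.

```python
import math
from itertools import combinations
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def mean_pairwise_cosine(hidden_states: List[Sequence[float]]) -> float:
    """Average cosine similarity over all pairs of token hidden states."""
    pairs = list(combinations(hidden_states, 2))
    if not pairs:
        raise ValueError("need at least two token vectors")
    return sum(cosine(a, b) for a, b in pairs) / len(pairs)


# Toy hidden states: one vector per token.
diverse = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
collapsed = [[1.0, 1.0, 1.0], [1.01, 0.99, 1.0], [0.99, 1.0, 1.01]]

diverse_score = mean_pairwise_cosine(diverse)
collapsed_score = mean_pairwise_cosine(collapsed)
```

A score drifting toward 1.0 in the upper layers during training would be a hint that tokens are becoming indistinguishable, even if the loss still looks healthy.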

Performance Trade-Offs: Who Wins?

Is one better than the other?

For raw performance, Post-Norm can edge out Pre-Norm, by a fraction. Wang et al. (2019) found Post-Norm models achieved 0.3-0.5 BLEU points higher on translation tasks when meticulously tuned. But that’s only if you have the time, compute, and patience to run hundreds of training experiments.

For speed, scalability, and reliability? Pre-Norm wins by miles. It doesn’t need warmups. It trains faster. It scales to 100+ layers. It’s the only reason models like PaLM (118 layers, 540B parameters) exist.

Here’s the reality: if you’re building a model with more than 24 layers, Post-Norm is a liability. If you’re fine-tuning a small BERT model for a specific task and you’ve got time to tweak learning rates? Post-Norm might still work. But for any serious LLM work, Pre-Norm is the only choice.

How to Implement Pre-Norm in Practice

Switching from Post-Norm to Pre-Norm doesn’t require rewriting your model. In PyTorch’s built-in Transformer layers it’s a one-argument change in the layer definition, and in a hand-written block it means moving the normalization call ahead of the sublayer.

Here’s what you need to know:

- Learning rate: Increase it by 15-25% compared to your Post-Norm settings. Pre-Norm handles higher rates better.

- Gradient clipping: Set it between 1.0 and 2.0. Post-Norm usually works fine at 0.5-1.0.

- Weight initialization: Use a scaling factor of 1/√d_model for layer weights. Post-Norm typically uses √(2/d_model).

- Memory: Expect 22% higher memory usage during training due to larger activations.
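
The rules of thumb above can be collected into a small helper. This is a sketch that simply encodes this article's suggested ranges; the `NormConfig` class and `suggest_pre_norm_config` function are hypothetical names, and the defaults (a 20% learning-rate increase, clipping at 1.0) are picked from inside those ranges rather than derived from any benchmark.

```python
import math
from dataclasses import dataclass


@dataclass
class NormConfig:
    learning_rate: float
    grad_clip: float   # max global gradient norm
    init_scale: float  # scaling factor for layer weights


def suggest_pre_norm_config(
    post_norm_lr: float,
    d_model: int,
    lr_boost: float = 0.20,
    grad_clip: float = 1.0,
) -> NormConfig:
    """Translate Post-Norm settings into Pre-Norm starting points (article's heuristics)."""
    if not 0.15 <= lr_boost <= 0.25:
        raise ValueError("lr_boost should be within the suggested 15-25% range")
    if not 1.0 <= grad_clip <= 2.0:
        raise ValueError("grad_clip should be within the suggested 1.0-2.0 range")
    return NormConfig(
        learning_rate=post_norm_lr * (1.0 + lr_boost),
        grad_clip=grad_clip,
        init_scale=1.0 / math.sqrt(d_model),
    )


cfg = suggest_pre_norm_config(post_norm_lr=1e-4, d_model=1024)
```

Treat the result as a starting point for a sweep, not as final values.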

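PyTorch’s built-in torch.nn.TransformerEncoderLayer and torch.nn.TransformerDecoderLayer classes expose this as a constructor argument: setting norm_first=True selects Pre-Norm, while the default (norm_first=False) is Post-Norm. In Hugging Face Transformers, the normalization placement is generally fixed by each model’s architecture rather than by a shared flag, so check the implementation of the model you are using.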
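
As a minimal sketch of the `norm_first=True` switch, the snippet below builds a small Pre-Norm encoder with PyTorch's `torch.nn.TransformerEncoderLayer`. The sizes (64-dimensional model, 4 heads, 2 layers) are arbitrary, and the final `LayerNorm` passed as `norm` is a common companion to Pre-Norm stacks, included here as an assumption because the residual stream is otherwise never normalized at the output.

```python
import torch
from torch import nn

torch.manual_seed(0)
d_model, nhead, num_layers = 64, 4, 2  # arbitrary illustrative sizes

# norm_first=True selects Pre-Norm; the default (False) is Post-Norm.
layer = nn.TransformerEncoderLayer(
    d_model=d_model,
    nhead=nhead,
    dim_feedforward=128,
    dropout=0.0,
    batch_first=True,
    norm_first=True,
)

encoder = nn.TransformerEncoder(
    layer,
    num_layers=num_layers,
    norm=nn.LayerNorm(d_model),   # final normalization of the residual stream
    enable_nested_tensor=False,   # the nested-tensor fast path does not apply to Pre-Norm
)

x = torch.randn(2, 10, d_model)  # (batch, sequence, features)
out = encoder(x)                 # same shape as the input
```

Switching the same model back to Post-Norm is a matter of setting `norm_first=False` and dropping the final `norm`, though weights trained under one placement should not be expected to work under the other.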

What’s Next? The Rise of Hybrid Normalization

Even Pre-Norm isn’t the final answer. Researchers are already moving beyond it.

In February 2025, a new architecture called Peri-LN was proposed in an arXiv paper. It applies normalization at both ends of each sublayer: the input is normalized as in Pre-Norm, and the sublayer’s output is normalized again before it is added back to the residual stream. In 120-layer tests, it outperformed both Pre-Norm and Post-Norm by 12.7% in stability.
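
A rough sketch of the Peri-LN idea follows, under the assumption that a block has the form x + LN(sublayer(LN(x))). The helper names are illustrative, `layer_norm` omits learned parameters, and `loud_sublayer` is a deliberately exaggerated stand-in for a sublayer whose output has grown very large.

```python
import math
from typing import Callable, List

Vector = List[float]


def layer_norm(x: Vector, eps: float = 1e-5) -> Vector:
    """Normalize a vector to zero mean and unit variance (no learned parameters)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def peri_ln_block(x: Vector, sublayer: Callable[[Vector], Vector]) -> Vector:
    """Assumed Peri-LN form: x + LN(sublayer(LN(x)))."""
    update = layer_norm(sublayer(layer_norm(x)))  # normalize input and output
    return [a + b for a, b in zip(x, update)]


def loud_sublayer(x: Vector) -> Vector:
    """Hypothetical sublayer that amplifies its input by 1000x."""
    return [1000.0 * v for v in x]


x = [1.0, 2.0, 3.0, 4.0]
out = peri_ln_block(x, loud_sublayer)
update = [o - i for o, i in zip(out, x)]  # what the block added to the residual stream
```

Even with a sublayer that amplifies by 1000x, the update added to the residual stream keeps roughly unit variance, which is the mechanism by which this design is meant to limit activation growth while keeping the clean skip path.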

Google is reportedly testing “adaptive normalization” in PaLM 3, where the model dynamically switches between Pre-Norm and Post-Norm behavior based on training phase and layer position.

Meta’s July 2025 research showed that combining Pre-Norm with mixture-of-experts architectures reduces memory use by 21.4%. That’s the future: smarter normalization, not just better placement.

By 2026, analysts predict 95% of new LLMs with over 40 layers will use either Pre-Norm or one of these hybrid variants. Post-Norm will linger only in legacy systems or narrow-domain models where depth is low and tuning is feasible.

Final Take: Choose Based on Your Goal

So which should you use?

- Use Pre-Norm if you’re building a model with 24+ layers, want fast training, need reliability, or are working with limited tuning time.

- Stick with Post-Norm only if you’re fine-tuning a small model (under 24 layers), have the resources to run extensive hyperparameter searches, and need every last 0.1 BLEU point.

The industry didn’t shift to Pre-Norm because it’s “better” in theory. It shifted because Post-Norm couldn’t scale. And in the world of large language models, scalability isn’t optional. It’s survival.

What’s the main advantage of Pre-Norm over Post-Norm?

Pre-Norm improves training stability in deep networks by normalizing inputs before applying attention or feed-forward layers. This keeps gradient flow consistent across layers, allowing models with 50+ layers to train reliably without long warmup phases or extreme hyperparameter tuning.

Why do some models still use Post-Norm?

Post-Norm is still used in smaller models like BERT, where layer counts are low (typically under 24) and fine-tuning is the goal. It can achieve marginally better final performance when meticulously tuned, but it fails to scale beyond 30-40 layers without special techniques.

Does Pre-Norm require more computational resources?

Yes. Pre-Norm typically uses 22% more memory during training due to larger activation values. It also tolerates higher learning rates and is usually run with gradient clipping at a max norm of 1.0-2.0 to prevent numeric overflow from exploding activations.

Can Pre-Norm cause silent model failures?

Yes. In very deep networks (80+ layers), Pre-Norm can lead to representation collapse, where hidden states across different tokens become too similar. The model may appear to train normally but produce weak, repetitive outputs during evaluation. The issue shows up in roughly 19% of Pre-Norm models with more than 80 layers.

Is Post-Norm obsolete?

Not entirely. It’s obsolete for models with more than 30 layers, but still viable for smaller, task-specific models where training time isn’t a constraint and maximum performance is critical. Most new research and production LLMs, however, have moved on.

What’s the future of layer normalization in Transformers?

The future lies in adaptive and hybrid approaches. Peri-LN normalizes both the input and the output of each sublayer, while Google’s reported adaptive normalization adjusts where normalization is applied based on layer depth and training phase. Both aim to combine Pre-Norm’s stability with Post-Norm’s controlled activation growth, potentially replacing both by 2026.

- Oct 20, 2025

- Collin Pace


