Archive: 2025/11

How to Reduce Memory Footprint for Hosting Multiple Large Language Models

Learn how to reduce memory footprint when hosting multiple large language models using quantization, model parallelism, and hybrid techniques. Cut costs by 65% and run 3-5 models on a single GPU.

- Nov 29, 2025

- Collin Pace

- 7


Citation and Attribution in RAG Outputs: How to Build Trustworthy LLM Responses

Citation and attribution in RAG systems are essential for trustworthy AI responses. Learn how to implement accurate, verifiable citations using real-world tools, data standards, and best practices from 2025 enterprise deployments.

- Nov 29, 2025

- Collin Pace

- 6


Designing Multimodal Generative AI Applications: Input Strategies and Output Formats

Multimodal generative AI lets apps understand and respond to text, images, audio, and video together. Learn how to design inputs that work, choose the right outputs, and use models like GPT-4o and Gemini effectively.

- Nov 24, 2025

- Collin Pace

- 7


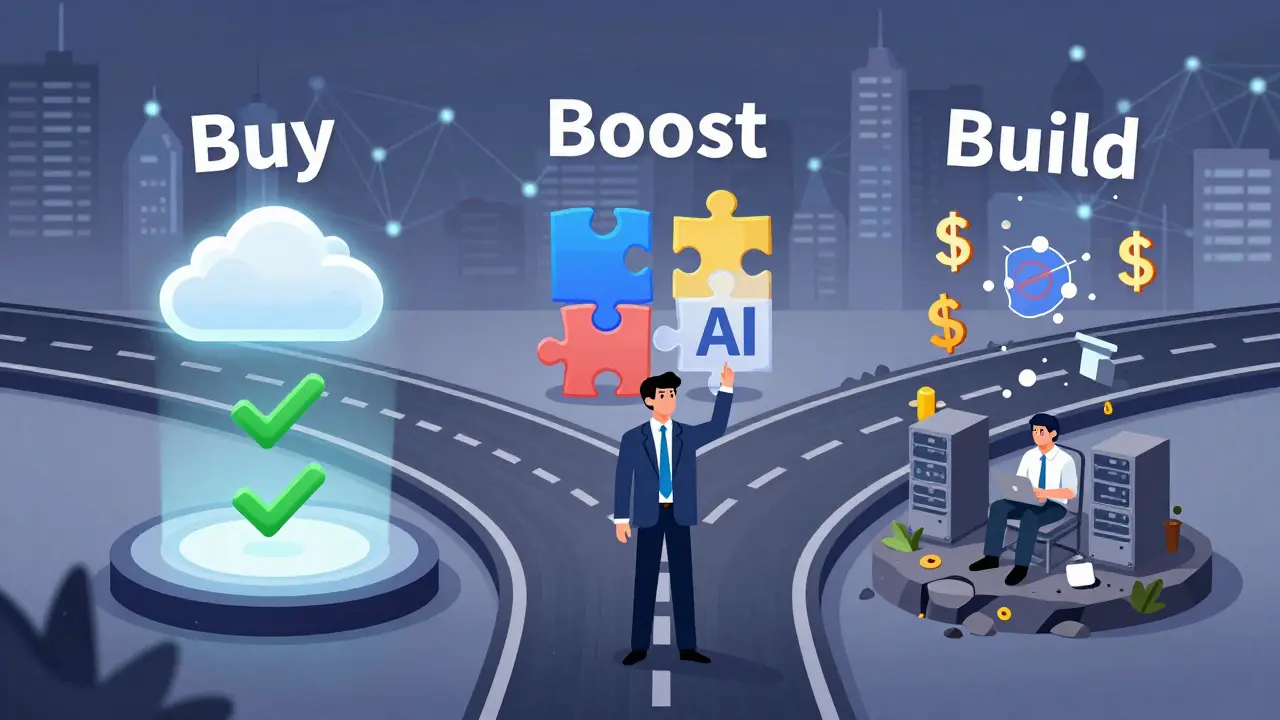

Build vs Buy for Generative AI Platforms: Decision Framework for CIOs

CIOs must choose between building and buying generative AI platforms based on cost, speed, risk, and use case. Learn the three strategies (buy, boost, build) and which one fits your organization.

- Nov 14, 2025

- Collin Pace

- 8
