Contextual Representations in Large Language Models: How LLMs Understand Meaning

When you ask a language model what "bank" means, it doesn’t just pick one definition. It looks at the whole sentence. Is it "I deposited money at the bank" or "We sat on the river bank"? The difference isn’t in the word itself - it’s in what’s around it. That’s the core of contextual representations in large language models. Unlike older systems that treated every word like a fixed label, modern LLMs build meaning on the fly, using the surrounding words to figure out what’s really being said.

How Context Changes Everything

Before transformers, models used static embeddings like Word2Vec or GloVe. These assigned each word a single vector - so "bank" always looked the same, whether it was about money or a river. That didn’t work well for real language, where words shift meaning constantly. A 2017 paper called "Attention Is All You Need" changed that. It introduced the transformer architecture, which lets models weigh the importance of each word in a sentence dynamically. Suddenly, "bank" could mean one thing in one sentence and something totally different in the next - and the model could tell which was which.

This isn’t just about single words. It’s about entire conversations. If you say, "John went to the store. He bought milk," the model doesn’t treat "He" as an isolated pronoun. It links "He" back to "John," the subject of the previous sentence. That’s called anaphora resolution. It happens across dozens or hundreds of words, without any hand-written rules.

The Mechanics Behind Understanding

At the heart of this are three key pieces: embeddings, attention, and layers.

First, embeddings. Every word, or even part of a word, gets turned into a long list of numbers - a vector. The largest GPT-3 model uses 12,288 numbers for each token. That’s not random. These numbers encode meaning, grammar, tone, and even implied relationships. The model doesn’t "know" what a bank is - it knows the patterns of numbers that usually appear around financial institutions versus riverbanks.

Then comes attention. The model doesn’t read one word at a time like a person. It looks at all the words available to it at once and assigns weights. If you say, "The cat chased the mouse because it was hungry," the attention mechanism helps work out whether "it" refers to the cat or the mouse by weighing which one is more likely to be hungry given the whole sentence. It does this with multiple "heads," each of which can focus on a different kind of relationship - subject-verb, pronoun-reference, cause-effect.

These attention scores feed into transformer layers. Modern LLMs have 24 to 128 of these layers. Each one refines the representation. The first layer might catch basic grammar. The tenth might track character roles. The 80th might infer intent. By the end, the model has built a rich, layered understanding of what’s being said - not just what’s written.

Autoregressive vs. Masked: Two Ways to Learn Context

Not all LLMs learn context the same way. There are two main types: autoregressive and masked.

Autoregressive models, like GPT-4 and Llama 3, predict the next word. They’re built for generation. When you type, "I love eating," they guess what comes next - "pizza," "ice cream," "salads." They only look backward. That makes them great for writing stories, replies, or code - anything that flows forward.

Masked models, like BERT, do the opposite. They’re trained to fill in blanks. You give them: "I love eating [MASK]." They guess the missing word. But here’s the trick: they look at both sides. They see what’s before and after the blank. That makes them better at understanding questions, extracting facts, or judging sentiment. They’re less fluent in writing, but sharper at comprehension.

This difference matters in practice. If you’re building a chatbot that needs to keep a conversation going, you want autoregressive. If you’re building a tool that reads legal docs and answers questions, you might prefer a masked model - or a hybrid.
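One way to picture "only look backward" versus "look at both sides" is as an attention mask: a grid that says which positions each token is allowed to see. The sketch below is a toy illustration in plain Python, not code from any model. The function names and the example sentence (with "for lunch" added after the blank) are made up for this example.

```python
def causal_mask(n):
    """Autoregressive style: token i may see itself and earlier positions only."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def bidirectional_mask(n):
    """Masked-model style: every token may see every position."""
    return [[1] * n for _ in range(n)]


def visible_tokens(tokens, mask, position):
    """List the tokens that the token at `position` is allowed to attend to."""
    return [tok for tok, allowed in zip(tokens, mask[position]) if allowed]


tokens = ["I", "love", "eating", "[MASK]", "for", "lunch"]
n = len(tokens)

# What the model can use when working on position 3 (the blank).
print("causal:       ", visible_tokens(tokens, causal_mask(n), 3))
print("bidirectional:", visible_tokens(tokens, bidirectional_mask(n), 3))
```

With the causal mask, the words after the blank are hidden. With the bidirectional mask, "for lunch" is available as a clue, which is the advantage masked models have for comprehension tasks.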

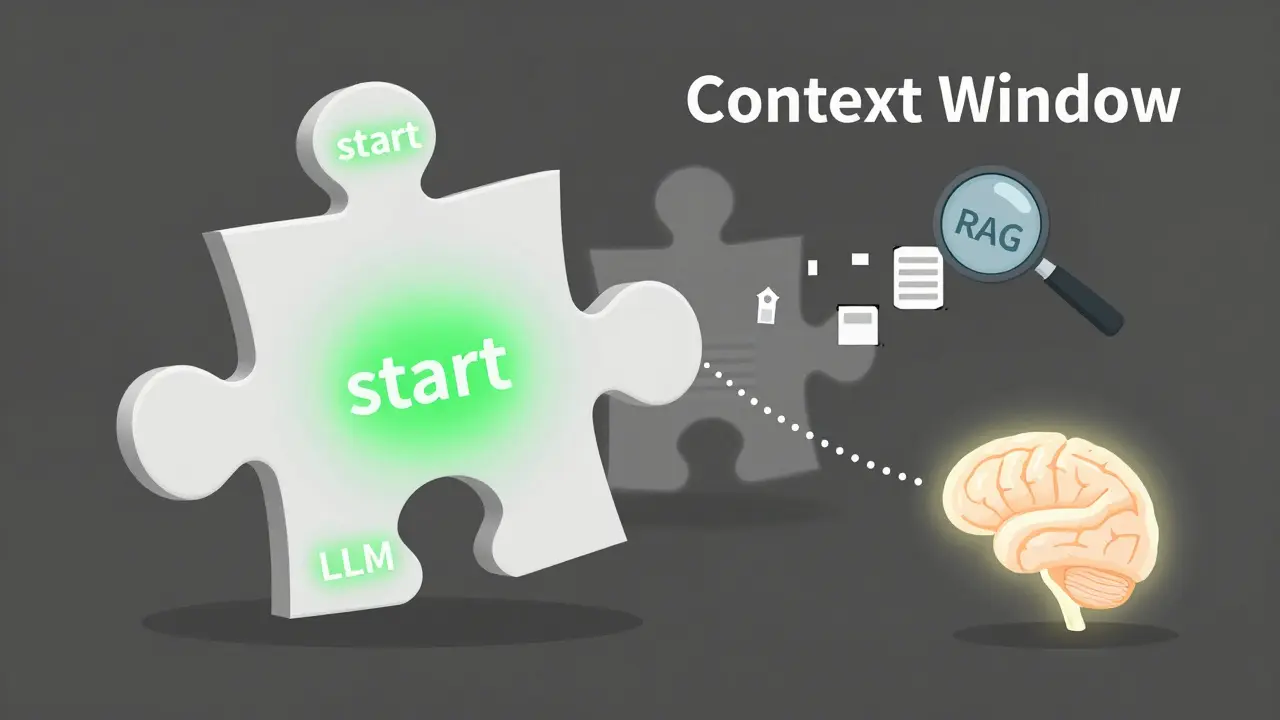

Context Windows: The Memory Limit

No matter how smart the model, it can’t remember everything. Every LLM has a context window - the maximum number of tokens (words or pieces of words) it can process at once. GPT-3.5 handles 4,096 tokens - about 3,000 words. GPT-4 Turbo goes up to 128,000. Claude 3 hits 200,000. That’s like reading a 600-page book in one go.

But here’s the catch: performance drops if you push too far. Research shows models get worse at recalling details in the middle of long texts. It’s called the "lost-in-the-middle" problem. Information at the start or end gets more attention. The middle? Often overlooked.

That’s why enterprise users struggle. A 2023 survey found 68% of companies using LLMs for document analysis had to build custom chunking systems just to work around this. Legal teams, researchers, and developers all face the same problem: the model doesn’t "know" what’s outside its window. Everything beyond it? As far as the model is concerned, it doesn’t exist.

What LLMs Really Understand - And What They Don’t

Here’s the big question: do LLMs understand meaning, or are they just really good at pattern matching?

They can track character relationships in a 15-scene play. They can remember a user’s preferred tone across 20 chat turns. They can work out from context whether "I saw her duck" means someone saw a bird or saw a person lowering their head. That looks like understanding.

But experts like Dr. Emily Bender warn: this isn’t grounded understanding. LLMs don’t experience the world. They’ve never felt hunger, seen a river, or paid a bill. They’ve seen trillions of sentences where "bank" was used in financial contexts. They learned the pattern. They didn’t learn the concept.

That’s why they hallucinate. When given a long contract near its 200,000-token limit, Claude 3 might invent a clause that wasn’t there - because it’s predicting the most statistically likely continuation, not recalling what was written. It’s not lying. It’s just guessing.

Real-World Use and Pain Points

In practice, contextual understanding is a game-changer - but only if you work around its limits.

Reddit users praise GPT-4 for keeping up with technical threads across 20+ messages. A developer asked about a Python error, followed up with a code snippet, then added a library version - and GPT-4 connected all three without being reminded.

But on Trustpilot, users complain: "After 8 turns, it forgets what I said." That’s the context window biting back. Enterprise chatbots use summarization to reset memory every few exchanges. That helps - but it’s a band-aid.

The fix? Retrieval-Augmented Generation (RAG). Instead of stuffing everything into the context window, you pull in only the relevant parts from a database. Need to answer a question about a 500-page manual? The system finds the 3 pages that matter, feeds them in, and answers from there. 65% of long-context LLM apps now use RAG.

The Future: Bigger Windows, Smarter Attention

The race is on to expand context. Anthropic’s Claude 3, OpenAI’s GPT-4 Turbo, and Meta’s upcoming Llama 4 are all pushing past 100,000 tokens. Google’s Gemini 2.0 is rumored to have "adaptive context windows" - focusing more processing power on important parts of the text. There’s even research on "Ring Attention," a technique that splits the text across processors so that, in principle, the usable context keeps growing as more devices are added. It’s still experimental. But if it works, we’ll stop thinking about context windows as limits - and start seeing them as tuning knobs.

For now, the rule is simple: if your task needs memory beyond 8,000 tokens, plan for RAG, summarization, or chunking. Don’t rely on the model to remember. Build systems that help it remember.

What You Need to Know

If you’re using LLMs today, here’s what matters:

- Contextual understanding is real - but statistical, not human.

- Autoregressive models (GPT, Llama) are better for writing; masked models (BERT) are better for reading.

- Context windows aren’t infinite. GPT-3.5: 4K, GPT-4 Turbo: 128K, Claude 3: 200K.

- Information in the middle of long texts tends to get less attention. Use RAG or summarization to work around this.

- LLMs track relationships, tone, and intent - but only because they’ve seen it before, not because they understand it.

- For enterprise use, expect to spend 3-6 months learning prompt engineering and context management.

Contextual representations are what make modern LLMs feel alive. But they’re not magic. They’re math - complex, powerful, and still limited. The best users aren’t those who expect perfect understanding. They’re the ones who know how to work with the model’s strengths - and avoid its blind spots.

How do LLMs know what a word means in context?

LLMs use attention mechanisms to weigh the importance of surrounding words. Each word is converted into a high-dimensional vector, and the model calculates how much each nearby word influences the meaning. For example, in "I went to the bank," the model looks at "went," "to," and the verb structure to infer "bank" likely means a financial institution, not a riverbank. This happens across dozens of transformer layers, refining meaning step by step.
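To make the weighing step concrete, here is a minimal sketch of scaled dot-product attention in plain Python. Everything in it is an assumption for illustration: the two-number vectors (read them as "finance-ness" and "nature-ness") are invented, and the same vectors serve as queries, keys, and values, whereas a real transformer learns separate projections and uses thousands of dimensions.

```python
import math

# Hypothetical static embeddings: [finance-ness, nature-ness].
# "bank" starts out exactly halfway between the two senses.
STATIC = {
    "deposited": [1.0, 0.0],
    "money": [2.0, 0.0],
    "sat": [0.0, 1.0],
    "river": [0.0, 2.0],
    "bank": [1.0, 1.0],
}


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def contextual_vector(tokens, index):
    """Blend every token's vector into the one at `index`, weighted by attention."""
    vectors = [STATIC[t] for t in tokens]
    query = vectors[index]
    dim = len(query)
    # Score each token against the query, scaled by sqrt(dimension).
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
        for key in vectors
    ]
    weights = softmax(scores)
    # Weighted sum of the value vectors.
    return [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]


finance_bank = contextual_vector(["deposited", "money", "bank"], 2)
river_bank = contextual_vector(["sat", "river", "bank"], 2)
print("bank near money:", [round(x, 2) for x in finance_bank])
print("bank near river:", [round(x, 2) for x in river_bank])
```

The static vector for "bank" is identical in both sentences, but the blended result leans toward finance in one and toward nature in the other. That shift is the "contextual" part of a contextual representation.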

What’s the difference between GPT and BERT in handling context?

GPT predicts the next word, so it only looks backward - making it great for generating text. BERT fills in missing words and looks at both sides of the sentence - making it better for understanding questions or extracting facts. GPT is like writing a story; BERT is like answering a quiz.

Why do LLMs forget details in long conversations?

Because they have a fixed context window - the maximum number of tokens they can process at once. Beyond that, earlier text is dropped. Even with large windows (like 200,000 tokens), models pay less attention to information in the middle - a problem called "lost-in-the-middle." This is why chatbots often forget what you said after 8-10 turns. The solution is summarization or retrieval-augmented generation (RAG).
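The "earlier text is dropped" behavior can be sketched as a simple budget check. This is an illustration under stated assumptions, not how any particular chatbot is implemented: `count_tokens` is a crude stand-in that counts whitespace-separated words, where a real system would use the model’s own tokenizer, and the conversation is invented.

```python
def count_tokens(text):
    """Crude stand-in for a tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def fit_to_window(turns, max_tokens):
    """Keep the most recent turns whose combined size fits in max_tokens."""
    kept = []
    used = 0
    # Walk backward from the newest turn and stop once the budget is spent.
    for turn in reversed(turns):
        cost = count_tokens(turn)
        if used + cost > max_tokens:
            break
        kept.append(turn)
        used += cost
    return list(reversed(kept))


turns = [
    "My name is Dana and I use Python 3.12",
    "I get an ImportError on startup",
    "Here is the traceback from the log",
    "Which version am I using",
]

# With a 12-token budget only the last two turns survive, so the
# turn that mentioned the Python version never reaches the model.
for turn in fit_to_window(turns, max_tokens=12):
    print(turn)
```

The final question cannot be answered from what is left in the window, which is the "it forgets what I said" experience in miniature. Summarizing the dropped turns, or retrieving them on demand, is how systems work around it.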

Can LLMs truly understand meaning like humans do?

No. LLMs don’t understand meaning the way humans do. They don’t have experiences, emotions, or real-world knowledge. They learn statistical patterns from massive text datasets. When they get "bank" right, it’s because they’ve seen it paired with "deposit" or "ATM" millions of times - not because they know what money is. Their understanding is pattern-based, not grounded.

What’s the best way to handle long documents with LLMs?

Use Retrieval-Augmented Generation (RAG). Instead of feeding the whole document into the model, break it into chunks. Use a vector database to find the most relevant sections based on your question. Feed only those into the model. This avoids context window limits and reduces hallucinations. Most enterprise LLM applications use this method today.
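The chunk, retrieve, and feed steps can be sketched with the standard library alone. This is a toy under loud assumptions: word counts stand in for learned embeddings, a sorted list stands in for a vector database, and the manual text, function names, and prompt wording are all invented for the example. The final call to a model is left out.

```python
import math
import re
from collections import Counter


def chunk_words(text, size):
    """Split text into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def bag_of_words(text):
    """Stand-in for an embedding: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(chunks, question, k):
    """Return the k chunks most similar to the question."""
    q = bag_of_words(question)
    ranked = sorted(chunks, key=lambda c: cosine(bag_of_words(c), q), reverse=True)
    return ranked[:k]


def build_prompt(chunks, question):
    """Assemble a prompt containing only the retrieved chunks."""
    context = "\n---\n".join(chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


manual_chunks = [
    "To reset the router hold the reset button for ten seconds",
    "The warranty covers hardware defects for two years",
    "Firmware updates are installed from the admin page",
]
question = "How do I reset the router?"
top = retrieve(manual_chunks, question, k=1)
print(build_prompt(top, question))
```

Only the chunk about resetting the router ends up in the prompt, so the model works from a few dozen tokens instead of the whole manual. Swapping `bag_of_words` for a real embedding model is what lets retrieval match on meaning rather than shared words.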

How big should a context window be for my use case?

For simple chatbots or short replies, 4K-8K tokens (like GPT-3.5) is enough. For legal documents, research papers, or multi-turn technical conversations, aim for 32K-128K tokens (GPT-4 Turbo or Claude 3). If you’re processing books or long reports, 200K tokens is ideal - but only if you combine it with RAG to avoid the "lost-in-the-middle" problem.
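A rough sizing check can be written as a few lines of arithmetic. The sketch below assumes about 0.75 words per token, the ratio implied by "4,096 tokens - about 3,000 words" earlier in this article. Real token counts vary by tokenizer and language, and the 1,000-token reply budget is an arbitrary placeholder.

```python
import math

# Rule of thumb only; measure with a real tokenizer before relying on it.
WORDS_PER_TOKEN = 0.75

# Window sizes as quoted in this article.
WINDOWS = {"GPT-3.5": 4_096, "GPT-4 Turbo": 128_000, "Claude 3": 200_000}


def estimate_tokens(word_count):
    """Estimate how many tokens a text of `word_count` words will use."""
    return math.ceil(word_count / WORDS_PER_TOKEN)


def models_that_fit(word_count, reply_budget=1_000):
    """List the models whose window holds the text plus room for a reply."""
    needed = estimate_tokens(word_count) + reply_budget
    return [name for name, size in WINDOWS.items() if size >= needed]


for words in (3_000, 90_000, 150_000):
    print(words, "words ->", estimate_tokens(words), "tokens:", models_that_fit(words))
```

An empty list means the text does not fit in any of the listed windows once reply space is reserved, which is the signal to reach for chunking or RAG instead of a bigger model.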

- Sep, 16 2025

- Collin Pace

