Tag: large language models

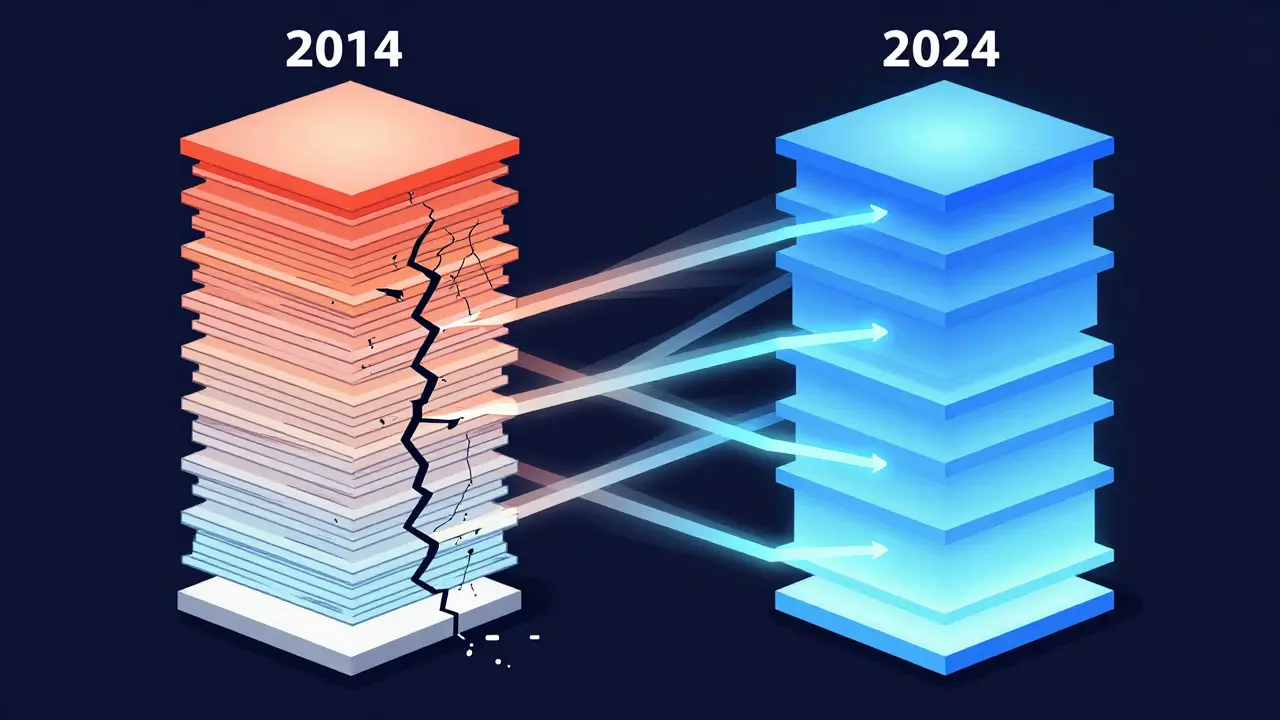

Transformer Pre-Norm vs Post-Norm Architectures: Which One Powers Modern LLMs?

Pre-Norm and Post-Norm are two ways to place layer normalization within a Transformer block. Pre-Norm is used in most modern LLMs because it trains stably at 100+ layers. Post-Norm works for small models but becomes hard to train stably at scale.

- Oct 20, 2025

- Collin Pace

- 6


Contextual Representations in Large Language Models: How LLMs Understand Meaning

Contextual representations let LLMs interpret words based on their surroundings rather than fixed meanings. From attention mechanisms to context windows, here’s how models like GPT-4 and Claude 3 make sense of language, and where they still fall short.

- Sep 16, 2025

- Collin Pace

- 0


How to Use Large Language Models for Marketing, Ads, and SEO

Learn how to use large language models for marketing, ads, and SEO without falling into common traps like hallucinations or lost brand voice. Real strategies, real results.

- Sep 5, 2025

- Collin Pace

- 8
