Category: Artificial Intelligence

Budgeting for Vibe Coding Platforms: Licenses, Models, and Cloud Costs

Vibe coding platforms promise to build apps through chat, but hidden costs in credits, tokens, and backend services can send your budget skyrocketing. Learn how much you really need to spend in 2026.

- Feb 8, 2026

- Collin Pace

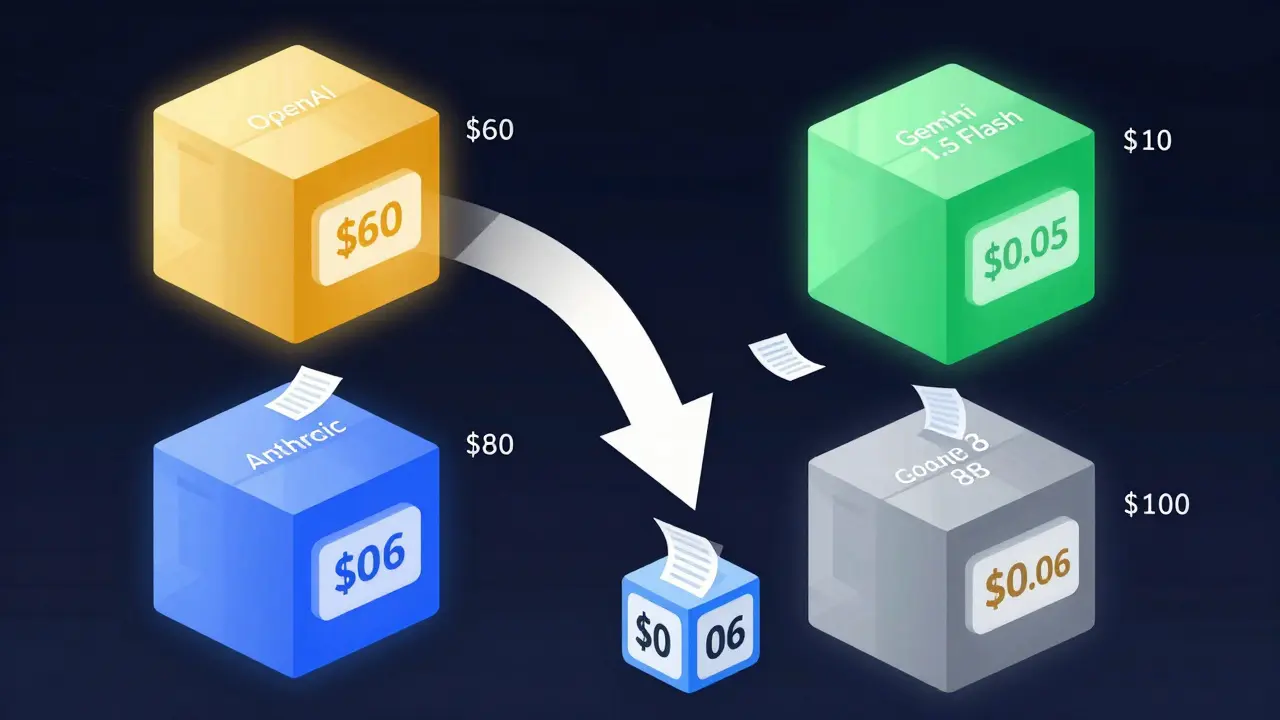

Comparing LLM Provider Prices: OpenAI, Anthropic, Google, and More in 2026

As of 2026, LLM prices have dropped 98% since 2023. Compare the costs, context windows, and real-world performance of OpenAI, Anthropic, Google, and Meta models to find the best value for your use case.

- Feb 7, 2026

- Collin Pace

Long-Context AI: How Memory and Persistent State Are Changing AI in 2026

Explore how AI systems now handle millions of tokens of context and maintain persistent memory across interactions. This article also covers the key breakthroughs of 2026 and their real-world impact on enterprises.

- Feb 6, 2026

- Collin Pace

v0, Firebase Studio, and AI Studio: How Cloud Platforms Support Vibe Coding

Firebase Studio, v0, and AI Studio are reshaping how developers build apps using natural language. Learn how each tool supports vibe coding and which one fits your workflow in 2026.

- Feb 2, 2026

- Collin Pace

Prompt Chaining vs Single-Shot Prompts: Designing Multi-Step LLM Workflows

Prompt chaining breaks complex AI tasks into sequential steps for higher accuracy, while single-shot prompts work best for simple tasks. Learn when and how to use each approach effectively.

- Feb 1, 2026

- Collin Pace

Chain-of-Thought Prompting in Generative AI: Master Step-by-Step Reasoning for Complex Tasks

Chain-of-thought prompting improves AI reasoning by making models show their work step by step. Learn how it boosts accuracy on math, logic, and decision tasks, and when it's worth the cost.

- Jan 29, 2026

- Collin Pace

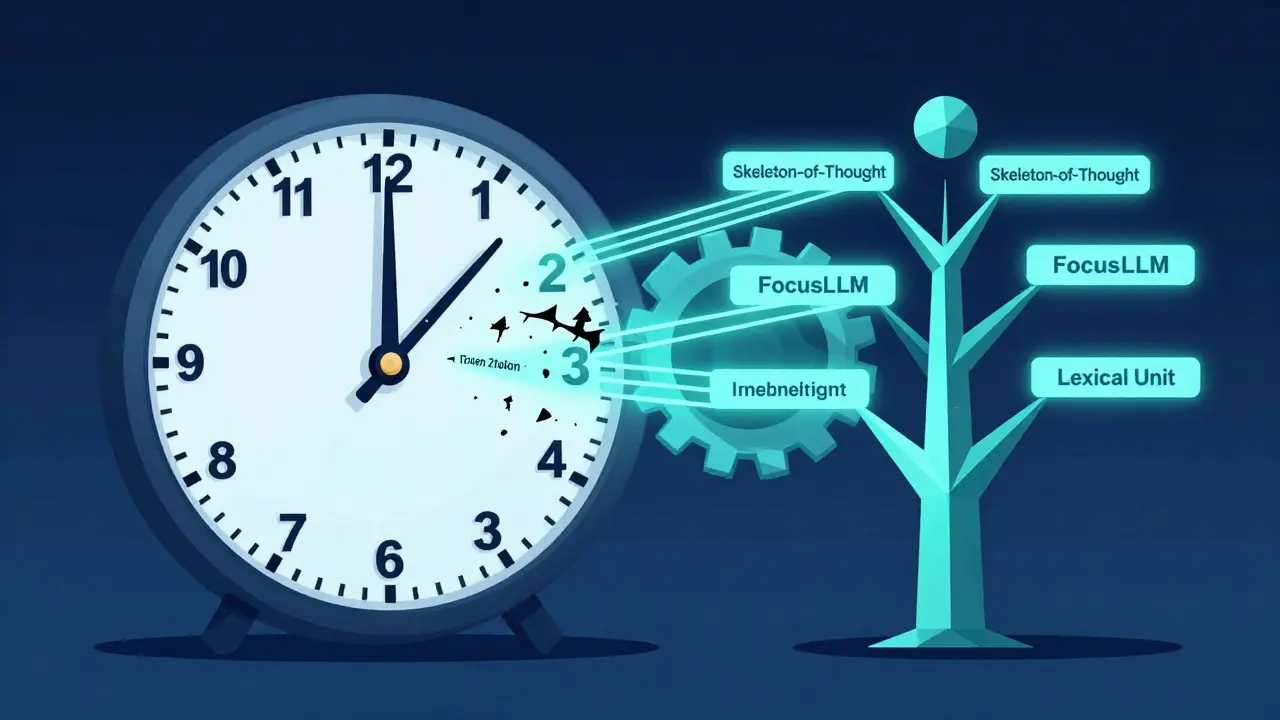

Parallel Transformer Decoding Strategies for Low-Latency LLM Responses

Parallel decoding strategies like Skeleton-of-Thought and FocusLLM cut LLM response times by up to 50% without losing quality. Learn how these techniques work and which one fits your use case.

- Jan 27, 2026

- Collin Pace

Low-Latency AI Coding Models: How Realtime Assistance Is Reshaping Developer Workflows

Low-latency AI coding models deliver real-time suggestions in the IDE with under 50 ms of delay, boosting productivity by 37% and restoring developer flow. Learn how Cursor, Tabnine, and others are reshaping coding in 2026.

- Jan 26, 2026

- Collin Pace

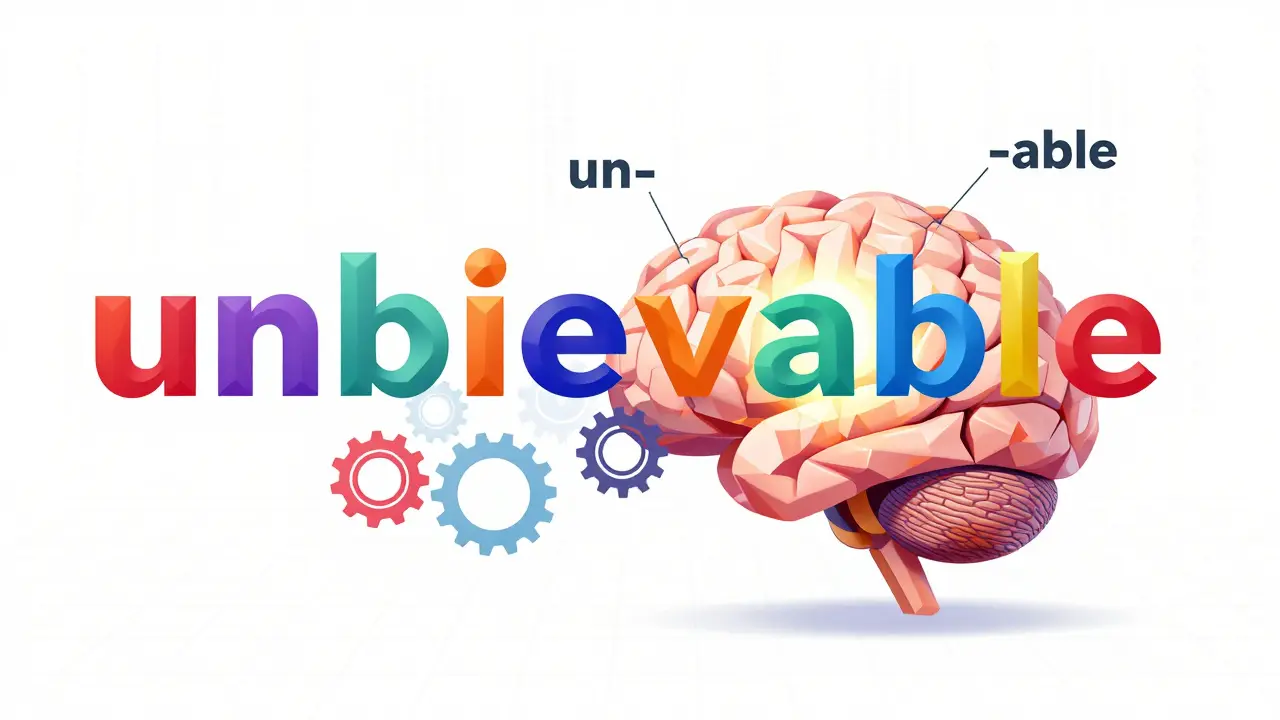

Understanding Tokenization Strategies for Large Language Models: BPE, WordPiece, and Unigram

Learn how BPE, WordPiece, and Unigram tokenization work in large language models, why they matter for performance and multilingual support, and how to choose the right one for your use case.

- Jan 24, 2026

- Collin Pace

Code Generation with Large Language Models: How Much Time You Really Save (and Where It Goes Wrong)

LLM-powered assistants like GitHub Copilot can cut coding time by 55%, but only if you know how to catch their mistakes. Learn where AI helps, where it fails, and how to use it without introducing security flaws.

- Jan 22, 2026

- Collin Pace

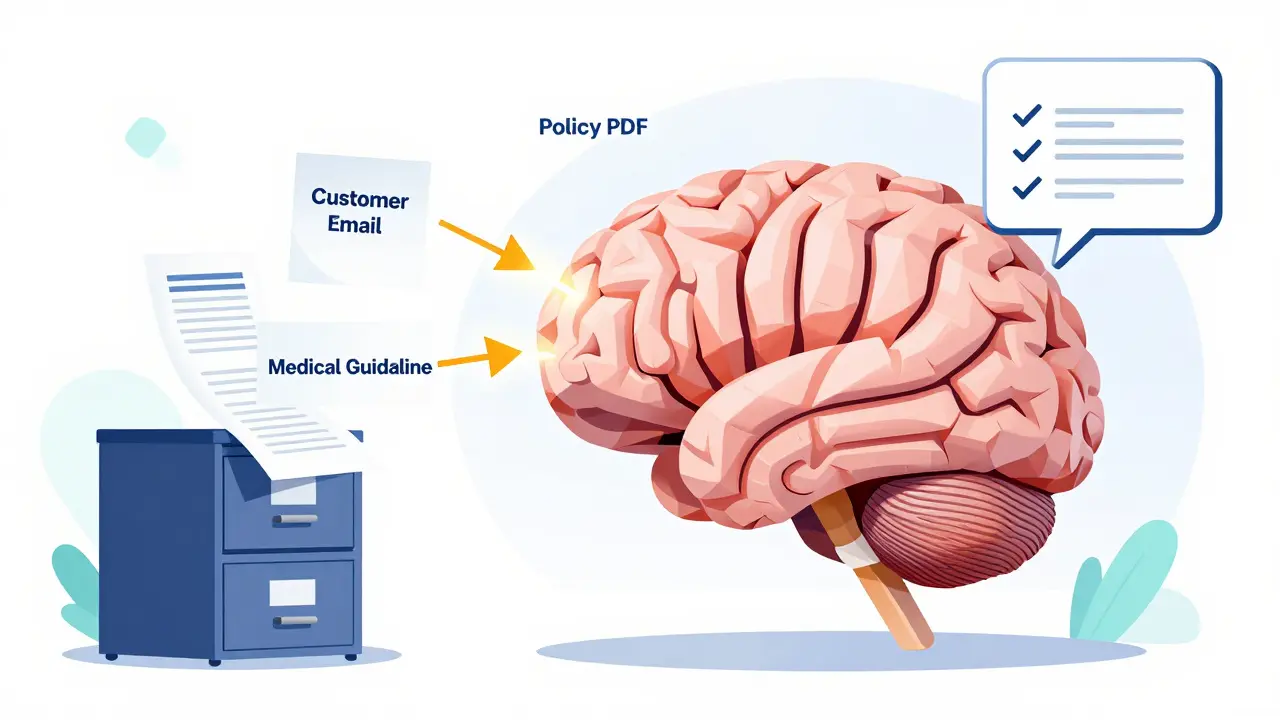

Search-Augmented Large Language Models: RAG Patterns That Improve Accuracy

RAG (Retrieval-Augmented Generation) boosts LLM accuracy by retrieving relevant, up-to-date information from your documents at query time. Discover how it works, why it beats fine-tuning, and the advanced patterns that cut errors by up to 70%.

- Jan 20, 2026

- Collin Pace

Value Capture from Agentic Generative AI: End-to-End Workflow Automation

Agentic generative AI is transforming enterprise workflows by autonomously executing end-to-end processes, from customer service to supply chain, cutting costs by 20-60% and boosting productivity without human intervention.

- Jan 19, 2026

- Collin Pace
