Generative Innovation Hub - Page 2

How to Negotiate Enterprise Contracts with Large Language Model Providers

Learn how to negotiate enterprise contracts with large language model providers to avoid hidden costs, legal risks, and poor performance. Key clauses on accuracy, data security, and exit strategies are critical.

- Jan 25, 2026

- Collin Pace

- 6

Understanding Tokenization Strategies for Large Language Models: BPE, WordPiece, and Unigram

Learn how BPE, WordPiece, and Unigram tokenization work in large language models, why they matter for performance and multilingual support, and how to choose the right one for your use case.

- Jan 24, 2026

- Collin Pace

- 5

Cost Management for Large Language Models: Pricing Models and Token Budgets

Learn how to control LLM costs with token budgets, pricing models, and optimization tactics. Reduce spending by 30-50% without sacrificing performance, using real-world strategies drawn from leading 2026 practices.

- Jan 23, 2026

- Collin Pace

- 9

Code Generation with Large Language Models: How Much Time You Really Save (and Where It Goes Wrong)

LLM-powered tools like GitHub Copilot can cut coding time by 55%, but only if you know how to catch their mistakes. Learn where AI helps, where it fails, and how to use it without introducing security flaws.

- Jan 22, 2026

- Collin Pace

- 7

Confidential Computing for Privacy-Preserving LLM Inference: How Secure AI Works Today

Confidential computing enables secure LLM inference by protecting data and model weights inside hardware-secured enclaves. Learn how AWS, Azure, and Google implement it, the real-world trade-offs, and why regulated industries are adopting it now.

- Jan 21, 2026

- Collin Pace

- 8

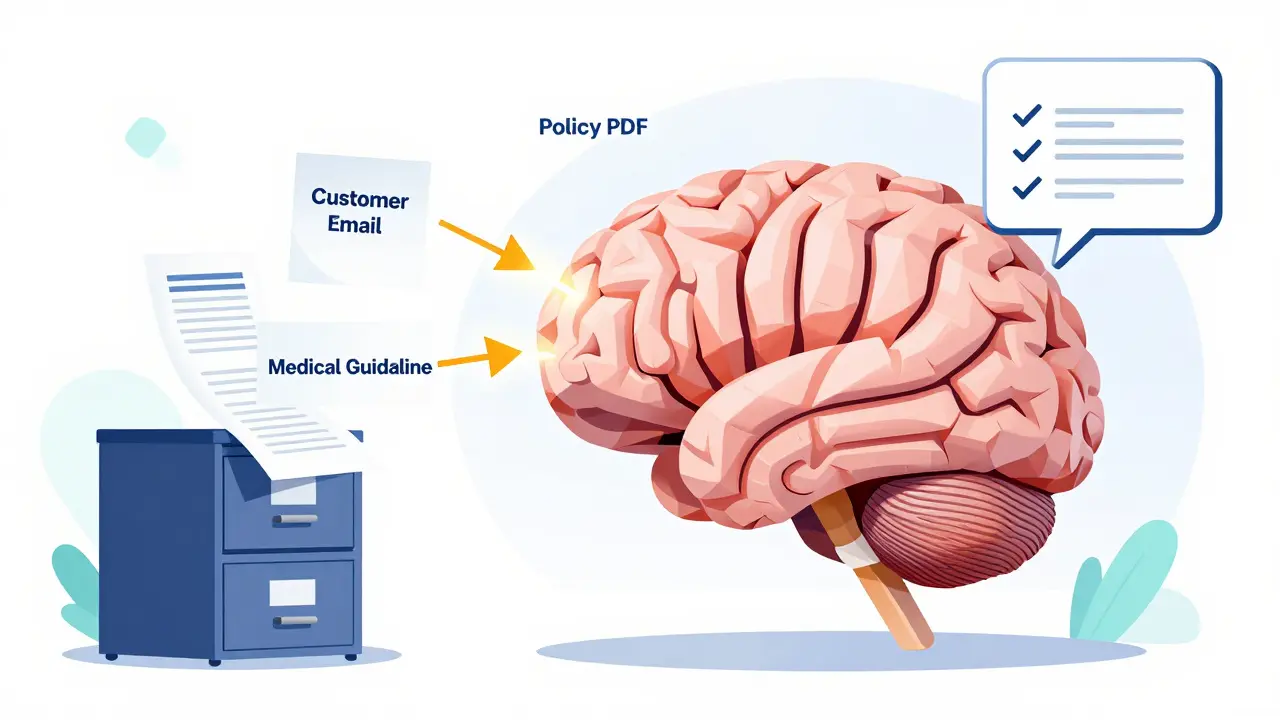

Search-Augmented Large Language Models: RAG Patterns That Improve Accuracy

RAG (Retrieval-Augmented Generation) boosts LLM accuracy by retrieving up-to-date information from your documents at query time. Discover how it works, why it beats fine-tuning, and the advanced patterns that cut errors by up to 70%.

- Jan 20, 2026

- Collin Pace

- 7

Value Capture from Agentic Generative AI: End-to-End Workflow Automation

Agentic generative AI is transforming enterprise workflows by autonomously executing end-to-end processes, from customer service to supply chain, cutting costs by 20-60% and boosting productivity without human intervention.

- Jan 19, 2026

- Collin Pace

- 10

Reusable Prompt Snippets for Common App Features in Vibe Coding

Reusable prompt snippets help developers save time by reusing tested AI instructions for common features like login forms, API calls, and data tables. Learn how to build, organize, and use them effectively with Vibe Coding tools.

- Jan 17, 2026

- Collin Pace

- 6

Supply Chain Security for LLM Deployments: Securing Containers, Weights, and Dependencies

LLM supply chain security is critical but often ignored. Learn how to secure containers, model weights, and dependencies to prevent breaches before they happen.

- Jan 16, 2026

- Collin Pace

- 10

Accuracy Tradeoffs in Compressed Large Language Models: What to Expect

Compressed LLMs cut costs and run faster, but they sacrifice accuracy in subtle, dangerous ways. Learn what really happens when you shrink a large language model, and how to avoid costly mistakes in production.

- Jan 14, 2026

- Collin Pace

- 9

How to Use Cursor for Multi-File AI Changes in Large Codebases

Learn how to use Cursor 2.0 for multi-file AI changes in large codebases, including best practices, limitations, step-by-step workflows, and how it compares to alternatives like GitHub Copilot and Aider.

- Jan 10, 2026

- Collin Pace

- 10

Long-Context Transformers for Large Language Models: How to Extend Windows Without Losing Accuracy

Long-context transformers let LLMs process huge documents without losing accuracy. Learn how attention optimizations like FlashAttention-2 and attention sinks help prevent drift, which models actually work, and where to use them without wasting money or compute.

- Jan 9, 2026

- Collin Pace

- 5
