Category: Artificial Intelligence - Page 2

Reusable Prompt Snippets for Common App Features in Vibe Coding

Reusable prompt snippets help developers save time by reusing tested AI instructions for common features like login forms, API calls, and data tables. Learn how to build, organize, and use them effectively with Vibe Coding tools.

- Jan 17, 2026

- Collin Pace

- 6

Accuracy Tradeoffs in Compressed Large Language Models: What to Expect

Compressed LLMs lower cost and run faster, but they sacrifice accuracy in subtle, dangerous ways. Learn what really happens when you shrink a large language model, and how to avoid costly mistakes in production.

- Jan 14, 2026

- Collin Pace

- 9

How to Use Cursor for Multi-File AI Changes in Large Codebases

Learn how to use Cursor 2.0 for multi-file AI changes in large codebases, including best practices, limitations, step-by-step workflows, and how it compares to alternatives like GitHub Copilot and Aider.

- Jan 10, 2026

- Collin Pace

- 10

Long-Context Transformers for Large Language Models: How to Extend Windows Without Losing Accuracy

Long-context transformers let LLMs process huge documents without losing accuracy. Learn how attention optimizations like FlashAttention-2 and attention sinks counter drift, which models actually work, and where to use them, without wasting money or compute.

- Jan 9, 2026

- Collin Pace

- 5

Evaluation Protocols for Compressed Large Language Models: What Works, What Doesn’t, and How to Get It Right

Compressed LLMs can look perfect on perplexity scores but fail in real use. Learn the three evaluation pillars (size, speed, and substance) and the benchmarks and tools (LLM-KICK, EleutherAI's evaluation harness) that actually catch silent failures before deployment.

- Dec 8, 2025

- Collin Pace

- 10

Citation and Attribution in RAG Outputs: How to Build Trustworthy LLM Responses

Citation and attribution in RAG systems are essential for trustworthy AI responses. Learn how to implement accurate, verifiable citations using real-world tools, data standards, and best practices from 2025 enterprise deployments.

- Nov 29, 2025

- Collin Pace

- 6

Designing Multimodal Generative AI Applications: Input Strategies and Output Formats

Multimodal generative AI lets apps understand and respond to text, images, audio, and video together. Learn how to design inputs that work, choose the right outputs, and use models like GPT-4o and Gemini effectively.

- Nov 24, 2025

- Collin Pace

- 7

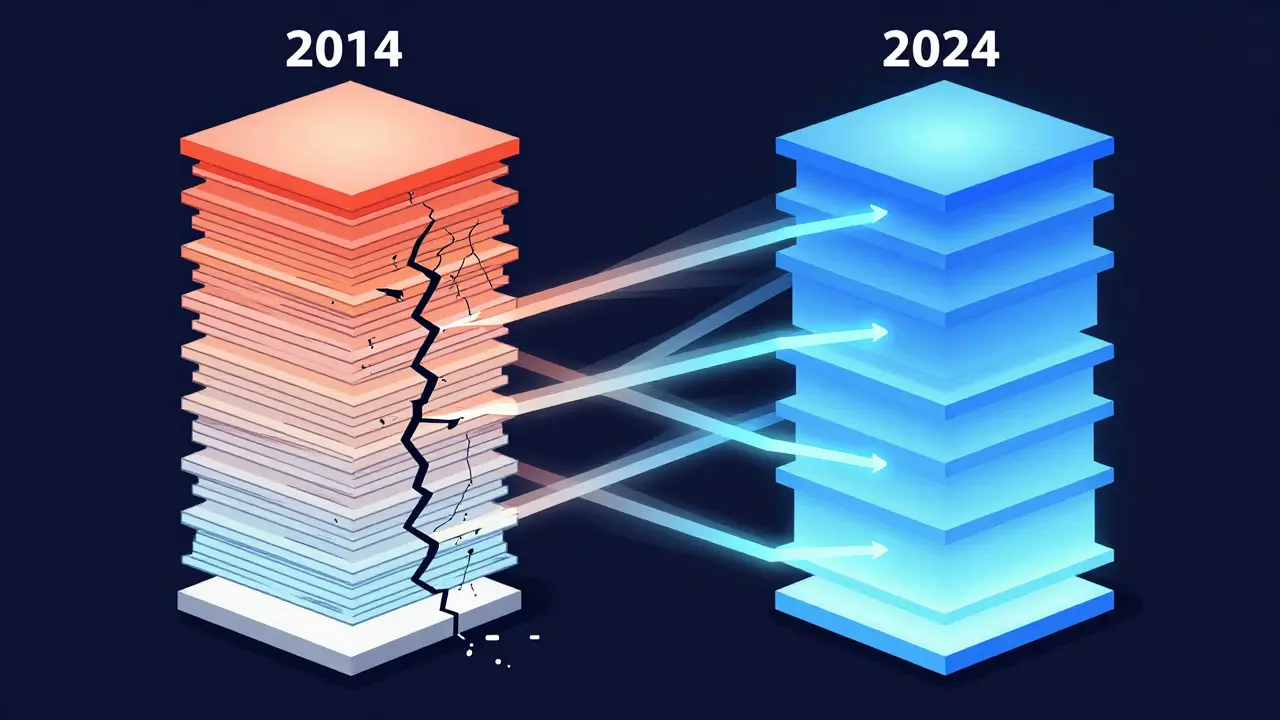

Transformer Pre-Norm vs Post-Norm Architectures: Which One Powers Modern LLMs?

Pre-Norm and Post-Norm are two ways to structure layer normalization in Transformers. Pre-Norm powers most modern LLMs because it trains stably at 100+ layers. Post-Norm works for small models but fails at scale.

- Oct 20, 2025

- Collin Pace

- 6

Contextual Representations in Large Language Models: How LLMs Understand Meaning

Contextual representations let LLMs understand words based on their surroundings, not fixed meanings. From attention mechanisms to context windows, here’s how models like GPT-4 and Claude 3 make sense of language, and where they still fall short.

- Sep 16, 2025

- Collin Pace

- 0

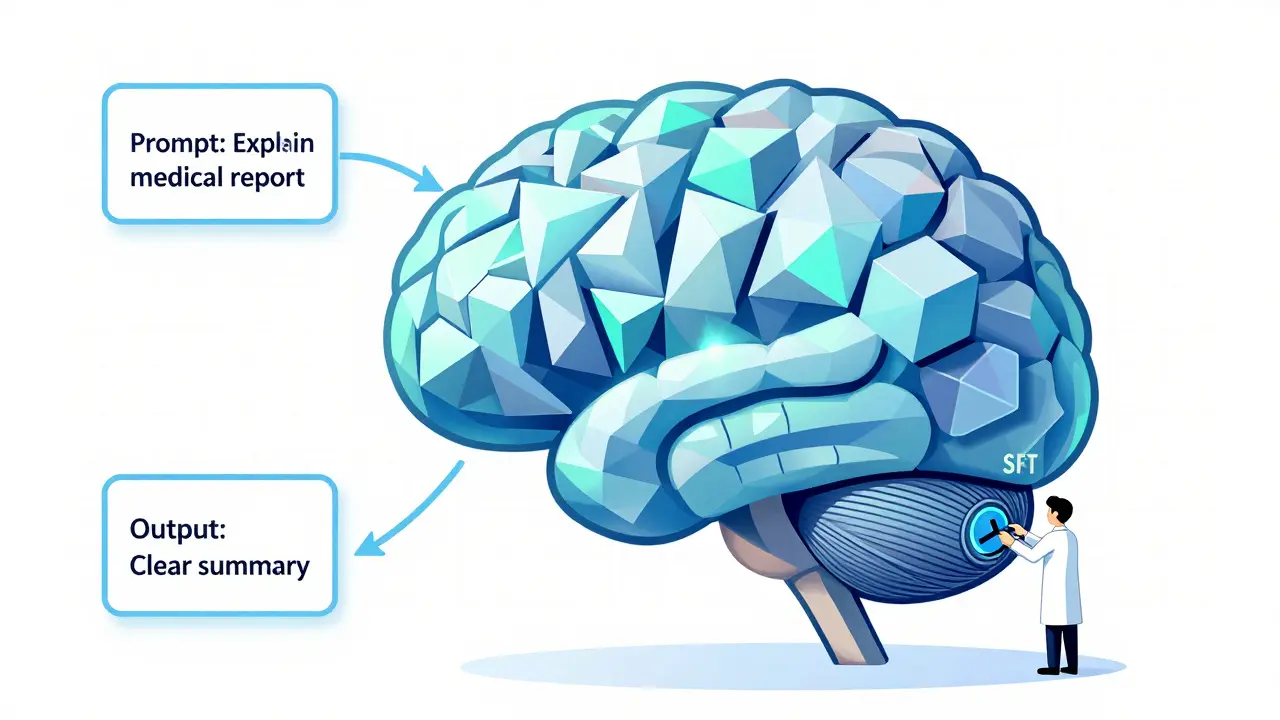

Supervised Fine-Tuning for Large Language Models: A Practical Guide for Real-World Use

Supervised fine-tuning turns generic LLMs into reliable tools using real examples. Learn how to do it right with minimal cost, avoid common mistakes, and get real results without needing an AI PhD.

- Jul 18, 2025

- Collin Pace

- 6
